Monday, December 14th 2020

Cyberpunk 2077 Does Not Leverage SMT on AMD Ryzen, Lower Core-Count Variants take a Bigger Hit, Proof Included

Cyberpunk 2077 does not leverage simultaneous multi-threading (SMT) on AMD Ryzen processors, according to multiple technical reviews that tested the game with various processors. The game does leverage the analogous HyperThreading feature on rival Intel Core processors. While this doesn't adversely affect performance of higher core-count Ryzen chips, such as the 16-core Ryzen 9 5950X or, to a lesser extent, the 12-core 5900X, lower core-count variants such as the 6-core 5600X take a definite performance hit. PCGH reports that a Ryzen 5 5600X is now matched by a Core i5-10400F, as the game takes advantage of HyperThreading and uses all 12 logical processors on the Intel chip, while ignoring SMT on the AMD one.

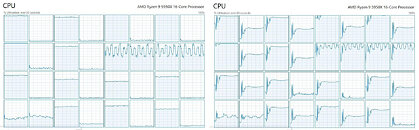

CD Projekt RED may have bigger problems on its hands than performance optimization for a PC processor, such as the game being riddled with glaring performance issues on consoles; but in the meantime, the PC enthusiast community swung into action with a fix. Authored by chaosxk on Reddit, it involves manually editing the executable binaries of the game using a hex editor, which tricks the game into using logical processors as cores. This fix has been found to improve frame-rates on AMD Ryzen machines. Before attempting the hex edit, make sure you back up your original executables. The screenshot below provides a before & after-patch view of Cyberpunk 2077 loading a Ryzen 9 5950X. You can learn more about this fix, and find a step-by-step guide, here.

Update 08:28 UTC: We have some technical details on what's happening.

A Reddit post by CookiePLMonster sheds light on what is possibly happening with the game. According to them, Cyberpunk 2077 reuses AMD GPUOpen pseudo-code to optimize its scheduler for the processor. It was originally designed to let an application use more threads when an AMD "Bulldozer" processor is used, but has the opposite effect when a non-Bulldozer AMD processor is detected. The game looks for the "AuthenticAMD" processor brand and "family = 0x15" (AMD K15 or Bulldozer/derivative), and only then engages "logical processors" (as identified by the Windows OS scheduler) as part of its Bulldozer optimization. When any other AMD processor is detected, including a newer one, the code makes the game scheduler send traffic only to the physical cores, and not to their logical processors.
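The check described above can be sketched in a few lines. This is a minimal illustration based only on the description in this article, not the game's or GPUOpen's actual code: the names `display_family`, `CpuInfo` and `worker_thread_count` are hypothetical, and the sample CPUID values are illustrative assumptions for a Bulldozer-era chip and a Ryzen 5000 chip.

```python
from dataclasses import dataclass

BULLDOZER_FAMILY = 0x15  # AMD family 15h ("Bulldozer" and derivatives)


def display_family(cpuid_eax: int) -> int:
    """Decode the CPU family from the EAX value of CPUID leaf 1."""
    base_family = (cpuid_eax >> 8) & 0xF         # bits 11:8
    extended_family = (cpuid_eax >> 20) & 0xFF   # bits 27:20
    # The extended field only counts when the base field is saturated (0xF).
    if base_family == 0xF:
        return base_family + extended_family
    return base_family


@dataclass
class CpuInfo:
    """Hypothetical container for the values the check needs."""
    vendor: str
    family: int
    physical_cores: int
    logical_processors: int


def worker_thread_count(cpu: CpuInfo) -> int:
    """Thread count as the article describes the game's behaviour."""
    if cpu.vendor == "AuthenticAMD" and cpu.family != BULLDOZER_FAMILY:
        # Any non-Bulldozer AMD chip, including Ryzen: physical cores only.
        return cpu.physical_cores
    # Bulldozer and non-AMD chips: all logical processors.
    return cpu.logical_processors


# Illustrative (assumed) leaf-1 EAX values.
bulldozer_family = display_family(0x00600F12)   # decodes to 0x15
ryzen_family = display_family(0x00A20F10)       # decodes to 0x19

ryzen_5600x = CpuInfo("AuthenticAMD", ryzen_family, 6, 12)
core_i5_10400f = CpuInfo("GenuineIntel", 6, 6, 12)

print(worker_thread_count(ryzen_5600x))     # 6
print(worker_thread_count(core_i5_10400f))  # 12
```

With both chips at 6 cores and 12 threads, the sketch hands the Intel part twice as many worker threads, which is consistent with the PCGH result mentioned earlier.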

Our own W1zzard dug into the binaries to verify these claims; find a commented decompilation below. The game indeed uses this archaic GPUOpen code from 2017 to identify AMD processors, and this is responsible for its sub-optimal performance with AMD Ryzen processors. This clearly looks like a bug or oversight, not an intentional choice.

Sources:

chaosxk (Reddit), BramblexD (Reddit), PCGH, VideoCardz, CookiePLMonster (Reddit)

84 Comments on Cyberpunk 2077 Does Not Leverage SMT on AMD Ryzen, Lower Core-Count Variants take a Bigger Hit, Proof Included

I don't think this qualifies as optimization at all, it's just hardware detection. Software isn't "optimized" for SMT.

Of course, those conspiracy theories never die.

Rest assured those theories are nonsense, and this problem has nothing to do with compilers.

This is certainly not excusable for a studio of this size and a game with this kind of budget.

But it's still very understandable how it happened; this is not a bug that is glaringly obvious to a normal game tester, and the core development team may only have a very limited set of hardware they test continuously throughout development.

This is about reading the CPUID instruction to detect CPU features, it has nothing to do with Windows.

The same instruction is used to detect CPU features on all platforms, including the cpuinfo tool on Linux.

Unfortunately, some extensions to CPUID are not standardized across CPU makers (and even microarchitectures), so it may require some extra interpretation to detect the correct core and thread count on various AMD, Intel and VIA CPUs.

But nevertheless, this "problem" is well known and only takes a few lines of code to detect correctly (at least for known CPUs so far, I can't guarantee for future CPUs).

You are mostly right.

The bug here is only about detecting the correct number of available threads, not whether a specific thread is using SMT or not; that's normally not controlled by the application. So the article claiming SMT is not leveraged is technically incorrect.

Didn't AMD boast about having hundreds of engineers dedicated to this?

At least this bug comes down to reading a single instruction, not something that requires any major effort from anyone.

-----

One additional thought:

If this is hard, just wait until Alder Lake with different cores arrives. There may be a lot of software relying on the CPUID instruction to fetch capabilities, but this instruction returns the features of the core, not the whole CPU, which can get messy if different cores have different ISA features.

Onto some actual discussion on the topic:

I made the modification to my exe and here is a sample scene. CPU is a 2950x (16c/32t) and GPU is a 3080, running 3440*1440.

Original: CPU is at 50% utilisation, GPU is at 65-76%. Result = 42FPS.

Modded: CPU is at 66% utilisation, GPU is at 51-62%. Result = 33FPS.

How many people tested this on their own rigs? Might be worth posting your specs and A/B comparisons as CPU utilisation != better experience on my end.
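For anyone posting A/B numbers, the relative change is easier to compare across rigs than raw FPS. A minimal sketch using the two results above (the helper name `percent_change` is just for illustration):

```python
def percent_change(before: float, after: float) -> float:
    """Relative change from `before` to `after`, in percent."""
    return (after - before) / before * 100.0


fps_original = 42  # unmodified exe
fps_modded = 33    # hex-edited exe

print(f"{percent_change(fps_original, fps_modded):.1f}%")  # -21.4%
```

So on this 16c/32t system the modded exe is roughly a fifth slower in that scene, despite the higher CPU utilisation.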

Solid 60fps on high 1080p with a solid stream using OBS studio.youtube.com/video/CGibpEJo3Ec/edit :toast: