Wednesday, March 3rd 2021

AMD Radeon RX 6700 XT: All You Need to Know

AMD today announced the Radeon RX 6700 XT, its fourth RX 6000 series graphics card based on the RDNA2 graphics architecture. The card debuts the new 7 nm "Navi 22" silicon, which is physically smaller than the "Navi 21" powering the RX 6800/RX 6900 series. The RX 6700 XT maxes out "Navi 22," featuring 40 RDNA2 compute units, amounting to 2,560 stream processors. These are run at a maximum Game Clock frequency of 2424 MHz, a significant clock speed uplift over the previous-gen. The card comes with 12 GB of GDDR6 memory across a 192-bit wide memory interface. The card uses 16 Gbps GDDR6 memory chips, so the memory bandwidth works out to 384 GB/s. The chip packs 96 MB of Infinity Cache on-die memory, which works to accelerate the memory sub-system. AMD is targeting a typical board power metric of 230 W. The power input configuration for the reference-design RX 6700 XT board is 8-pin + 6-pin.
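The headline numbers can be checked with simple arithmetic. Each RDNA2 compute unit carries 64 stream processors. Peak GDDR6 bandwidth is the bus width in bits times the per-pin data rate, divided by 8 bits per byte. A minimal Python sketch follows; the function names are illustrative, and the 96 MB Infinity Cache is not modeled, so the result is raw DRAM bandwidth only:

```python
"""Sanity-check the RX 6700 XT shader count and memory bandwidth."""

# RDNA2 compute units each contain 64 stream processors (two SIMD32 units).
STREAM_PROCESSORS_PER_CU = 64


def stream_processors(compute_units: int) -> int:
    """Total stream processors for a given number of RDNA2 compute units."""
    return compute_units * STREAM_PROCESSORS_PER_CU


def dram_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak DRAM bandwidth in GB/s.

    Each of the bus_width_bits pins moves data_rate_gbps gigabits per second;
    dividing by 8 converts gigabits to gigabytes. On-die Infinity Cache is
    deliberately not modeled here.
    """
    return bus_width_bits * data_rate_gbps / 8


if __name__ == "__main__":
    print(stream_processors(40))           # 2560
    print(dram_bandwidth_gb_s(192, 16.0))  # 384.0
```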

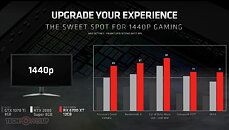

AMD is marketing the RX 6700 XT as a predominantly 1440p gaming card, positioned a notch below the RX 6800. The company makes some staggering performance claims. Compared to the previous generation, the RX 6700 XT is shown beating the GeForce RTX 2080 Super. NVIDIA marketed the current-gen RTX 3060 Ti as having the same performance outlook. Things get interesting where AMD shows that, in select games, the RX 6700 XT can even beat the RTX 3070, a card NVIDIA marketed as matching its previous-gen flagship, the RTX 2080 Ti. AMD is pricing the Radeon RX 6700 XT at $479 (MSRP), which is very likely to be bovine defecation, given the prevailing market situation. The company announced a simultaneous launch of its reference-design and AIB custom-design boards, starting March 18, 2021. AMD's performance claims follow.

104 Comments on AMD Radeon RX 6700 XT: All You Need to Know

In real conditions, the 6800 is around 10-15% faster than RTX 3070.

www.techspot.com/review/2174-geforce-rtx-3070-vs-radeon-rx-6800/

What features do you request from AMD? The Radeon is a more feature-rich product line, in general and historically.

In my experience, Navi10 undervolts better than Turing, but that's to be expected really, as TSMC's 7FF is better than their older 14nm process.

Samsung 8nm looks comparable to Navi10 based on your single post, and I'm assuming that Navi22 will undervolt in a very similar fashion to Navi10, being the same process and all.

The idea of a 6700XT or 3060 running at sub-100W is very appealing to me, and looking at the eBay prices of a 5700XT, I can likely make a reasonable profit by selling my 5700XT on if I can find a 6700XT or 3060 to play with.

See, I'd be running a battery of OCCT tests to work out the minimum stable voltage for each clock and then trying to work out where the beginning of diminishing returns kicks in for voltage/clocks.
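The per-clock voltage sweep described above boils down to three steps: find the lowest stable voltage for each clock, model the power, and pick the point with the best performance per watt. The following is a minimal sketch under stated assumptions. Performance is assumed to scale linearly with clock. Board power is assumed to follow a first-order CMOS model: a fixed static term plus a term proportional to f·V². The V/f table and both power constants are made-up placeholders, not measurements of any card:

```python
"""Toy search for the performance-per-watt sweet spot on a V/f curve.

All numbers below are hypothetical, chosen only to illustrate the method.
"""

from typing import List, Tuple

# Hypothetical (clock MHz, minimum stable voltage mV) pairs, standing in for
# the results of per-clock stability runs.
VF_TABLE: List[Tuple[int, int]] = [
    (1500, 750),
    (1650, 760),
    (1800, 800),
    (1950, 875),
    (2100, 975),
    (2250, 1100),
]

P_STATIC_W = 40.0  # hypothetical clock-independent power (leakage, memory, fans), watts
K_DYN = 0.063      # hypothetical dynamic-power coefficient, watts per (MHz * V^2)


def board_power_w(clock_mhz: int, voltage_mv: int) -> float:
    """First-order model: static power plus dynamic power proportional to f * V^2."""
    volts = voltage_mv / 1000.0
    return P_STATIC_W + K_DYN * clock_mhz * volts ** 2


def perf_per_watt(clock_mhz: int, voltage_mv: int) -> float:
    """Assumes performance scales linearly with core clock."""
    return clock_mhz / board_power_w(clock_mhz, voltage_mv)


def sweet_spot(table: List[Tuple[int, int]]) -> Tuple[int, int]:
    """Return the (clock, voltage) point with the highest modeled perf/W."""
    return max(table, key=lambda point: perf_per_watt(*point))


if __name__ == "__main__":
    for clock, mv in VF_TABLE:
        print(f"{clock} MHz @ {mv} mV: {board_power_w(clock, mv):6.1f} W, "
              f"{perf_per_watt(clock, mv):5.2f} MHz/W")
    print("sweet spot:", sweet_spot(VF_TABLE))
```

The static term matters. Without it, modeled perf/W reduces to 1/(k·V²), which would always favor the lowest clock in the table. The fixed overhead is what produces a sweet spot somewhere in the middle of the curve.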

It's not an idea that appeals to a lot of people, but I suspect somewhere between 1500-1800MHz is the sweet spot with the highest performance/Watt. So yes, I'd happily slow down the card if it has large benefits in power draw. If I ever need more performance, I'll ~~just buy a more expensive card~~ contact my AMD/Nvidia account managers and try to bypass the retail chain in a desperate, last-ditch effort to obtain a card with a wider pipeline and more CUs/cores.

So if the 6700 was bad, I was wondering how you rated the 3070.

That's baseless speculation.

People take stuff like Quake II RTX, don't get that 90% of that perf comes from quirks nested in quirks nested in quirks optimized for a single vendor's SHADERS, and draw funny conclusions.

One of the ray intersection issues (one that has kept its performance from improving drastically) is that you need to randomly access large memory structures. Guess who has an edge at that...

Uh oh, doh.

Let me try again: there is NO such gap, definitely not in NV's favor, in ACTUAL hardware RT perf; perf is all over the place.

github.com/GPSnoopy/RayTracingInVulkan

And if you wonder "but why is it faster in GREEN SPONSORED games, then?", it's because only a fraction of what happens in games for ray tracing is ray intersection.

Make sure to check the "Random Thoughts" section on GitHub; it's quite telling.

Random Thoughts

- I suspect the RTX 2000 series RT cores implement ray-AABB collision detection using reduced float precision. Early in development, when trying to get the sphere procedural rendering to work, reporting an intersection every time the rint shader was invoked allowed me to visualise the AABB of each procedural instance. The rendering of the bounding volume had many artifacts around the boxes' edges, typical of reduced precision.

- When I upgraded the drivers to 430.86, performance significantly improved (+50%). This was around the same time Quake II RTX was released by NVIDIA. Coincidence?

- When looking at the benchmark results of an RTX 2070 and an RTX 2080 Ti, the performance differences are mostly in line with the number of CUDA cores and RT cores rather than being influenced by other metrics. Although I do not know at this point whether the CUDA cores or the RT cores are the main bottleneck.

- UPDATE 2021-01-07: the RTX 30xx results seem to imply that performance is mostly dictated by the number of RT cores. Compared to Turing, Ampere achieves 2x RT performance only when using ray-triangle intersection (as expected per the NVIDIA Ampere whitepaper); otherwise, performance per RT core is the same. This leads to situations such as an RTX 2080 Ti being faster than an RTX 3080 when using procedural geometry.

- UPDATE 2021-01-31: the 6900 XT results show the RDNA 2 architecture performing surprisingly well in procedural geometry scenes. Is it because the RDNA2 BVH-ray intersections are done using the generic computing units (and there are plenty of those), whereas Ampere is bottlenecked by its small number of RT cores in these simple scenes? Or is RDNA2 Infinity Cache really shining here? The triangle-based geometry scenes highlight how efficient Ampere RT cores are in handling triangle-ray intersections; unsurprisingly, as these scenes are more representative of what video games would do in practice.
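For readers unfamiliar with the ray-AABB test mentioned in the first point, the textbook approach is the "slab" method. It intersects the ray with each pair of axis-aligned planes and keeps the overlap of the resulting parameter intervals. Below is a minimal Python sketch of that generic algorithm. It is not NVIDIA's or AMD's hardware implementation. It also uses double-precision floats, unlike the reduced precision the author suspects in RT cores:

```python
"""Slab-method ray vs. axis-aligned bounding box (AABB) intersection."""

import math
from typing import Optional, Sequence, Tuple

Vec3 = Sequence[float]


def ray_aabb(origin: Vec3, direction: Vec3,
             box_min: Vec3, box_max: Vec3) -> Optional[Tuple[float, float]]:
    """Return (t_enter, t_exit) along the ray, or None if the box is missed.

    Points on the ray are origin + t * direction for t >= 0.
    """
    t_near, t_far = -math.inf, math.inf
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if d == 0.0:
            # Ray is parallel to this pair of planes: it can only hit the box
            # if the origin already lies between them.
            if o < lo or o > hi:
                return None
            continue
        # Parameter values where the ray crosses the two planes of this slab.
        t1, t2 = (lo - o) / d, (hi - o) / d
        if t1 > t2:
            t1, t2 = t2, t1
        # Keep the intersection of all slab intervals seen so far.
        t_near, t_far = max(t_near, t1), min(t_far, t2)
        if t_near > t_far:
            return None
    if t_far < 0.0:
        return None  # the whole box lies behind the ray origin
    return max(t_near, 0.0), t_far


if __name__ == "__main__":
    unit_box = ((-1.0, -1.0, -1.0), (1.0, 1.0, 1.0))
    print(ray_aabb((0.0, 0.0, -5.0), (0.0, 0.0, 1.0), *unit_box))  # (4.0, 6.0)
    print(ray_aabb((3.0, 0.0, -5.0), (0.0, 0.0, 1.0), *unit_box))  # None
```

Reduced precision would show up exactly where the README describes it. Near the box edges, t_near and t_far are almost equal, so small rounding errors flip the hit/miss decision.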

Sorry, I cannot seriously talk about "but if I downscale and slap on TAA antialiasing, can I pretend I did not downscale?"

No, you can't. Or wait, you can. Whatever you fancy.

It's just, I won't.

Did you remove some of the points from the curve? My 1070 has a ton, and I'd hate to have to set each one to the same frequency, hah.

Here's an update.

2040-2055 MHz stable @ 925 mV (compared to stock 1965-1980 MHz @ 1075 mV). Max power draw 190 W. Max temp 61C on air. The 66C max temp reported in the pic is from periodically going back to stock settings, so yes, there is a 5-degree temperature decrease and a lot more MHz.

Fully stable.

Undervolt your Ampere cards people.

Also, we are getting a "bit" off topic, we should end this convo here or make a new thread lol.
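To put the undervolt above in perspective, a common first-order rule of thumb is that dynamic power scales with clock times voltage squared (P ∝ f·V²). Plugging in the midpoints of the reported clock ranges gives a rough estimate. This is only a sketch: it ignores leakage, memory and fan power, so it will not match measured board power:

```python
"""Rough dynamic-power estimate for the undervolt reported above."""


def dynamic_power_ratio(f_new: float, v_new: float,
                        f_old: float, v_old: float) -> float:
    """Ratio of dynamic power under the first-order model P ~ f * V^2."""
    return (f_new / f_old) * (v_new / v_old) ** 2


# Midpoints of the reported ranges: stock 1965-1980 MHz @ 1.075 V,
# undervolted 2040-2055 MHz @ 0.925 V.
STOCK_MHZ, STOCK_V = (1965 + 1980) / 2, 1.075
TUNED_MHZ, TUNED_V = (2040 + 2055) / 2, 0.925

if __name__ == "__main__":
    ratio = dynamic_power_ratio(TUNED_MHZ, TUNED_V, STOCK_MHZ, STOCK_V)
    print(f"modeled dynamic power: {ratio:.0%} of stock")
```

Under these assumptions, the undervolt cuts modeled dynamic power by roughly a quarter while raising clocks by about 4%. That is consistent with the lower temperatures reported.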

I'd say it was an interesting experience, but I've looked through the rose-coloured glasses before and I prefer to see the entire spectrum.

And with that, the ignore button strikes again!

2) DF is an embarrassment

Ah. In motion, that is. And from a sufficient distance, I bet.

That's OK then. As I recall, DLSS took this:

and turned it into this:

all while reviewers kept repeating that "better than native" mantra.

But one had to see that in motion, I'll remember that. Thanks!

I will just link to the video again.

Fantastic channel too. They do a great job on virtually all content: they do the lengthy investigation, present the findings in full, showing you the good, the bad, and the nuance, and then, on balance, make informed conclusions and recommendations.

It's the best analysis on the RT subject that I've seen on YouTube.

And it's still filled with pathetic shilling.

Yet, even without reading between the lines, you should have figured this:

Apples to apples, eh:

Typically, in any RT scenario, there are four steps.

1) To begin with, the scene is prepared on the GPU, filled with all of the objects that can potentially affect ray tracing.

2) In the second step, rays are shot out into that scene, traversing it and tested to see if they hit objects.

3) Then there's the next step, where the results from step two are shaded - like the colour of a reflection or whether a pixel is in or out of shadow.

4) The final step is denoising. You see, the GPU can't send out unlimited amounts of rays to be traced; only a finite amount can be traced, so the end result looks quite noisy. Denoising smooths out the image, producing the final effect.

So, there are numerous factors at play in dealing with RT performance. Of the four steps, only the second one is hardware accelerated - and the actual implementation between AMD and Nvidia is different...

...Meanwhile, PlayStation 5's Spider-Man: Miles Morales demonstrates that Radeon ray tracing can produce some impressive results on more challenging effects - and that's using a GPU that's significantly less powerful than the 6800 XT....

www.eurogamer.net/articles/digitalfoundry-2021-pc-ray-tracing-deep-dive-rx-6800xt-vs-rtx-3080

So, uh, oh, doh, you were saying?
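The four steps quoted above can be illustrated with a deliberately tiny 2D soft-shadow renderer. In this minimal Python sketch, the scene, the ray count and the box-filter denoiser are illustrative assumptions. The scene is a single circular occluder under a strip light. Real renderers use BVHs, hardware traversal and far more sophisticated denoisers. Step 2, `trace_visibility`, stands in for the ray traversal and intersection work that dedicated RT hardware targets:

```python
"""Toy 2D illustration of the four ray-tracing steps: scene, rays, shading, denoising."""

import random
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float]


@dataclass
class Circle:
    cx: float
    cy: float
    r: float


def segment_hits_circle(p: Point, q: Point, c: Circle) -> bool:
    """True if the segment p->q passes within radius r of the circle centre."""
    dx, dy = q[0] - p[0], q[1] - p[1]
    # Parameter of the point on the segment closest to the centre, clamped to [0, 1].
    t = ((c.cx - p[0]) * dx + (c.cy - p[1]) * dy) / (dx * dx + dy * dy)
    t = max(0.0, min(1.0, t))
    ex, ey = p[0] + t * dx - c.cx, p[1] + t * dy - c.cy
    return ex * ex + ey * ey <= c.r * c.r


def build_scene() -> List[Circle]:
    """Step 1: prepare the objects that can affect the rays (one occluder)."""
    return [Circle(cx=8.0, cy=2.0, r=1.5)]


def trace_visibility(x: float, scene: List[Circle], rays: int,
                     rng: random.Random) -> float:
    """Step 2: shoot shadow rays from a ground point at y=0 to random points
    on a strip light (x in [6, 10], y = 4) and return the unblocked fraction."""
    unblocked = 0
    for _ in range(rays):
        light = (rng.uniform(6.0, 10.0), 4.0)
        if not any(segment_hits_circle((x, 0.0), light, c) for c in scene):
            unblocked += 1
    return unblocked / rays


def shade(visibility: float, albedo: float = 0.8) -> float:
    """Step 3: turn the ray results into a colour (lit fraction times albedo)."""
    return albedo * visibility


def denoise(row: List[float], radius: int = 2) -> List[float]:
    """Step 4: smooth the noisy result with a simple box filter."""
    out = []
    for i in range(len(row)):
        lo, hi = max(0, i - radius), min(len(row), i + radius + 1)
        out.append(sum(row[lo:hi]) / (hi - lo))
    return out


def render(width: int = 32, rays: int = 4,
           seed: int = 0) -> Tuple[List[float], List[float]]:
    """Run all four steps for one row of pixels; returns (noisy, denoised)."""
    rng = random.Random(seed)
    scene = build_scene()
    noisy = [shade(trace_visibility(x + 0.5, scene, rays, rng)) for x in range(width)]
    return noisy, denoise(noisy)


if __name__ == "__main__":
    noisy, clean = render()
    print(" ".join(f"{v:.1f}" for v in noisy))
    print(" ".join(f"{v:.1f}" for v in clean))
```

With only 4 rays per pixel, the penumbra comes out visibly noisy, and the denoiser trades that noise for blur. This is the same trade-off the article describes, at toy scale.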

But yes, the second step is the hardware-accelerated one, and their measurements give a pretty good indication that Nvidia's RT hardware is more powerful at this point (probably simply by having more units). This is evidenced by where performance falls off as the number of rays increases: both cards fall off, but at different points.

Miles Morales on PS5 is heavily optimized using the same methods for performance improvements that RT effects use on PC, mostly to a higher degree. Also, clever design. The same Digital Foundry has a pretty good article/video on how that is achieved: www.eurogamer.net/articles/digitalfoundry-2020-console-ray-tracing-in-marvels-spider-man

For example, Quake 2 RTX and Watch Dogs Legion use a denoiser built by Nvidia and while it won't have been designed to run poorly on AMD hardware (which Nvidia would not have had access to when they coded it), it's certainly designed to run as well as possible on RTX cards.

A comparison of actual hardware RT performance has been linked in #85 here. There is no need to run around and "guess" things; they are right there, on the surface.

This passage:

the RTX 3080 could render the effect in nearly half the time in Metro Exodus, or even a third of the time in Quake 2 RTX, yet increasing the amount of rays after this saw the RTX 3080 having less of an advantage.

could mean many things. This part is hilarious:

In general, from these tests it looks like the simpler the ray tracing is, the more similar the rendering times for the effect are between the competing architectures. The Nvidia card is undoubtedly more capable across the entire RT pipeline

Remember which part of ray tracing is hardware accelerated? Which "RT pipeline", cough? Vendor-optimized shader code?

Yeah, in motion, of course in motion. I tend to play games at something on the order of 60-144 fps, not sitting and nitpicking stills, but for argument's sake, I'll do that too. If we're going to cherry-pick some native vs. DLSS shots, I can easily do the same and show the side of the coin that you conveniently haven't.

Native left, DLSS Quality right

And the real kicker after viewing what is, at worst, comparable quality where each rendering has strengths and weaknesses, and at best, higher overall quality...

But you appear to have made up your mind: you don't like it, you won't "settle" for it. Fine, suit yourself; nobody will make you buy an RTX card, play a supported game and turn it on. Cherry-picking examples to try and show how 'bad' it is doesn't make you come across as smart, and it certainly doesn't make you right. You could have at least chosen a game with a notoriously 'meh' implementation. Not to mention the attitude, yikes.

I can't convince you, and you can't convince me, so where do we go from here? Ignore each other?

It's not about "in motion" at all. What you present is the "best case" for any anti-aliasing method that adds blur, TAA in particular.

There is barely any crisp texture (a face, eh?) on which to notice the added blur.

It is heavily loaded with stuff that benefits a lot from antialiasing (hair, long grass, eyebrows).

But if you dare to bring in actual, real stuff from the very pic in your list, Death Stranding, DLSS takes this:

and turns it into this:

No shockers here; all TAA derivatives exhibit it.

NV's TAA(u) derivative adds blur to... the entire screen if you move your mouse quickly. Among other things.

It's a shame Ars Technica was the only site to dare point it out.

arstechnica.com/gaming/2020/07/why-this-months-pc-port-of-death-stranding-is-the-definitive-version/
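The smearing described here follows from how temporal accumulation works. TAA-style methods blend each new frame into a running history buffer, so anything the history gets wrong lingers for several frames. Below is a minimal 1D Python sketch with a white/black edge moving one pixel per frame. It deliberately omits the motion-vector reprojection and history clamping that real TAA (and DLSS) implementations use. It therefore exaggerates the effect and is not a model of any vendor's algorithm:

```python
"""Toy 1D temporal accumulation: a moving edge smears, a static one stays sharp."""

from typing import List


def render_edge(width: int, edge_pos: int) -> List[float]:
    """One frame: pixels left of edge_pos are white (1.0), the rest black (0.0)."""
    return [1.0 if x < edge_pos else 0.0 for x in range(width)]


def accumulate(history: List[float], current: List[float], alpha: float) -> List[float]:
    """Exponential blend: new = alpha * current + (1 - alpha) * history."""
    return [alpha * c + (1.0 - alpha) * h for h, c in zip(history, current)]


def simulate(width: int = 12, frames: int = 5, speed: int = 1,
             alpha: float = 0.1) -> List[float]:
    """Accumulate `frames` frames of an edge moving `speed` pixels per frame,
    with no reprojection of the history buffer."""
    history = render_edge(width, 2)
    for frame in range(1, frames + 1):
        current = render_edge(width, 2 + speed * frame)
        history = accumulate(history, current, alpha)
    return history


if __name__ == "__main__":
    print("static:", " ".join(f"{v:.2f}" for v in simulate(speed=0)))
    print("moving:", " ".join(f"{v:.2f}" for v in simulate(speed=1)))
```

With the edge static, the blend reproduces the sharp frame. Once the edge moves, the pixels it crossed in the last few frames end up as intermediate greys instead of pure white. Lowering `alpha` (more weight on history) makes the trail longer.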

My 6800 XT, which I paid $1,100 USD for on eBay 4 months ago, just died. It's ALREADY DEAD???? CAN'T EVEN RETURN IT. I contacted Sapphire for an RMA, but it doesn't look like it's going to be honored.

I have no choice, I NEED SOMETHING... I'm gonna kill someone over a 6700 XT. I can't get another 6800 XT for less than 2 grand USD, so I guess I'm done with PC gaming if I can't get one of these?

Yes, it should be a great upgrade from a 1080/1080 Ti for sure,