Tuesday, April 27th 2021

Possible NVIDIA GeForce RTX 3080 Ti Launch Date Surfaces

NVIDIA is likely to launch its upcoming GeForce RTX 3080 Ti high-end graphics card on May 18, 2021, according to a Wccftech report citing a reliable source on Chinese tech forums. According to this source, the product could be announced on May 18, with reviews going live on May 25 and market availability following on May 26.

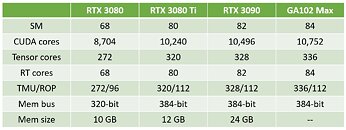

NVIDIA is likely designing the RTX 3080 Ti to better compete against the Radeon RX 6900 XT. Based on the same 8 nm GA102 silicon as the RTX 3080 and RTX 3090, this SKU will be armed with 10,240 CUDA cores, 320 Tensor cores, 80 RT cores, 320 TMUs, 112 ROPs, and the chip's full 384-bit wide GDDR6X memory interface, holding 12 GB of memory running at around 19 Gbps, according to VideoCardz. NVIDIA is expected to price the card competitively against the RX 6900 XT. AMD, meanwhile, has refreshed the RX 6900 XT with higher clock speeds, released as special SKUs through its AIB partners.

Sources:

ITHome, WCCFTech, VideoCardz

59 Comments on Possible NVIDIA GeForce RTX 3080 Ti Launch Date Surfaces

And it reflects what DIY people in that particular region are buying.

And Germany is one hell of a market. Let's compare it to AMD's last reported quarter, which came even before RDNA2 sales hit hard:

Quarterly Financial Segment Summary

- Computing and Graphics segment revenue was $2.10 billion, up 46 percent year-over-year and 7 percent quarter-over-quarter primarily driven by Ryzen processor and Radeon graphics product sales growth.

So, that is what, about 8 billion for a full year, for, okay okay, both CPUs and GPUs, but so what. Note how AMD's 7nm, highly efficient RDNA2 is also on a TSMC node ;)

I'm not touching Samsung's 8nm with a ten-foot pole. And luckily, we can't anyway :D They're on a steep learning curve and it shows. Much of their fab tech, while making strides, isn't quite there yet. Remember, pre-Ampere we all banked on getting that gen on TSMC. Even Nvidia did, as you can see from the stack they released initially and are still expanding. It's a complete and utter mess and there is only one explanation. Even with all the compromises and tweaks, the TDPs have exploded.

Just seeing those 3x 8-pin connectors on most AIB cards is enough to make me back away.

Pascal was phenomenal at launch, with only a single 8-pin connector on the GTX 1080. Even the 1080 Ti only uses 8+6-pin.

My 1080 Ti Trio is using 2x 8-pin.

I hope the RTX 40xx and RX 7000 series have much lower TDPs and power consumption. Or do Nvidia and AMD want to push 500W TDPs???

There is no necessity involved here; it's Nvidia shoveling RT marketing BS with nothing to show for it. Oh yay, our god rays and shadows are real-time now, and cost 3x as many resources. I sincerely can't even begin to care. Games are about gameplay, and graphics are just a tiny part of the puzzle, so graphics cards shouldn't suddenly be moving to different price points for what is essentially similar performance. That only happens if the technology is overpriced or underdelivers. And it does both.

The only real advance I've seen in graphics for gaming is not RT, but rather the highly improved algorithms for what is essentially (SS)AA. The upscale/downscale/approximation trick is almost down to perfection. Is that due to RT? I suppose the lukewarm reception of RTX across the gaming industry, which stems directly from its immense performance hit, has indeed been a primary driver behind DLSS. But another major influence would have inspired that development regardless: higher resolutions. They enable higher render targets, which in turn inspire tricks to keep things playable. But our raster-based tech is so efficient that even the jump to 4K was doable within two generations, coming from 1080p "mid range even slaughters it" territory.

I'm eagerly awaiting a normally priced mid-to-high-end GPU in the x70/x80 tier that is not overloaded with promises that won't be kept. In that sense, Pascal had everything: superb efficiency, smaller dies, higher clocks and much more VRAM per % of relative performance... we've only taken steps back ever since... and stacked new tech on top to still keep things in check. It feels like two steps forward and one step back, or perhaps even two at the end of the day.

A clear sign of immature and rushed tech that was needed mostly to drive the share price and create new demand where, in fact, all demand was already satisfied. While I understand companies need to make money, this RTX move just oozed greed at the expense of everything else that would make it a good deal. It pre-empted a slow development in order to somehow control it. It's disgusting, and we should not support it, as it doesn't lead to better games or gaming.

48GB VRAM, 6 8-pin power connectors and a 5-slot cooler.

Or maybe it'll be the Quadro RTX 8000 on Ampere, so 48GB instead of 24GB VRAM.

VRAM gonna get toasty though.