Thursday, August 28th 2008

ATI Deliberately Retards Catalyst for FurMark

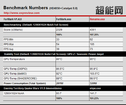

It is a known flaw that some models of the Radeon HD 4800 accelerators fail oZone3D FurMark, an OpenGL-based graphics benchmark application that has been found to stress the Radeon HD 4800 series enough to cause overheating, artifacts, or even driver crashes. The Catalyst 8.8 drivers have been found to treat the FurMark executable differently based on its file name. Expreview tested this hypothesis by benchmarking a reference-design HD 4850 board using the Catalyst 8.8 driver, with two runs of FurMark. In the first run, the test completed with a low score, much lower than what successful runs on older drivers could churn out. Suspecting that the driver might be using an internal profile specific to the FurMark executable, Expreview renamed the furmark.exe file, so that the driver could not tell it was FurMark being run. Voilà! The margin by which the renamed executable led "furmark.exe" shows that the driver behaves differently. This is shady, since the Radeon HD 4800 had almost become infamous for failing FurMark, and passing it with a low score at least looked better than failing it altogether.

Expreview caught this flaw while testing the PowerColor Radeon HD 4870 Professional Cooling System (PCS+), when it noticed odd behaviour with the newer driver. Successive BIOS releases didn't fix the issue; in fact, it only got worse, with erratic fan behaviour caused by a "quick-fix" BIOS that PowerColor issued (covered here).

Source:

Expreview

89 Comments on ATI Deliberately Retards Catalyst for FurMark

There are two ways to look at it.

A. They try to keep the majority of their cards cool and functioning, apparently by using drivers now.

OR

B. They wind up like Nvidia, with a recall-level failure rate. Right now ATI/AMD can't afford that. Hell, look what it did to Nvidia's stock. Try and tell me ATI could handle something like that. They are just getting back into high-end competition.

B is probably the right choice, but right now they can't afford it. Hopefully AMD's new CPUs will help pull them out and get them back on stable ground. Honestly, what would people rather have them do? Seriously, think about that for a minute!

And remember, I'm an Nvidia fan, and I still don't want to see ATI take the hit Nvidia did earlier this year.

I think ATI should have put something in the 8.8 release notes as an excuse, though, because after all the specific circumstances are very limited: no game now or in the future is likely to do what FurMark does, and it's mostly an issue for OC'ed cards.

I wonder, though, whether the companies that released pre-OC'ed cards have something to do with it. I'm guessing those cards show the same issues, but then you can't blame the OC'ing customer.

I also wonder whether the brand of RAM has an influence. It seems there are two kinds of RAM used on 4850s now, and in my experience the RAM is what gets bloody hot, and it probably heats up the rest of the card a lot too, including the heatsink that sits on the VRMs.

Whatever the reason behind it, be it the cards overheating and needing a higher fan speed/better cooling or whatever, it is still ATi's responsibility to provide us with a quality product that will last.

Currently the cards aren't required to run at full load under normal gaming conditions, but as new games come out, the cards will be pushed harder and harder. And if they are required to do more than graphics, for example if PhysX is offloaded to them, they are put under even more load.

Personally, I would be worried if my graphics card was unstable under load. But I am also someone who doesn't like my processor to be unstable under 100% load, even if it functions perfectly except under 100% load and will never really see 100% load.

Anyway, that's the point, both yours and newtekie's. It's not the benchmark, it's what this can tell us. Nor is the issue whether the card is safe "on average", because, as I said, there are many, many places where the (usually rather favorable) conditions used to test the cards can't be replicated. The same goes for all those who say it has never failed for them. Average Joe won't have the same cooling as the enthusiasts here, and these cards are not only for enthusiasts, so the fact that changing the cooler or manually raising the fan speed makes the card run a lot better doesn't matter. For example, in some places summers are very hot and the air is still 96%+ humid. Not everybody has central AC, and even if you do, it will cost you a fortune to fight the hot, humid air outside. I know for sure that I don't live in the hottest and most humid place in the world; I'd be surprised if it were even above average, and still my GPUs usually run 5-10C hotter in summer. That's with the case open and a big fan blowing directly into it! Sometimes it doesn't matter how much air you throw at the thing, because it has already warmed the surrounding air, and because that air is humid it doesn't circulate as well as "normal" air would (what is normal anyway?). That's just one of the possibilities.

Another good point is that the card will get worse as it ages, not only because cards inherently degrade, but most importantly because the average Joe never cleans the dust out of the case and the heatsinks. If cards that are one month old are failing (and don't say it only happens in this benchmark), what will happen next year?

My friend had the same problem with his 8800 GT: the fan speed would not budge automatically. Playing Crysis, the screen would scramble red and the computer would freeze because the GPU was at 115C, while the fan speed stayed at 40%. Yeah, he could turn the fan speed up manually, but then you have to do that every time... so he got that Xigmatek HDT cooler for it, and it never passes 60C now...

My 3870 has the Zerotherm cooler on it, and that thing is pretty nice... I don't see why the reference design doesn't include a heatpipe.

It's like they go out of their way to find the absolute biggest bunch of idiots to come up with a plan for reference coolers, and then pick the worst plan of the bunch. Why even bother making a card if you can't cool it right?

The fault lies mostly with ATI and Nvidia. I think they need to make a WAY better reference cooler, have no reference cooler at all, or chill out with the huge cards... Manufacturers like XFX, PowerColor and ASUS put the good coolers on because nobody will buy a reference card.

It would be nice to keep it all reference, since that means cheaper for everybody anyway... Reference coolers, single-slot ones anyway, have been done since the 2900s came out, and they keep getting worse... A company puts together 15,000 reference cards and tests them, and probably like 75% fail; of that 75%, probably like 50% get sent out anyway so they don't lose their ass, and now everybody is pissed off at ATI again... ahaha

As for those who are saying "it's just one benchmark", what if a game is released that requires the same amount of processing power? Then it does become a real issue. So saying "it's only one application" is no real excuse.

Now, if this is only happening to OCed cards, or to some non-reference designs, then ATI isn't to blame at all. They put forth a reference design, and they are not responsible for other manufacturers' choices when they decide to deviate from it.

The easy solution is to use RivaTuner and set the fan to 100%, but people shouldn't need to do this!

I only buy cards with slim coolers if I plan to bin the piece of junk immediately and replace it with something beefy like a Thermalright VGA cooler; otherwise I only buy cards with a decent two-slot cooler.

Bad, bad AMD :slap:

And can't artifacts appear because of bugs in the program? For example, when I play Mass Effect on my Radeon X1700-based laptop, I sometimes get some flickering in the water, ONLY in the Virmire facility. I went through some intense combat scenes without any issues, so overheating is clearly not the cause.

Oh, and FurMark also rapidly raises the GPU's temperature, which again shows it's really taxing it.

The thing with games is that they display all kinds of objects and textures and wait on the AI and in-game events, whereas FurMark just runs some very tight shader programs in loops on the GPU itself. So it uses the GPU at max but, I expect, doesn't use all of its functions the way a game does. That's why it's not a big worry for gaming: games are unlikely to stress a GPU in just that way, in such a localised loop. However, when the GPU is used only for calculations, as CUDA does on Nvidia, it might be an issue, but then I guess you can just reduce the load a bit and not run it at 100%.

Makes you wonder whether ATI's folding client causes undue stress. You would not see it, since it doesn't push the results to a display at full speed, but I guess if that were an issue people would notice, since the client would detect calculation errors and their scores would drop.

We are paying for the card plus a pre-installed cooler that just isn't up to the job of keeping the GPU cool enough (none of this >70C at full load nonsense!). I would rather they also sell bare cards for, say, £10 less than ones with a fitted fan, leaving it to the customer to buy their own cooler; it isn't any more difficult than fitting a third-party CPU cooler on a motherboard anyway...

To make matters worse, they throttle the fan speed on those crappy coolers to ~40%, retards! :cry:

You can't compare the X1950 with today's powerhouse flamethrowers. My old X1950 GT can easily be clocked to insane levels with just a crappy air-cooled Arctic Accelero X2 heatsink with an 80mm fan strapped on top, and the temps are still pretty low.