Sunday, September 14th 2008

GeForce GTX 260 with 216 Stream Processors Pictured, Benchmarked

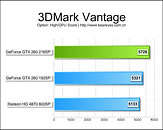

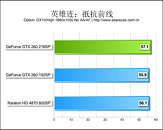

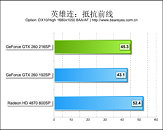

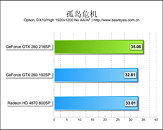

NVIDIA is preparing a new version of the GeForce GTX 260, as reported earlier, with a revision that carries 216 shader units (against 192 for the original GTX 260). Chinese website Bear Eyes has pictured the new GPU. Apart from the increased shader count, which should provide a significant boost to shader compute power, other GPU parameters such as clock speeds remain the same. The core features 72 texturing units and 28 ROPs. It is technically called G200-103-A2 (the older core was G200-100-A2). The card reviewed by Bear Eyes was made by Inno3D and is called the GeForce GTX 260 Gold. This shows that the GTX 260 brand name is here to stay.

The card continues to have a 448-bit wide GDDR3 memory bus with 896 MB of memory, and features 1.0 ns memory chips made by Samsung. On to the benchmarks: NVIDIA finally manages to comprehensively outperform the Radeon HD 4870 512M in its category. Benchmark graphs for (in order) 3DMark Vantage, Company of Heroes: Opposing Fronts (without and with AA at 1680x1050 px), and Crysis are provided below. To read the full (Google Translated) review, visit this page.
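To put the quoted specifications in perspective, here is a minimal Python sketch of the arithmetic behind them. The shader counts, bus width, memory size, and 1.0 ns chip rating come from the article; the 32-bit width per memory chip is an assumption (typical for GDDR3, but not stated by the source), and the function names are purely illustrative.

```python
# Figures quoted in the article.
OLD_SHADERS = 192        # original GTX 260
NEW_SHADERS = 216        # revised GTX 260
BUS_WIDTH_BITS = 448
MEMORY_MB = 896
MEMORY_CYCLE_NS = 1.0    # Samsung chip rating

# Assumption (not from the article): each GDDR3 chip has a 32-bit interface.
CHIP_WIDTH_BITS = 32


def percent_increase(old, new):
    """Relative increase from old to new, in percent."""
    return (new - old) / old * 100.0


def rated_clock_mhz(cycle_ns):
    """Rated clock implied by a cycle time: 1 / (cycle_ns * 1e-9 s), in MHz."""
    return 1000.0 / cycle_ns


shader_gain = percent_increase(OLD_SHADERS, NEW_SHADERS)
chip_count = BUS_WIDTH_BITS // CHIP_WIDTH_BITS
mb_per_chip = MEMORY_MB / chip_count

print(f"Shader units: +{shader_gain}%")
print(f"Rated memory clock: {rated_clock_mhz(MEMORY_CYCLE_NS)} MHz")
print(f"Memory chips: {chip_count} x {mb_per_chip} MB")
```

With clock speeds unchanged, the extra shader units give a theoretical shader throughput increase of the same proportion; actual benchmark gains depend on how shader-bound each test is and may well be smaller.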

Source:

Bear Eyes

83 Comments on GeForce GTX 260 with 216 Stream Processors Pictured, Benchmarked

I suppose ATI stopped doing it, first, because the architecture they decided to use didn't permit it very well (you lose 25% of the chip with each cluster on R600/RV670, ring bus, the arrangement of TMUs) and at the same time because yields were high enough not to justify the move. That, along with the fact that they didn't push the fab process to its limits. Now for RV770, the only one of the above arguments that is still valid is that they probably have good enough yields, as there's nothing preventing them from doing it again, so I dunno.

You have to take into account that they already have one SP for redundancy on each SIMD array, so I suppose that already works out well for them. Even if they have to throw away some chips, they probably save some money because they don't have to test every chip to see which units work, just how far in MHz it can go. You lose a bit in manufacturing, you save a bit on QA, I guess.

I read through this forum a lot: reviews, tips/tricks, etc. And I definitely agree with the point made earlier that people get so fanboy'd up about things. From what I can see, many people stick their nose in and argue about things they know nothing about, other than the fact that they have some blind loyalty to a brand.

What people fail to realise is that being devoted to one company blinds them from the fact that another company may release a product that is better for them, cheaper etc.

I agree with having a healthy conversation / debate about the pros and cons of a product, but FFS stop getting so immature about it all.

Next time I will post something worthwhile. ;)

Also, it takes two GTX 280s to beat one 4870X2, so $549 or $840.