AMD Ryzen AI MAX+ 395 "Strix Halo" APU Benched in 3DMark, Leak Suggests Impressive iGPU Performance

Late last month, an AMD "How to Sell" guide for the Ryzen AI MAX series appeared online, and its contents provided an early preview of the Radeon 8060S iGPU's prowess in 1080p gaming. Team Red seemed to have some swagger in its step, claiming that the forthcoming "RDNA 3.5" integrated graphics solution was up to 68% faster than NVIDIA's discrete GeForce RTX 4070 Mobile GPU (subjected to thermal limits). Naturally, first-party/internal documentation should be treated with a degree of skepticism; the PC hardware community often relies on (truly) independent sources to form opinions. A Chinese leaker has now procured a pre-release laptop that features a "Zen 5" AMD Ryzen AI MAX+ 395 processor, and by Wednesday evening the tester had presented benchmark results on the Baidu Tieba forums.

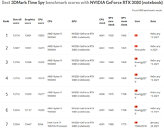

The leaker uploaded a screenshot from a 3DMark Time Spy session, without sharing any further insights in writing. On-screen diagnostics pointed to a "Radeon 8050S" GPU, with the CPU identified as an "AMD Eng Sample: 100-000001243-50_Y." Wccftech double-checked this information; they believe that the OPN ID corresponds to a "Ryzen AI MAX+ 395 with the Radeon 8060S, instead of the AMD Radeon 8050S iGPU...The difference between the two is that the Radeon 8060S packs the full 40 Compute Units while the Radeon 8050S is configured with 32 Compute Units. The CPU for each iGPU is also different and the one tested here packs 16 Zen 5 cores instead of the 12 Zen 5 cores featured on the Ryzen AI MAX 390."

According to the NDA-busting screenshot, Team Red's Ryzen AI MAX+ 395 engineering sample racked up an overall score of 9,006 in 3DMark Time Spy. Its graphics score came in at 10,106, while its CPU scored 5,571 points. The alleged Radeon 8060S iGPU landed just under the (average) graphics score of NVIDIA's GeForce RTX 4060 Mobile dGPU, which sits at 10,614. The plucky 40 CU RDNA 3.5 iGPU seems to outperform a somewhat related sibling: the Radeon RX 7600M XT dGPU (with 32 RDNA 3 CUs) scored 8,742 points. The Radeon 8060S trails the desktop Radeon RX 7600 GPU by 884 points.
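For readers who prefer percentages to raw point gaps, the comparisons above can be restated with a few lines of Python. This is only a rough sketch built on the figures quoted in this article: the desktop Radeon RX 7600 score is not stated directly and is derived here from the 884-point gap, and the names `IGPU_SCORE`, `REFERENCE_SCORES` and `relative_gap` are made up for illustration.

```python
# 3DMark Time Spy graphics scores as quoted in the article.
IGPU_SCORE = 10_106  # alleged Radeon 8060S (engineering sample)

REFERENCE_SCORES = {
    "GeForce RTX 4060 Mobile (average)": 10_614,
    "Radeon RX 7600M XT": 8_742,
    # Assumption: derived from "trails the desktop RX 7600 by 884 points".
    "Radeon RX 7600 (desktop)": IGPU_SCORE + 884,
}


def relative_gap(score: int, reference: int) -> float:
    """Return how far `score` sits above (+) or below (-) `reference`, in percent."""
    return (score - reference) / reference * 100.0


for name, reference in REFERENCE_SCORES.items():
    points = IGPU_SCORE - reference
    percent = relative_gap(IGPU_SCORE, reference)
    print(f"{name}: {points:+d} points ({percent:+.1f}%)")
```

On these numbers, the leaked result sits roughly 5% behind the RTX 4060 Mobile average, about 16% ahead of the RX 7600M XT, and around 8% behind the desktop RX 7600. A single engineering-sample run is a thin basis for comparison, so these percentages are best read as ballpark figures.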
