Sudden Drop in Cryptocurrency Prices Hurts Graphics DRAM Market in 3Q21, Says TrendForce

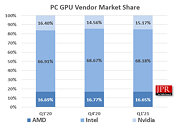

The stay-at-home economy remains robust due to the ongoing COVID-19 pandemic, keeping sales of gaming products such as game consoles, and demand for related components, at a decent level, according to TrendForce's latest investigations. However, cryptocurrency values have plummeted in the past two months because of active interventions from many governments, and the graphics DRAM market has turned bearish in 3Q21 as a result. Although graphics DRAM prices in the spot market will likely show the most severe fluctuations, contract prices of graphics DRAM are still expected to increase by 10-15% QoQ in 3Q21, since DRAM suppliers continue to prioritize server DRAM production over other product categories and the vast majority of graphics DRAM supply is still cornered by major purchasers.

Given the highly volatile nature of the graphics DRAM market, it is relatively normal for graphics DRAM prices to reverse course or fluctuate more drastically than those of other mainstream DRAM products. As such, should the cryptocurrency market remain bearish and manufacturers of smartphones or PCs reduce their upcoming production volumes in light of the ongoing pandemic and component supply issues, graphics DRAM prices are unlikely to increase further in 4Q21. Instead, TrendForce expects prices in 4Q21 to largely hold flat compared to the third quarter.
