Monday, April 19th 2021

GPU Memory Latency Tested on AMD's RDNA 2 and NVIDIA's Ampere Architecture

Graphics cards have evolved over the years to feature multi-level cache hierarchies. These caches are engineered to bridge the gap between memory and compute, a growing problem that cripples GPU performance in many applications. GPU vendors such as AMD and NVIDIA use different sizes of register files, L1, and L2 caches, depending on the architecture. For example, NVIDIA's A100 GPU carries 40 MB of L2 cache, nearly seven times as much as the previous-generation V100. That shows how much newer applications benefit from bigger caches, and cache sizes keep growing to meet that demand.

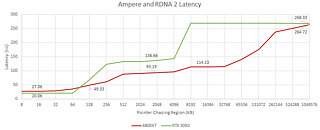

Today, we have an interesting report coming from Chips and Cheese. The website has measured the GPU memory latency of the latest generation of cards: AMD's RDNA 2 and NVIDIA's Ampere. Simple pointer-chasing tests in OpenCL produced some interesting results. RDNA 2's cache is fast and massive. Compared to Ampere, its cache latency is much lower, while VRAM latency is about the same. NVIDIA uses a two-level cache system consisting of L1 and L2, which seems to be a rather slow solution. Data travelling from Ampere's SM, which holds the L1 cache, to the L2 outside it incurs over 100 ns of latency.

AMD, on the other hand, has a three-level cache system, with L0, L1, and L2 levels in the RDNA 2 design. The latency from L0 to L2, even with L1 between them, is just 66 ns. Infinity Cache, which is essentially an L3 cache, adds only about 20 ns on top of that, which still leaves it faster than NVIDIA's L2 cache. The massive GA102 die seems to be a big problem for the L2 cache, since data has to travel a long way across it and many cycles are spent doing so. You can read more about the test here.
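For readers unfamiliar with pointer chasing, the idea can be sketched in a few lines. This is a minimal CPU-side illustration in Python, not the OpenCL kernel Chips and Cheese used; the function names (`build_chain`, `chase`, `ns_per_access`) are hypothetical, and the timings it prints are dominated by interpreter overhead rather than cache latency. What it does show is the access pattern: every load depends on the result of the previous one, so the hardware cannot overlap or prefetch them, and growing the array pushes the working set out of one cache level and into the next.

```python
import random
import time


def build_chain(n, seed=0):
    """Return a list where chain[i] is the next index to visit.

    Sattolo's algorithm yields a permutation with exactly one cycle,
    so a chase touches every slot before it repeats.
    """
    rng = random.Random(seed)
    chain = list(range(n))
    for i in range(n - 1, 0, -1):
        j = rng.randrange(i)  # j < i is what guarantees a single cycle
        chain[i], chain[j] = chain[j], chain[i]
    return chain


def chase(chain, steps, start=0):
    """Follow the chain for `steps` dependent loads; return the final index."""
    idx = start
    for _ in range(steps):
        idx = chain[idx]  # the next address is only known after this load
    return idx


def ns_per_access(n, steps=200_000):
    """Average time per dependent load for an n-element chain (illustrative only)."""
    chain = build_chain(n)
    t0 = time.perf_counter()
    chase(chain, steps)
    return (time.perf_counter() - t0) * 1e9 / steps


if __name__ == "__main__":
    # Sweep the working-set size, as a latency test would.
    for n in (1 << 10, 1 << 16, 1 << 20):
        print(f"{n:>8} elements: {ns_per_access(n):.1f} ns per access")
```

A real latency test would run the same loop in a compiled kernel with a single work-item and plot time per access against array size; the steps in that curve mark the boundaries between cache levels.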

Source:

Chips and Cheese

92 Comments on GPU Memory Latency Tested on AMD's RDNA 2 and NVIDIA's Ampere Architecture

Using cache is, in general, the lazy man's way of solving things.

BTW, Nvidia has higher bandwidth than AMD, and in the high end (GA102) it's significantly higher, but you ignore this.

I think Nvidia adding FP32 functionality to its INT units is a pretty good idea. Although I don't know how many transistors or how much power it cost, gaming performance increased by ~25%, and then there is the advantage in compute workloads. I wouldn't mind if AMD did the same thing.

For CPU yes, but for GPU what better alternative do we have? Super expensive HBM2, or expensive GDDR6X with a wider memory controller?

So you can't really say Infinity Cache was a bad move. I just wonder if a smaller one (1/2 or 1/3 smaller) wouldn't be a good enough option, because honestly IC uses up a lot of space.

Assuming the die shots in AMD's presentation are somewhat accurate, Infinity Cache is 15% of the Navi 21 die.

I never said to get rid of the whole IC, which was clearly stated in my post. What I wanted is to halve it (64 MB instead of 128 MB), with the saved space used for more CUs. BTW, I would love to see a performance penalty graph for a smaller IC, to know if that much cache is really needed or if it can be smaller.

Oxford: www.oxfordlearnersdictionaries.com/us/definition/english/smoothness#:~:text=smoothness-,noun,any rough areas or holes

I can present a new topic for debate too: "Does 1d10t live up to his username?".