Thursday, December 3rd 2020

AMD Radeon RX 6900 XT Graphics Card OpenCL Score Leaks

AMD has launched its RDNA 2-based graphics cards, codenamed Navi 21. These GPUs are set to compete with NVIDIA's Ampere offerings, with the lineup covering the Radeon RX 6800, RX 6800 XT, and RX 6900 XT graphics cards. Until now, we have had reviews of the first two, but not of the Radeon RX 6900 XT. That is because the card arrives later, on December 8th, which is just a few days away. As a reminder, the Radeon RX 6900 XT GPU is a Navi 21 XTX model with 80 Compute Units, for a total of 5120 Stream Processors. The graphics card uses a 256-bit bus that connects the GPU, with its 128 MB of Infinity Cache, to 16 GB of GDDR6 memory. When it comes to frequencies, it has a base clock of 1825 MHz and a boost speed of 2250 MHz.
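As a rough illustration of how the numbers above fit together, the sketch below derives the Stream Processor count from the Compute Unit count and estimates theoretical peak FP32 throughput at the quoted clocks. The 64-per-CU figure is simply what the article's own numbers imply (5120 / 80), and the two-operations-per-clock factor is an assumption (one fused multiply-add per Stream Processor per cycle), not something stated in the article. The function names are hypothetical.

```python
# Assumption: 64 Stream Processors per Compute Unit, as implied by the
# article's figures (5120 SPs / 80 CUs = 64).
SP_PER_CU = 64


def stream_processors(compute_units: int) -> int:
    """Total Stream Processors for a given number of Compute Units."""
    return compute_units * SP_PER_CU


def peak_fp32_tflops(sps: int, clock_mhz: float) -> float:
    """Theoretical peak FP32 throughput in TFLOPS.

    Assumption: each Stream Processor retires one fused multiply-add
    (counted as 2 floating-point operations) per clock cycle.
    """
    return sps * 2 * clock_mhz / 1e6


sps = stream_processors(80)
print(sps)
print(round(peak_fp32_tflops(sps, 1825), 3))  # at the quoted base clock
print(round(peak_fp32_tflops(sps, 2250), 3))  # at the quoted boost clock
```

These are paper figures only; a benchmark result such as a Geekbench OpenCL score depends on drivers, memory behavior, and the workload, so it will not track peak throughput directly.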

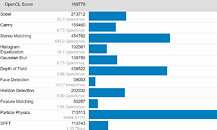

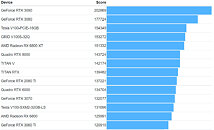

Today, in a GeekBench 5 submission, we get to see the first benchmarks of AMD's top-end Radeon RX 6900 XT graphics card. Running the OpenCL test suite, the card was paired with AMD's Ryzen 9 5950X 16C/32T CPU. The card completed the OpenCL benchmark with a score of 169779 points. That makes the card 12% faster than the RX 6800 XT, but still slower than the competing NVIDIA GeForce RTX 3080, which scores 177724 points. However, we need to wait for a few more benchmarks to appear before jumping to any conclusions, including the TechPowerUp review, which is expected to arrive once the NDA lifts. Below, you can compare the score to other GPUs in the GeekBench 5 OpenCL database.
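To put the two quoted scores in proportion, here is a small sketch of the percentage arithmetic. It uses only the scores given above; the RX 6800 XT figure it prints is back-calculated from the "12% faster" claim and is therefore an estimate, not a measured result. The helper name is hypothetical.

```python
RX_6900_XT = 169779  # GeekBench 5 OpenCL score quoted above
RTX_3080 = 177724    # GeekBench 5 OpenCL score quoted above


def percent_faster(score_a: float, score_b: float) -> float:
    """How much faster score_a is than score_b, in percent."""
    return (score_a / score_b - 1.0) * 100.0


# RTX 3080 lead over the RX 6900 XT
print(round(percent_faster(RTX_3080, RX_6900_XT), 2))

# Estimate only: the RX 6800 XT score implied by "12% faster"
implied_6800_xt = RX_6900_XT / 1.12
print(round(implied_6800_xt))
```

By this arithmetic the RTX 3080 leads by a little under 5%, a gap small enough that run-to-run variation between submissions could matter.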

Sources:

@TUM_APISAK, via VideoCardz


35 Comments on AMD Radeon RX 6900 XT Graphics Card OpenCL Score Leaks

www.phoronix.com/scan.php?page=article&item=amd-rx6800-opencl&num=6

Actual OpenCL performance:

All it's good for is generating hype, conjecture, and FUD.

8GB / 50% more VRAM

Very strong productivity performance

NVidia software suite (gamestream, recording, voice etc)

Stronger RT performance

DLSS

Granted, if any or all of those mean bupkis to you, that's fine too, but there's a bit more to it than 3% for +$500 and +100 W. In some ways at least, you're definitely getting more product.

Not to mention availability: the 6900 XT is going to be unobtainium, and Nvidia might just sell a few 3090s to people who want a GPU right away/sooner.

So for some of us that isn't a deciding factor on anything.

And the point of my post is it will all depend on the games you play and not everyone cares for RT performance.

When 50% of games have it and we are on the Nv 4000 series and RX 7000 series then maybe it will be a bigger factor for me.

I intend on buying a 6xxx series, will still probably play at 1080p for another year with eye candy turned to max, and with upgrade mods on games to make them look as good as or better than RTRT with mediocre textures and too-shiny items.

Heh, anyone who has seen the RDNA ISA can tell that AMD has put a lot of work into improving the assembly code this generation. The real issue is that RDNA remains unsupported on ROCm/HIP, so Radeon VII remains the best consumer-ish card to run in a ROCm/HIP setting. (Maybe RX 580 if you wanted something cheaper). AMD's compute line is harder to get into as long as these RDNA cards don't work with ROCm/HIP.

ROCm/OpenCL support is pleasant to hear, but I bet it still doesn't run Blender reliably (I realize ROCm is under active development and things continue to improve, but I really haven't had much luck with OpenCL)

The 6900 series shouldn't exist - it's the true "XT" card artificially inflated to rip-off pricing just because it's close enough to Nvidia's own rip-off card that AMD can get away with it.

Historically, the XT model has always been the full silicon, with the overclocked, binned versions of it called the XTX.

What we have with the 6900 is the XTX variant, the 6800XT is what would normally have been the vanilla SKU with a cut down core harvesting those defective dies, and then the current vanilla 6800 would be an OEM-only LE version because at 60CU out of a potential 80CU, it's a seriously hampered piece of silicon using some incredibly defective dies. In an RX 6800, 25% of the cores are disabled, and that's huge.
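The "25% disabled" figure in the comment above follows directly from the CU counts it quotes. A minimal sketch of that calculation is below; the 60 and 80 CU counts come from the comment, while the 72 CU count for the RX 6800 XT is not stated in this thread and is included as an assumption for comparison. The function name is hypothetical.

```python
FULL_DIE_CUS = 80  # full Navi 21 die, per the comment above


def disabled_percent(active_cus: int, full_cus: int = FULL_DIE_CUS) -> float:
    """Share of Compute Units disabled relative to the full die, in percent."""
    return (full_cus - active_cus) / full_cus * 100.0


print(disabled_percent(60))  # RX 6800, CU count from the comment
print(disabled_percent(72))  # RX 6800 XT, CU count is an assumption
print(disabled_percent(80))  # RX 6900 XT, full die
```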

Like literally.

usually it goes like this:

Zen 3 going to be faster than intel in games? nope, no way, don't kid yourself. -> Zen 3 actually ends up being faster than intel in games? heh, it's not an upgrade to intel users anyway!

RDNA 2 going to be faster than 2080 ti in games? nope, no way, don't kid yourself. -> RDNA 2 actually ends up being faster than a 2080 ti AND rivals nvidia's top cards? well, it might be faster than the 2080 ti by a nice margin, but the RT performance isn't up to par, so who cares if their raster performance is on point!

I've seen these two exact scenarios happen in threads he commented on, at this point I wouldn't be surprised if he gets paid to post such tripe... either that or it's a massive cope/buyer's remorse, don't know which.

First/second gen tessellation was slow as fuck too, who cares. RTRT effects won't become REALLY mainstream for at least 5 years, and won't be mandatory in any game for at least 10 more years/end of the new console generation... and by the time they do become mainstream, current RTX and RX cards will be outdated, and you'll be getting much better performance for less by then, so really it's a flawed argument anyway.