Thursday, December 3rd 2020

AMD Radeon RX 6900 XT Graphics Card OpenCL Score Leaks

AMD has launched its RDNA 2-based graphics cards, codenamed Navi 21. These GPUs are set to compete with NVIDIA's Ampere offerings, with the lineup covering the Radeon RX 6800, RX 6800 XT, and RX 6900 XT graphics cards. Until now, we have had reviews of the first two, but not the Radeon RX 6900 XT. That is because the card is coming at a later date, specifically on December 8th, in just a few days. As a reminder, the Radeon RX 6900 XT GPU is a Navi 21 XTX model with 80 Compute Units that give a total of 5120 Stream Processors. The graphics card uses a 256-bit bus that connects the GPU, with its 128 MB of Infinity Cache, to 16 GB of GDDR6 memory. When it comes to frequencies, it has a base clock of 1825 MHz, with a boost speed of 2250 MHz.
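For readers who want to check the spec arithmetic, here is a minimal sketch of how the Stream Processor count follows from the Compute Unit count. The figure of 64 Stream Processors per Compute Unit and the 2 FLOPs per Stream Processor per clock used for the peak throughput estimate are assumptions not stated in the article, and the constant names are purely illustrative.

```python
# Figures quoted in the article.
COMPUTE_UNITS = 80
BOOST_CLOCK_MHZ = 2250

# Assumption (not stated in the article): 64 Stream Processors per Compute Unit.
STREAM_PROCESSORS_PER_CU = 64

# Assumption: one fused multiply-add per Stream Processor per clock = 2 FLOPs.
FLOPS_PER_SP_PER_CLOCK = 2

stream_processors = COMPUTE_UNITS * STREAM_PROCESSORS_PER_CU

# Theoretical peak FP32 throughput at the boost clock, in TFLOPS.
peak_tflops = (
    stream_processors * FLOPS_PER_SP_PER_CLOCK * BOOST_CLOCK_MHZ * 1e6 / 1e12
)

print(f"Stream Processors: {stream_processors}")
print(f"Theoretical peak FP32 at boost: {peak_tflops:.2f} TFLOPS")
```

The peak figure is a theoretical ceiling at the boost clock, so it should not be read as a prediction of benchmark results.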

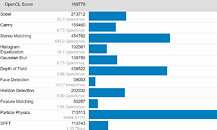

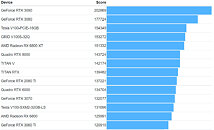

Today, in a GeekBench 5 submission, we get to see the first benchmarks of AMD's top-end Radeon RX 6900 XT graphics card. Running an OpenCL test suite, the card was paired with AMD's Ryzen 9 5950X 16C/32T CPU. The card completed the OpenCL benchmark with a score of 169779 points. That makes the card 12% faster than the RX 6800 XT, but still slower than the competing NVIDIA GeForce RTX 3080, which scores 177724 points, a lead of about 4.7%. However, we need to wait for more benchmarks to appear before jumping to any conclusions, including the TechPowerUp review, which is expected to arrive once the NDA lifts. Below, you can compare the score to other GPUs in the GeekBench 5 OpenCL database.
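To put the two quoted scores in perspective, the relative gap can be worked out with a few lines of Python. This is only a sketch: the helper name `percent_faster` is made up for illustration, and the RX 6800 XT score is not quoted in the article, so the value below is merely what the 12% claim would imply.

```python
def percent_faster(score_a: float, score_b: float) -> float:
    """Return how much higher score_a is than score_b, in percent."""
    return (score_a / score_b - 1.0) * 100.0


# Scores quoted in the article (GeekBench 5 OpenCL).
rx_6900_xt = 169779
rtx_3080 = 177724

gap = percent_faster(rtx_3080, rx_6900_xt)
print(f"RTX 3080 leads the RX 6900 XT by {gap:.1f}%")

# Assumption: the article only says "12% faster", so this back-calculates
# the RX 6800 XT score that claim would imply. It is not a measured result.
implied_rx_6800_xt = rx_6900_xt / 1.12
print(f"Implied RX 6800 XT score: {implied_rx_6800_xt:.0f}")
```

A single leaked submission is a small sample, so a gap of this size could easily shift with drivers or different test systems.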

Sources:

@TUM_APISAK, via VideoCardz

35 Comments on AMD Radeon RX 6900 XT Graphics Card OpenCL Score Leaks

Justify it all you want, but you know what I said is the truth. If you don't use it, that's fine, but there are plenty who do, more daily, and that train gains momentum thanks to consoles.

Given how popular streaming and WFH are at the moment, those are features that can't really be ignored, and AMD simply doesn't compete at all. Like, they haven't even bothered trying! On top of that, the number of things that are vastly superior when run using CUDA instead of OpenCL is starting to get ridiculous. I know CUDA is proprietary, but Nvidia have put the work in to make it a thing, and have spent years investing in it at this point. AMD have basically said "sure, we do OpenCL" and left it to rot, with developers left to fend for themselves. Is it any wonder that CUDA is now the dominant application acceleration API?

Before anyone wonders, no, I'm not an Nvidia fanboy. If anything, I hate Nvidia for its regular unethical and shady practices and have a strong preference for the underdog (because that's the better option that promotes healthy competition and benefits us as consumers the most), but I can't deny the facts: RDNA2 is completely uncompetitive in many ways. The only thing it is actually competitive in is traditional raster-based gaming, and that's a shrinking slice of the market.

AMD's architectures improving over time relative to their Nvidia counterpart when they launched may be at least in part due to their compute heavy nature, and it might be how Ampere fares over time too.

To hammer in an old point: if RT, DLSS, voice, streaming, CUDA, etc. mean bupkis to you, and I know there would be MANY out there who fit the bill, all the power to you. Why pay for features you don't want or won't use? But accept that the other side of that coin is that if you want any or all of those, AMD is either weaker or non-existent in those spaces.

As for the PC gaming market, yes - it's pretty big - but it's not all running AMD 6800-series raytracing cards or better.