NVIDIA GeForce GTX 1080 8 GB Review

NVIDIA Boost 3.0

With Pascal, NVIDIA is also introducing a new version of their Boost Technology, which increases GPU clocks when the GPU thinks it can do so, providing higher performance without user intervention.

I did some testing of Boost 3.0 on the GeForce GTX 1080 (not using Furmark). At first, the card sits idle; as soon as a game is started, clocks shoot up to 1885 MHz. As GPU temperature climbs, we immediately see Boost 3.0 reducing clocks - with Boost 2.0, clocks stayed at their maximum until a certain temperature was reached. Once the card reaches around 83°C, the clocks level out at around 1705 MHz, which is still 100 MHz higher than the base clock.

I did some additional testing using a constant game-like load (not Furmark), adjusting the fan's speed to dial in certain temperatures without changing any other parameter. The curve above is a cleaned up version of this test's data to better highlight my findings.

We can see a linear trend in which clocks go down as the temperature increases, in steps of 13 MHz, which is the clock generator's granularity. Once the card exceeds 82°C (I had to stop the fan manually to do that), it drops all the way down to its base clock, but never goes below that guaranteed minimum (until 95°C, where the thermal trip kicks in).
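To make the trend described above easier to reason about, here is a minimal Python sketch of a stepped temperature-to-clock model. The 1885 MHz peak, the 13 MHz step, and the 82°C and 95°C thresholds come from the text; the 1607 MHz base clock is not stated in this review and is assumed from the reference specification, and `RAMP_START_C` and `MHZ_PER_DEG_C` are purely hypothetical placeholders, not measured values. It is an illustration of the shape of the curve, not a reproduction of the test data.

```python
import math

MAX_BOOST_MHZ = 1885    # peak clock observed in the review
BASE_CLOCK_MHZ = 1607   # assumption: reference base clock, not stated in the text
STEP_MHZ = 13           # clock generator granularity from the review
THROTTLE_LIMIT_C = 82   # above this, the card falls back to base clock
THERMAL_TRIP_C = 95     # thermal trip point from the review

# Hypothetical model parameters, chosen only for illustration.
RAMP_START_C = 40       # assumed temperature at which clocks begin to drop
MHZ_PER_DEG_C = 4.0     # assumed slope of the linear trend


def modeled_clock(temp_c):
    """Return a modeled clock in MHz, or None at or above the thermal trip."""
    if temp_c >= THERMAL_TRIP_C:
        return None  # thermal trip; the model does not cover this region
    if temp_c > THROTTLE_LIMIT_C:
        return BASE_CLOCK_MHZ
    # Linear drop, quantized to whole 13 MHz steps below the peak clock.
    drop_mhz = max(0.0, temp_c - RAMP_START_C) * MHZ_PER_DEG_C
    steps = math.floor(drop_mhz / STEP_MHZ)
    return max(BASE_CLOCK_MHZ, MAX_BOOST_MHZ - steps * STEP_MHZ)


if __name__ == "__main__":
    for temp in (30, 50, 60, 70, 82, 85):
        print(temp, modeled_clock(temp))
```

Quantizing the drop rather than the final clock keeps every modeled value a whole number of 13 MHz steps below the peak, which mirrors the staircase described in the text.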

This means that for the first time in GPU history, lower temperatures directly translate into more performance - at any temperature point and not only in the high 80s. I just hope that this will not tempt custom board manufacturers to go for ultra-low temperatures while ignoring fan noise.

Overclocking with Boost 3.0

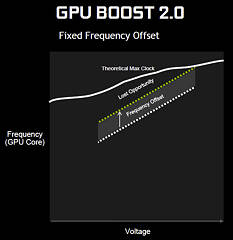

In the past, overclocking worked by directly selecting the clock speed you wanted. With Boost, this changed to defining a fixed offset that is applied to any frequency that Boost picks. With Boost 3.0, this has changed again, giving you much finer control since you can now individually select the clock offset that is applied at any given voltage level.

NVIDIA claims that there is some additional OC potential by using this method because the maximum stable frequency depends on the operating voltage (higher voltage = higher clock).
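The difference between the two offset styles can be sketched in a few lines of Python. The voltage/frequency points and the per-point headroom values below are hypothetical numbers invented for illustration (they are not the card's real curve), and the function names are my own, not part of any NVIDIA or EVGA API.

```python
# Hypothetical stock voltage/frequency points (millivolts -> MHz).
stock_curve = {800: 1600, 900: 1750, 1000: 1850, 1062: 1885}

# Hypothetical maximum stable offset at each voltage point (MHz).
headroom_mhz = {800: 150, 900: 120, 1000: 90, 1062: 60}


def apply_fixed_offset(curve, offset_mhz):
    """Traditional Boost overclock: the same offset at every voltage point."""
    return {mv: mhz + offset_mhz for mv, mhz in curve.items()}


def apply_per_point_offsets(curve, offsets_mhz):
    """Boost 3.0 style: an individual offset per voltage point (default 0)."""
    return {mv: mhz + offsets_mhz.get(mv, 0) for mv, mhz in curve.items()}


# A fixed offset has to be safe everywhere, so the weakest point limits it.
fixed = apply_fixed_offset(stock_curve, min(headroom_mhz.values()))
per_point = apply_per_point_offsets(stock_curve, headroom_mhz)

if __name__ == "__main__":
    for mv in sorted(stock_curve):
        print(mv, stock_curve[mv], fixed[mv], per_point[mv])
```

Under these made-up numbers, both approaches end up at the same clock at the point with the least headroom, while the per-point curve runs higher at every other voltage. That gap is the additional potential the per-voltage method is claimed to offer.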

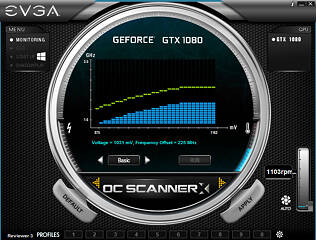

Currently, there are 80 voltage levels and you can set an individual OC frequency for each, which would make manually OCing this card extremely time intensive. That's why NVIDIA is recommending that board partners implement some sort of OC Stability scanning tool that will create a profile for you.
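As a rough idea of what such a scanning tool has to do, here is a minimal Python sketch that raises the offset at each voltage point in 13 MHz steps until a stability check fails. This is not how EVGA Precision or any other vendor tool is implemented; `scan_offsets`, the `is_stable` callback, and the limits in the demo are all hypothetical stand-ins for a real stress test.

```python
STEP_MHZ = 13  # clock granularity mentioned earlier in the review


def scan_offsets(voltage_points_mv, is_stable, max_offset_mhz=260):
    """Find the highest passing offset for each voltage point.

    is_stable(mv, offset_mhz) is a caller-supplied, hypothetical check that
    returns True if the card survives a test at that voltage and offset.
    """
    profile = {}
    for mv in voltage_points_mv:
        offset = 0
        # Step up one clock increment at a time until the next step fails.
        while (offset + STEP_MHZ <= max_offset_mhz
               and is_stable(mv, offset + STEP_MHZ)):
            offset += STEP_MHZ
        profile[mv] = offset
    return profile


# Demo with a fake stability check backed by made-up limits (MHz).
fake_limits = {800: 100, 900: 80, 1000: 40}


def fake_is_stable(mv, offset_mhz):
    return offset_mhz <= fake_limits[mv]


demo_profile = scan_offsets(sorted(fake_limits), fake_is_stable)

if __name__ == "__main__":
    print(demo_profile)
```

With 80 voltage levels and a real stress test behind each `is_stable` call, the number of test runs adds up quickly, which is why doing this by hand is so time intensive.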

We were given a pre-release version of EVGA Precision, which implements that feature, and I have to say that it does not work. This version of Precision is in its Alpha state, so later versions may work better, but right now, you are better off manually picking frequencies or sticking to the traditional overclock that uses the same increase for each voltage point.

You also have to consider that for this new method of overclocking to be effective, the card must actually run at these voltages at some point while gaming. Most overclockers I know try to get the card to run at the highest voltage level, which provides the most OC potential. If the card is always running at that voltage, there is no point in fine-tuning the lower voltages the card will never run at.
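One way to check which voltage points are worth tuning is to log the GPU voltage while gaming and look at how often each level is actually used. The Python sketch below assumes you already have such a log as a list of millivolt readings; the sample data, the function names, and the 5% threshold are hypothetical choices for illustration.

```python
from collections import Counter


def voltage_residency(samples_mv):
    """Return the share of samples spent at each voltage level."""
    counts = Counter(samples_mv)
    total = len(samples_mv)
    return {mv: n / total for mv, n in counts.items()}


def points_worth_tuning(samples_mv, min_share=0.05):
    """List voltage levels the card spends at least min_share of samples at."""
    residency = voltage_residency(samples_mv)
    return sorted(mv for mv, share in residency.items() if share >= min_share)


# Made-up log: the card sits at its top voltage almost all of the time.
sample_log = [1062] * 90 + [1050] * 8 + [900] * 2

if __name__ == "__main__":
    print(points_worth_tuning(sample_log))
```

In a log like this one, only the top one or two voltage points see real use, so individual offsets for the remaining levels would make no difference in practice.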

Overvoltage

Overvoltaging now also works slightly differently. All it does is enable additional, higher voltage points for Boost 3.0 to run at. So if you refer to the chart above, enabling overvoltage would add more points to the right of the area marked in the chart, and you can set an individual frequency adjustment for each of them.
