Friday, June 17th 2011

AMD Charts Path for Future of its GPU Architecture

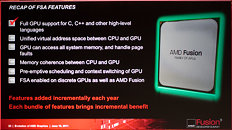

The future of AMD's GPU architecture looks more open: freed from the shackles of a fixed-function, DirectX-driven evolution model, it gives the GPU a much larger role in the PC's central processing than merely accelerating GPGPU applications. At the Fusion Developer Summit, AMD detailed its future GPU architecture, revealing that its future GPUs will have full support for C, C++, and other high-level languages. Integrated with Fusion APUs, these new number-crunching components will be called "scalar co-processors".

Scalar co-processors will combine elements of MIMD (multiple-instruction multiple-data), SIMD (single-instruction multiple-data), and SMT (simultaneous multithreading). AMD will ditch the VLIW (very long instruction word) model that has been in use for several of its past GPU architectures. While AMD's GPU development will no longer be pegged to that of DirectX, the company doesn't believe that APIs such as DirectX and OpenGL will be discarded. Game developers can continue to develop for these APIs, and C++ support is more for general-purpose compute applications. That does, however, create a window for game developers to venture out of the API-based development model (specifically DirectX). In AMD's next Fusion processors, the GPU and CPU components will make use of a truly common memory address space. Among other things, this will eliminate the "glitching" players might sometimes experience when games load textures as they go over the crest of a hill.

Source:

TechReport

114 Comments on AMD Charts Path for Future of its GPU Architecture

What needs to be done is for someone to effectively show why other options make more sense, not from a technical standpoint, but from a business standpoint.

And like mentioned, none of these technologies AMD/ATI introduced over the years really seem to make much business sense, and as such, they fail hard.

AMD's board now seems to realize this... Dirk was dumped, and Bulldozer "delayed", simply because that made the MOST business sense... they met the market demand, and rightly so, as market demand for those products is so high that they have no choice but to delay the launch of Bulldozer.

Delaying a new product, because an existing one is in high demand, makes good business sense.

Buying ATI was a good move because both AMD and NV are now obviously trying to bypass Intel's dominance by creating a new GPU compute sector. I'm not sure if that will ever benefit the common user though because of the limited types of computing that work well with GPUs.

Also, Llano and Brazos are redefining the low end in a way that Intel didn't bother to so that's interesting too.

(below in reply to someone above; I'm not sure how relevant it is, but it's true nonetheless)

The whole "do what makes the most money now and we'll deal with the consequences later" ideology is why the American economy is in the state that it is. Companies are like children: they want the candy, and lots of it, now, but then they make themselves sick because they had too much. A responsible parent regulates them, no matter how big a tantrum they throw, because they know that cleaning up the resulting mess if they let them do as they please is much worse. Just saying companies will do what makes the biggest short-term gains regardless of the long-term consequences doesn't help you or me see better games.

www.anandtech.com/show/4455/amds-graphics-core-next-preview-amd-architects-for-compute

We aren't anywhere near the chips being able to independently determine data type and scheduling like that.

hothardware.com/News/Microsoft-Demos-C-AMP-Heterogeneous-Computing-at-AFDS/

www.pcper.com/reviews/Graphics-Cards/AMD-Fusion-System-Architecture-Overview-Southern-Isle-GPUs-and-Beyond

That's what that APU common sense is about :P

Very nice find sir, I want to read it all but I might have to bookmark it. :toast:

They better release that demo to the public! :laugh:

Honestly, I definitely think AMD is going to take a leap in innovation over Nvidia these next 5 years or so. I really do think AMD's experience with CPUs is going to pay off when it comes to integrating compute performance in their GPU... well, APU. Nvidia has the lead right now, but I can see AMD loosening that grip.

I would bet money this is not hardware based at all, and requires special software/drivers to work properly.

Or if all of AMD's CPUs go this way, it means people don't have to buy a GPU straight away, which is also nice.

AMD got it right 6 years ago when they started down this road; that's why Bulldozer is modular.

Again, this is just GPGPU. Same thing we've had for ages. It is not transparent to the OS, and must specifically be coded for. Said coding is always where AMD ends up dropping the ball on this crap. I will not be excited until I see this actually being used extensively in the wild.

It does have benefits.

That's where this is headed: INT + GPU. The FPU is on borrowed time, and that's likely why they shared it.

I don't know why you even care if it uses software. All computing does... PCs are useless without software.