Friday, June 17th 2011

AMD Charts Path for Future of its GPU Architecture

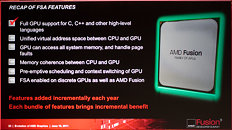

The future of AMD's GPU architecture looks more open: free of a fixed-function, DirectX-driven evolution model, and giving the GPU a much larger role in the PC's central processing than merely accelerating GPGPU applications. At the Fusion Developer Summit, AMD detailed its future GPU architecture, revealing that its GPUs will have full support for C, C++, and other high-level languages. Integrated with Fusion APUs, these new number-crunching components will be called "scalar co-processors".

Scalar co-processors will combine elements of MIMD (multiple-instruction, multiple-data), SIMD (single-instruction, multiple-data), and SMT (simultaneous multithreading). AMD will ditch the VLIW (very long instruction word) model that has been in use for several of its past GPU architectures. While AMD's GPU development will no longer be pegged to that of DirectX, the company doesn't believe that APIs such as DirectX and OpenGL will be discarded. Game developers can continue to develop for these APIs; C++ support is aimed more at general-purpose compute applications. That does, however, create a window for game developers to venture out of the API-based development model (specifically DirectX). With its next Fusion processors, the GPU and CPU components will make use of a truly common memory address space. Among other things, this eliminates the "glitching" players might sometimes experience when games load textures as they go over the crest of a hill.
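The difference a common address space makes can be sketched with a toy model. In the Python snippet below, plain byte buffers stand in for CPU and GPU memory, and the names `brighten`, `run_with_separate_memory`, and `run_with_shared_address_space` are hypothetical; none of them corresponds to an AMD, DirectX, or OpenCL API. It only illustrates, under those assumptions, why a shared address space removes the copy steps that a separate-memory design needs.

```python
# Toy model only: plain Python buffers stand in for CPU and GPU memory.
# All names here are hypothetical and not part of any real GPU API.

def brighten(pixels):
    """Hypothetical 'GPU kernel': brighten each byte in place, saturating at 255."""
    for i in range(len(pixels)):
        pixels[i] = min(pixels[i] + 16, 255)


def run_with_separate_memory(host_texture):
    """Separate-memory model: copy in, compute, copy back."""
    device_copy = bytearray(host_texture)    # upload: host -> device copy
    brighten(device_copy)                    # kernel touches only the device copy
    host_texture[:] = device_copy            # download: device -> host copy
    return 2 * len(host_texture)             # bytes moved across the "bus"


def run_with_shared_address_space(host_texture):
    """Shared-address-space model: the kernel works on the same bytes."""
    shared_view = memoryview(host_texture)   # no copy, same underlying memory
    brighten(shared_view)                    # writes land directly in host_texture
    return 0                                 # no bytes copied


texture_a = bytearray([0, 100, 250])
texture_b = bytearray([0, 100, 250])
copied_a = run_with_separate_memory(texture_a)
copied_b = run_with_shared_address_space(texture_b)
```

Both paths leave the texture in the same state, but only the separate-memory path pays for two transfers. On real hardware those transfers are one plausible source of the stalls described above, although actual drivers and games are far more involved than this sketch.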

Source: TechReport


114 Comments on AMD Charts Path for Future of its GPU Architecture

And I don't buy the nV argument either.

DirectX is largely broken because of CUDA. Should I mention the whole Batman antialiasing mumbo-jumbo?

I mean, I understand the business side, and CUDA has potentially saved nV's butt.

But its existence as a closed platform does more harm than good.

Thankfully, AMD will have their GPUs in their CPUs, which, in hardware, will provide a lot more functionality than nV can ever bring to the table.

More anti-CUDA bs with nothing to back it.

Tessellation and compute features. (ATI had a tessellation unit ready a long time ago.)

In that regard, it's impossible for me to be "anti-CUDA". It's wrapping GPGPU functions into that specific term that's the issue. ;)

As for tessellation, it was not included because it didn't make sense to include it at all, not because Nvidia was not ready. ANYTHING besides a current high-end card is brought to its knees when tessellation is enabled, so tessellation in HD4000, and worse yet HD2/3000, was a waste of time that no developer really wanted, because it was futile. If they had wanted it, no one would have stopped them from implementing it in games. They don't even use it on the Xbox, which is a closed platform where it is much easier to implement without worrying about screwing things up for non-supporting cards.

Besides, a tessellator (especially the one that ATI used before the DX11 implementation) is about the simplest thing you can throw on a circuit. It's just an interpolator, and Nvidia already toyed with the idea of interpolated meshes with the FX series. It even had some dedicated hardware for it, like a very archaic tessellator. Remember how that went? ATI also created something similar and much more advanced (yet nowhere near DX11 tessellation), and it was also scrapped by game developers because it was not viable.

What are you talking about, man? CUDA has nothing to do with DirectX. They are two very different APIs that have hardware (ISA) correlation on the GPU and are exposed via the GPU drivers. DirectX and Windows have nothing to do with that. BTW, considering what you think about it, how do you explain CUDA (GPGPU) on Linux and Apple OSes?

G80 launched November 2006.

R520, which featured CTM and compute support (and as such even supported F@H on GPU long before nVidia did), launched a year earlier, when nVidia had no such options due to a lack of "double precision", which was the integral feature that G80 brought to the market for nV. This "delay" is EXACTLY what delayed DirectCompute.

That has nothing to do with our discussion though. ATI being first means nothing for the situation now and over the past 5 years. ATI was bought and disappeared a long time ago, and in the process the project was abandoned. AMD* was simply not ready to let GPGPU interfere with their need to sell high-end CPUs (neither is Intel), and that's why they have never really pushed for GPGPU programs until now. Until Fusion, so that they can continue selling high-end CPUs AND high-end GPUs. There's nothing honorable about this Fusion thing.

* I want to be clear about a fact that apparently not many see. ATI != AMD and never has been. I never said anything about what ATI pursued, achieved or made before it was bought. It's after the acquisition that the GPGPU push was completely abandoned.

BTW, your last sentence holds no water. So DirectCompute was not included in DX10 because Nvidia released a DX10 card, one that also happens to be compute ready (and can be used even with today's GPGPU programs), 7 months earlier than AMD? Makes no sense, dude. Realistically only AMD could have halted DirectCompute, but the reality is that they didn't, because DirectCompute never existed, nor was it planned, until other APIs appeared and showed that DirectX's supremacy and Windows as a gaming platform were in danger.

I said, very simply, that nVidia's delayed implementations ("CUDA" hardware support) and the supporting software have greatly affected the transparency of "stream"-based computing in the end-user space.

Says it all.

The "software" needed is already there (there are actually very limited purposes for "GPU"-based computing), and has been for a long time. Hardware functionality is here, with APUs.

CUDA has EVERYTHING to do with DirectX, as it replaces it rather than working with it. Because the actual uses are very limited, there's no reason for a closed API such as CUDA, except to make money. And that's fine, that's business, but it does hurt the consumer in the end.

As per usual in this forum, there is a lot of CUDA/nV hate, with no real substance to back it up.

Wrong. See above. It creates a market that open standards eventually capitalize on. Again, your disdain for CUDA is still completely unfounded.

The idea of an APU for laptops and HTPCs is great, but for HPC or enthusiast use it's ridiculous, and I don't know why so many people are content with it. Why do I need 400 SPs on a CPU, which are not enough for modern games, just to run GPGPU code on them, when I can have 3000 on a GPU and use as many as I want? Also, when a new game is released and needs 800 SPs, oh well, I need a new CPU, not because I need a better CPU, but because I need the integrated GPU to have 800 SPs. RIDICULOUS. And of course I would still need the 6000 SP GPU for the game to run.

It's also false that GPGPU runs better on an APU because it's close to the CPU. It varies with the task. Many tasks run much, much better on a dedicated GPU, thanks to the high bandwidth and the numerous, fast local caches and registers.

Like, why haven't they just sold the software to Microsoft already?

Why don't they make it work on ATI GPUs too?

I mean really...uses are so few, what's the point?

And I'm not saying they copied CUDA btw (although it's very similar), but the concept, and CUDA itself, is in fact the evolution of Brook/Brook++/BrookGPU, made by the same people who made Brook at Stanford and who actually invented the stream processor concept. Nvidia didn't invent GPGPU, but many people who did work for Nvidia now, e.g. Bill Dally.

Because AMD doesn't want it, and they can't do it without permission. And they never wanted it tbh, because it would have exposed their inferiority on that front. Nvidia already offered CUDA and PhysX to AMD for free in 2007, but AMD refused.

Also, there's OpenCL, which is the same thing and something both AMD and Nvidia are supporting, so...

Uses are few, there's no point, yet AMD is promoting the same concept as the future. A hint: uses are not few. Until now you haven't seen many because:

1- Intel and AMD have been trying hard to delay GPGPU.

2- It takes time to implement things, e.g. how long did it take developers to adopt SSE? And the complexity of SSE in comparison to GPGPU is like...

3- You don't read a lot. There are hundreds of implementations in the scientific arena.

Given the state of the NV drivers for the G80 and that ATI hasn’t released their hw yet; it’s hard to see how this is really a bad plan. We really want to see final ATI hw and production quality NV and ATI drivers before we ship our DX10 support. Early tests on ATI hw show their geometry shader unit is much more performant than the GS unit on the NV hw. That could influence our feature plan.

As a home user, there's 3D browser acceleration, encoding acceleration, and game physics. Is there more than that for a HOME user? Because that's what I am, right, so that's all I care about.

Which brings me to my point...why do I care? GPGPU doesn't offer me much.

There's actually a lot of stuff ATI's architecture is better at.

Well, 'cept the 580, that's built for that stuff :laugh:

But barely outclass G80?

I've got apps where my 6870 completely smashes apart even top-end Nvidia cards.

May sound a bit fan-boyish here, but I'm just sharing my experience; take it as you will.

Geeks3D and the other tech blogs semi-frequently post comparisons of cards on new benchmarks or compute programs; you can find results there.

Been a while since I've read up though so can't point you in a specific direction, only that it's not so much a case of hardware vs hardware.

Cheers for clearing up about the compute though.

MS shifted their goals seeing this

Physics toys! (My favourite; I love n-body simulations and water simulations.)

I believe GPGPU can help with search results too if I'm not mistaken.

Lots of stuff can benefit; it's just hard to think of stuff off the top of your head.

There are many apps where AMD cards are faster. This is obvious: highly parallel applications which require very little CPU-like behavior will always run better on a highly parallelized architecture. That's not to say that Cayman has many GPGPU-oriented hardware features that G80 didn't have 5 years ago.

And regarding that advantage, AMD is stepping away from that architecture in the future, right? They are embracing scalar design. So which architecture was essentially right in 2006? VLIW or scalar? It really is that simple: if moving into the future for AMD means going scalar, there really are very few questions left unanswered. When AMD's design is almost a copy* of Kepler and Maxwell, which were announced a year ago, there are very few questions about what the correct direction is. And then it just becomes obvious who followed that path before...

Well, you said "it does not offer anything to the end user". That's not the same as saying that it does not offer anything to you. It offers a lot to me. Of course that's subjective, but even for the arguably few apps where it works, I feel it helps a lot. Kinda unrelated or not, but I usually hear how useless it is because "it only boosts video encoding by 50-100%". Lol, you need a completely new $1000 CPU plus a supporting MB to achieve the same improvement, but never mind.

It's something I'll need to research more.

Both future architectures from AMD and Nvidia are going to be scalar + vector. For AMD it's the arch in the OP. For Nvidia I'm not sure if it was Kepler or Maxwell, but in any case by 2013 both companies will be there.