Friday, June 17th 2011

AMD Charts Path for Future of its GPU Architecture

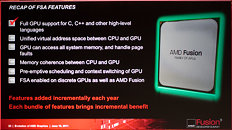

The future of AMD's GPU architecture looks more open: it breaks from the shackles of a fixed-function, DirectX-driven evolution model and expands the GPU's role in the PC's central processing well beyond merely accelerating GPGPU applications. At the Fusion Developer Summit, AMD detailed its future GPU architecture, revealing that its future GPUs will fully support C, C++, and other high-level languages. Integrated into Fusion APUs, these new number-crunching components will be called "scalar co-processors".

Scalar co-processors will combine elements of MIMD (multiple-instruction, multiple-data), SIMD (single-instruction, multiple-data), and SMT (simultaneous multithreading). AMD will ditch the VLIW (very long instruction word) model that it has used in several of its past GPU architectures. While AMD's GPU development will no longer be pegged to that of DirectX, the company doesn't believe that APIs such as DirectX and OpenGL will be discarded. Game developers can continue to develop for these APIs; C++ support is aimed more at general-purpose compute applications. It does, however, open a window for game developers to venture out of the API-based development model (specifically DirectX). With AMD's next Fusion processors, the GPU and CPU components will use a truly common memory address space. Among other things, this will eliminate the "glitching" players sometimes experience when a game loads textures as they go over the crest of a hill.
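
Why moving away from VLIW matters can be illustrated with a toy model. The sketch below is a simplified illustration, not AMD's compiler or hardware: it assumes a compiler that statically packs independent operations, in program order, into fixed-width bundles, and the names `pack_vliw` and `utilization` and the bundle width of 4 are hypothetical choices for illustration. It shows that VLIW throughput depends on how much instruction-level parallelism the code exposes: independent operations fill a bundle, while a chain of dependent operations leaves most slots empty.

```python
from typing import Dict, List


def pack_vliw(deps: Dict[str, List[str]], width: int) -> List[List[str]]:
    """Greedily pack ops, in program order, into bundles of up to `width` slots.

    An op can only join a bundle once every op it depends on was placed
    in an earlier bundle, so dependent ops never share a bundle.
    """
    placed: Dict[str, int] = {}  # op name -> index of the bundle holding it
    bundles: List[List[str]] = []
    remaining = list(deps)  # dicts preserve insertion (program) order
    while remaining:
        bundle: List[str] = []
        for op in remaining:
            if len(bundle) == width:
                break
            if all(d in placed for d in deps[op]):
                bundle.append(op)
        if not bundle:
            raise ValueError("dependency cycle or unknown dependency")
        for op in bundle:
            placed[op] = len(bundles)
            remaining.remove(op)
        bundles.append(bundle)
    return bundles


def utilization(bundles: List[List[str]], width: int) -> float:
    """Fraction of issue slots that hold real work."""
    return sum(len(b) for b in bundles) / (len(bundles) * width)


WIDTH = 4  # illustrative bundle width, not a claim about a specific GPU

independent = {"a": [], "b": [], "c": [], "d": []}
chain = {"a": [], "b": ["a"], "c": ["b"], "d": ["c"]}

for name, program in (("independent", independent), ("chain", chain)):
    bundles = pack_vliw(program, WIDTH)
    print(name, len(bundles), utilization(bundles, WIDTH))
```

In this model the four independent operations fit in one bundle at full utilization, while the dependent chain needs four bundles at 25% utilization. Roughly speaking, a scalar design sidesteps this packing problem by issuing one operation per lane per cycle and relying on other threads (the SMT part) to keep the hardware busy while a dependency resolves.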

Source: TechReport

114 Comments on AMD Charts Path for Future of its GPU Architecture

Of course, this is much bigger. Saw this coming. Our CPUs are gonna be replaced by GPUs eventually. Those who laughed at AMD's purchase of ATI... heh. Nice move, and I guess it makes more sense to ditch the ATI name if you're gonna eventually merge the tech even more. Oh well, I still won't ever call their discrete GPUs AMD.

Also, the article in TechReport says: Don't you think that, with less than six months left before the HD7000 release, it's about time to talk about specific products?

Extending it to discrete GPUs is the last step, which suggests that will happen in two generations. This is for Fusion only, at least for now, it seems. It's not for nothing that the new architecture is called FSA, Fusion System Architecture.

I think I'll just wait for Kepler and Maxwell.

Either that, or they have been forced to adopt the nVidia route due to entrenched CUDA and nVidia-paid de-optimizations for folding and other parallel computing.

www.pcper.com/reviews/Graphics-Cards/AMD-Fusion-System-Architecture-Overview-Southern-Isle-GPUs-and-Beyond

It seems this is indeed the base for the HD7000 Southern Islands architecture. This will be interesting...

From what I understand, it sounds very similar to the old SPARC HPC processors... What I'm worried about is that such a drastic design change may require an even more drastic change on the software side, which would further distance the already limited number of developers backing AMD...

Thanks Carmack...

I am excited to get both on a more common platform, though, and as much as I like my 5870, I have been wanting a green card for better GTA performance.

AMD has followed a totally passive approach because that's the cheaper approach. I'm not saying that's a bad strategy for them, but they have not fought for the GPGPU side until very recently. In fact, yes. Entrepreneurial companies constantly invest in products whose viability is still in question and whose markets are small. They create the market.

There's nothing wrong with being one of the followers; just give credit where credit is due. And IMO AMD deserves none. They have had top performers in gaming. Other than that, Nvidia has been way ahead in professional markets.

And AMD did not pioneer GPGPU. It was a group at Stanford that did it, and yes, they used X1900 cards, and yes, AMD collaborated, but that's far from pioneering it, and it was not really GPGPU: it mostly used DX and OpenGL to do math. By the time that was happening, Nvidia had already been working on GPGPU in its architecture for years, as can be seen with the launch of G80 only a few months after the introduction of X1900.

That for sure is a good thing. My comments were just about how funny it is that after so many years of AMD promoting VLIW and telling everyone and their dog that VLIW was the way to go and a much better approach, even downplaying and mocking Fermi, they are now going to do the same thing Nvidia has been doing for years.

I already predicted this change in direction a few years ago anyway. When Fusion was first promoted, I knew they would eventually move in this direction, and I also predicted that Fusion would represent a turning point in how aggressively AMD would promote GPGPU. And that's been the case. I have no love (or hate) for AMD, for this simple reason. I understand they are the underdog and need some marketing on their side too, but they always sell themselves as the good company, yet do nothing but downplay others' strategies until they are able to follow them, and they do ultimately follow them. Just a few months ago (at the HD6000 introduction), VLIW was the only way to go, almost literally a godsend, while Fermi was mocked as the wrong way to go. I knew it was all marketing BS, and now it's demonstrated, but I guess people have short memories, so it works for them. Oh well, all these fancy new features are NOW the way to go. And it's true, except there's nothing new about them...

Now to wait for IOMMU support in Windows-based OS!!

The question remains, though... why did Larrabee really fail? I mean, they said Larrabee wouldn't get a public launch but wasn't fully dead yet either... so they must have had at least some success... or this path is inevitable.

If they can pull it off, hats off to them.

I mean, they can use the IOMMU for address translation. The way I see it, the GART space right now is effectively the same, but with a limited size, so while it would be much more work for the memory controllers, I don't really see anything standing in the way other than programming.
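
The point that a GART aperture and an IOMMU both translate device addresses to physical pages, differing mainly in reach, can be sketched with a toy model. This is a simplified illustration under stated assumptions: 4 KiB pages, a single-level table, and hypothetical class names `Aperture` and `IOMMUTable`. It ignores permissions, TLBs, multi-level page tables, and every real driver or OS interface.

```python
from typing import Dict, List, Optional

PAGE_SIZE = 4096  # assumed 4 KiB pages


class Aperture:
    """GART-like window: a fixed number of slots covering one contiguous
    bus-address range, each slot pointing at a physical page frame."""

    def __init__(self, base: int, num_slots: int) -> None:
        self.base = base
        self.slots: List[Optional[int]] = [None] * num_slots

    def map(self, slot: int, frame: int) -> None:
        self.slots[slot] = frame

    def translate(self, addr: int) -> int:
        offset = addr - self.base
        if not 0 <= offset < len(self.slots) * PAGE_SIZE:
            raise ValueError("address outside the aperture")
        frame = self.slots[offset // PAGE_SIZE]
        if frame is None:
            raise ValueError("unmapped aperture slot")
        return frame * PAGE_SIZE + offset % PAGE_SIZE


class IOMMUTable:
    """IOMMU-like table: any virtual page number can be mapped, so the
    device can follow the process's own virtual address layout."""

    def __init__(self) -> None:
        self.pages: Dict[int, int] = {}  # virtual page number -> frame

    def map(self, vpn: int, frame: int) -> None:
        self.pages[vpn] = frame

    def translate(self, vaddr: int) -> int:
        vpn, offset = divmod(vaddr, PAGE_SIZE)
        if vpn not in self.pages:
            raise ValueError("page fault: unmapped virtual page")
        return self.pages[vpn] * PAGE_SIZE + offset


gart = Aperture(base=0x1000_0000, num_slots=2)
gart.map(1, frame=5)
print(gart.translate(0x1000_0000 + PAGE_SIZE + 16))  # frame 5, offset 16

iommu = IOMMUTable()
iommu.map(0x7F000, frame=5)
print(iommu.translate(0x7F000 * PAGE_SIZE + 16))  # same physical byte
```

Both lookups reach the same physical byte; the difference in this model is that the aperture only covers its fixed window, while the IOMMU-style table can map any virtual page, which is what a truly common CPU/GPU address space needs.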

Not trying to say you're wrong, just saying we don't know both sides of the story or the reasoning behind it.