Thursday, January 15th 2015

NVIDIA GeForce GTX 960 Specs Confirmed

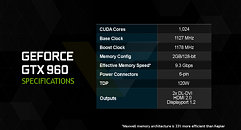

Here's what NVIDIA's upcoming performance-segment GPU, the GeForce GTX 960, could look like under the hood. Key slides from its press deck were leaked to the web, revealing its specs. To begin with, the card is based on NVIDIA's 28 nm GM206 silicon. It packs 1,024 CUDA cores based on the "Maxwell" architecture, 64 TMUs, and possibly 32 ROPs, despite its 128-bit wide GDDR5 memory interface, which holds on to 2 GB of memory. The bus may seem narrow, but NVIDIA is using a lossless texture compression tech that will effectively improve bandwidth utilization.

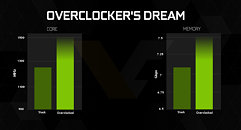

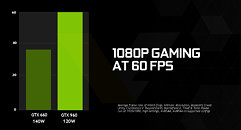

The core is clocked at 1127 MHz, with 1178 MHz GPU Boost, and the memory at 7.00 GHz (112 GB/s real bandwidth). Counting its texture compression mojo, NVIDIA is beginning to mention an "effective bandwidth" figure, expressed as an equivalent memory clock of 9.3 GHz. The card draws power from a single 6-pin PCIe power connector; the chip's TDP is rated at just 120 W. Display outputs will include two dual-link DVI, and one each of HDMI 2.0 and DisplayPort 1.2. In its slides, NVIDIA claims that the card will be an "overclocker's dream" in its segment, and will offer close to double the performance of the GTX 660. NVIDIA will launch the GTX 960 on the 22nd of January, 2015.
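For readers who want to check the 112 GB/s number, here is a rough sketch of the arithmetic: bus width in bytes times the memory data rate. The function name `memory_bandwidth_gbs` is made up for illustration, and the second result is only an assumption about what a 9.3 GHz equivalent clock would mean on the same 128-bit bus; that bandwidth figure does not appear in the leaked slides.

```python
def memory_bandwidth_gbs(bus_width_bits: int, data_rate_ghz: float) -> float:
    """Peak memory bandwidth in GB/s.

    bus_width_bits / 8 gives bytes moved per transfer; multiplying by the
    data rate (billions of transfers per second) gives GB/s.
    """
    return bus_width_bits / 8 * data_rate_ghz


# Figures quoted in the article: 128-bit bus, 7.00 GHz memory.
real = memory_bandwidth_gbs(128, 7.0)

# Assumption: treat the 9.3 GHz "effective" figure as a data rate
# on the same 128-bit bus. This value is derived, not from the slides.
effective = memory_bandwidth_gbs(128, 9.3)

print(f"real: {real:.1f} GB/s")
print(f"effective (assumed): {effective:.1f} GB/s")
```

The first value matches the 112 GB/s quoted in the slides, which suggests the "real bandwidth" figure is simply the raw bus arithmetic with no compression factored in.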

Source:

VideoCardz

119 Comments on NVIDIA GeForce GTX 960 Specs Confirmed

I would have liked to see mid-range cards with 3 GB or more. I guess that a 4 GB GTX 960 is just a question of time for those SLI people.

The GTX 960 is a mid-range card, and as I said before, having 2 GB with the new compression system makes up for it anyhow and should be enough for anyone playing 1080p games. Not sure I would expect it to contain a vast feature set and an extreme amount of RAM for the price, though I guess having at least 1 GB more VRAM would be better if you're looking to SLI. Still, I doubt it would make much of a difference.

That said, I think it will find many homes only because AMD has nothing new on the horizon, and at best a lower price on the 285/280X won't stir people. AMD seems real late in thwarting the frenzy, and that's clearly their problem.

There is also this.. PCWorld - New Intel graphics driver adds 4K video support, Chrome video acceleration and more

The only one not doing 10bit out is Nvidia GeForce.

EDIT:

*added GeForce before someone decides to try and troll like usual. :p

This card is going to be killer. If the price/performance is better than the 970 it will be insane.

As for bus width drops, that isn't necessarily the case. Third/fourth tier GPUs have been 128-bit for some time (AMD's Juniper HD 57x0/67x0, Bonaire and Cape Verde HD 77xx/R7 260), while Nvidia often compromised with 192-bit to offset slower GDDR3/GDDR5 frequencies before they got their memory controller act together.

As the low-end discrete graphics market basically evaporates, it also puts more pressure on the next tier up the product stack to remain cost effective, so die size becomes a significant factor, as does getting a good return on investment, which is why both AMD's and Nvidia's product stacks look less than easy to categorize. Nvidia's present range includes GPUs from three architectures (Fermi, Kepler, Maxwell), and AMD's from five.

Yes. The SLI finger can be clearly seen in this MSI GTX 960.

I feel bad for those who can't afford 970.

If they're on the shelves, how about some quick phone pictures for us?