Thursday, January 15th 2015

NVIDIA GeForce GTX 960 Specs Confirmed

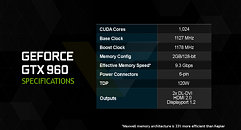

Here's what NVIDIA's upcoming performance-segment GPU, the GeForce GTX 960, could look like under the hood. Key slides from its press deck were leaked to the web, revealing its specs. To begin with, the card is based on NVIDIA's 28 nm GM206 silicon. It packs 1,024 CUDA cores based on the "Maxwell" architecture, 64 TMUs, and possibly 32 ROPs, despite its 128-bit wide GDDR5 memory interface, which holds 2 GB of memory. The bus may seem narrow, but NVIDIA is using a lossless texture compression technology that should improve effective bandwidth utilization.

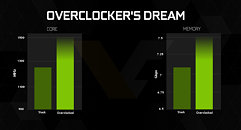

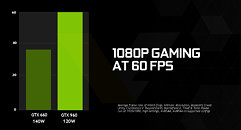

The core is clocked at 1127 MHz, with 1178 MHz GPU Boost, and the memory at 7.00 GHz (112 GB/s real bandwidth). Counting its texture compression mojo, NVIDIA is beginning to mention an "effective bandwidth" figure, quoted as an equivalent memory clock of 9.3 GHz. The card draws power from a single 6-pin PCIe power connector, and the chip's TDP is rated at just 120 W. Display outputs will include two dual-link DVI, and one each of HDMI 2.0 and DisplayPort 1.2. In its slides, NVIDIA claims that the card will be an "overclocker's dream" in its segment, and will offer close to double the performance of the GTX 660. NVIDIA will launch the GTX 960 on the 22nd of January, 2015.
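The bandwidth figures above follow from simple arithmetic: bus width in bytes multiplied by the memory data rate. The short Python sketch below reproduces the 112 GB/s figure and shows what the 9.3 GHz "effective" clock would correspond to on the same 128-bit bus. The function name is made up for illustration, and treating the effective clock as a plain multiplier on bandwidth is an assumption about how the figure is meant to be read.

```python
def memory_bandwidth_gbs(bus_width_bits: int, data_rate_ghz: float) -> float:
    """Peak bandwidth in GB/s: bytes moved per transfer times transfers per second."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * data_rate_ghz


# Figures taken from the leaked slides described in the article.
real = memory_bandwidth_gbs(128, 7.0)

# Assumption: the 9.3 GHz "effective" clock is applied to the same 128-bit bus.
effective = memory_bandwidth_gbs(128, 9.3)

print(f"real: {real:.1f} GB/s, effective: {effective:.1f} GB/s")
```

Under that reading, the compression claim amounts to roughly a third more usable bandwidth than the raw bus delivers.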

Source: VideoCardz

119 Comments on NVIDIA GeForce GTX 960 Specs Confirmed

I didn't take it myself. Grabbed it from the store's FB page. No unboxed pictures though.

PS. The same store sells Gigabyte GTX970 G1 at around 410$.

Having said that, a 2GB card should still suffice at 19x10 resolution for the image quality setting likely being used. Unless you're a masochist, I doubt many people would deliberately amp up the game I.Q. and play at sub-optimal framerates just to make a point.

* The larger framebuffers only create separation when the smaller vRAM capacity cards are deliberately overwhelmed (e.g. use of high res texture packs) - so less a gain by the large framebuffer card than a deliberate hobbling of the standard offering.

I have a GTX 750 1GB. So many were saying the low vram would hobble it, but I researched it before buying and decided this wouldn't be the case... and I've not experienced a problem yet. Possibly I will. As you said, if I turned up the settings, it might be an issue, but no one would want to play at 10-15 fps anyway.

The GTX 960 looks to be coming in at ~2x the speed of a GTX 750, so I don't expect the 2GB to necessarily be a problem, but the bandwidth is only 40% greater. And overclocking my vram helped quite a lot, so I think that will be a restriction on the 960... i.e. it could run considerably faster if it had more bandwidth. Well... on the other hand maybe not, since it is already twice as fast using 2x the shaders, TMUs, and ROPs.
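To put numbers on the "only 40% greater" point, here is a rough Python sketch comparing bandwidth per shader on the two cards. The GTX 960 figures are the ones from the article; the GTX 750 figures (512 shaders, 128-bit bus, 5.0 GHz memory) are an assumption taken from public spec listings and are not stated anywhere in this thread.

```python
# GTX 960 figures come from the article above.
GTX_960 = {"shaders": 1024, "bus_bits": 128, "data_rate_ghz": 7.0}

# Assumption: GTX 750 reference specs, not given in the article or comments.
GTX_750 = {"shaders": 512, "bus_bits": 128, "data_rate_ghz": 5.0}


def bandwidth_gbs(card: dict) -> float:
    """Peak memory bandwidth in GB/s from bus width and data rate."""
    return card["bus_bits"] / 8 * card["data_rate_ghz"]


bandwidth_ratio = bandwidth_gbs(GTX_960) / bandwidth_gbs(GTX_750)
shader_ratio = GTX_960["shaders"] / GTX_750["shaders"]

# Below 1.0 means each shader on the 960 has less raw bandwidth to draw on.
per_shader_ratio = bandwidth_ratio / shader_ratio

print(f"bandwidth: {bandwidth_ratio:.2f}x, shaders: {shader_ratio:.2f}x, "
      f"bandwidth per shader: {per_shader_ratio:.2f}x")
```

This only counts raw bandwidth. It ignores both cards' compression and cache behaviour, so it shows why the worry is reasonable rather than proving the 960 is bandwidth-starved.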

The 960 is in a different league altogether. I'm sure that a GTX 960 looks pathetic to someone with SLI 980s, but it is reported to be literally 2x the speed of a 750, and ~1.8x the speed of a 750 Ti. At a retail price of $199, the 960 will sell like crazy.

* Using a higher quality (denser) paint would allow for more coverage (delta compression).

Yup. Just a simple case of adjusting the workload (screen resolution, gaming image quality settings) to fit the available hardware.

The GTX 960 has 112GB/s, so it's not quite enough to run it at 1440p during peak gameplay. Once I've done my 1080p benchmarks, we'll see what the figures are.

These are rough and approximate figures based on some educated extrapolation with a test version of GPU-Z W1zzard sorted for me. The values could be entirely wrong. I'll explain in detail once the full article is up.

Running benchmark X, Game X, Scene X as opposed to running Bx, Cx, Sy. Game A-Z will never share similar while Game X Scene A-Z will always vary outcome.

I don't know how you're running it, but wouldn't it be

Kepler & Maxwell similar performance with same frame buffer comparison.

Am I totally misinterpreting it?

I've got a separate benchmark for non-compressed memory bandwidth usage, as well as some graphs to show how bandwidth usage correlates (or doesn't) with other usage on GPU hardware (VRAM, PCIe bus, GPU load). The safest approach is to take the highest bandwidth usage figure and go by that, to be completely sure it won't be a bottleneck. I'm also attempting to cover 4 games that represent a couple of different types, including VRAM hogs, CPU limited, GPU limited, and a generally well-rounded title. Once I've finished the full write-up, people are welcome to request benchmarks on games.

I'd ideally like a 770 as it shares identical bandwidth to the 970, the difference being the Maxwell compression technique. As it stands I'm having to assume it's 30%. In reality it varies a lot.
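For reference, the 30% assumption can be checked against the article's own numbers. The Python sketch below derives the gain implied by NVIDIA's 9.3 GHz "effective" figure and compares it with a flat 30%, using the GTX 960's 112 GB/s as the raw value. The function names are made up for illustration, and modelling compression as a single constant multiplier is a simplification, since the real gain varies with the workload.

```python
def implied_compression_gain(real_clock_ghz: float, effective_clock_ghz: float) -> float:
    """Fractional bandwidth gain implied by an 'effective' memory clock."""
    return effective_clock_ghz / real_clock_ghz - 1.0


def effective_bandwidth_gbs(raw_gbs: float, gain: float) -> float:
    """Raw bandwidth scaled by an assumed constant compression gain."""
    return raw_gbs * (1.0 + gain)


# 7.00 GHz real and 9.3 GHz effective are the figures quoted in the article.
nvidia_gain = implied_compression_gain(7.0, 9.3)

# Assumption from the comment above: a flat 30% gain.
assumed_bandwidth = effective_bandwidth_gbs(112.0, 0.30)
nvidia_bandwidth = effective_bandwidth_gbs(112.0, nvidia_gain)

print(f"gain implied by NVIDIA's figure: {nvidia_gain:.1%}")
print(f"effective bandwidth at 30%: {assumed_bandwidth:.1f} GB/s")
print(f"effective bandwidth at NVIDIA's figure: {nvidia_bandwidth:.1f} GB/s")
```

So a 30% assumption lands slightly below NVIDIA's own marketing number, which makes it a mildly conservative choice.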

I must stress at this point, though, that even NVIDIA has mentioned that the available tools for measuring such a thing are not particularly accurate. (They actually said the software application available is not 100% representative, just that the values are similar by proxy.)

And the main thing I've been wondering about is all the buzz about how new games need 4GB+ vram. If not this year then the next. I can fully believe that a top card needs that kind of vram because it is capable of processing information fast enough to be limited with less. I've not seen anything yet that convinces me that things have changed. Vram requirements seem to scale with processing speed about the same as several years ago. Are newer higher res textures something that will impact vram quantity more than processing speed? Even if so, would turning down the texture detail enough to solve the problem make the game look ugly?

Path tracing

Larger texture packs as screen resolution increases

Improved physics particle models (fog, water, smoke, interactive/destructible environments) and a host of other graphical refinements. For further reading I'd suggest Googling upcoming/future rendering techniques. The yearly SIGGRAPH is a good place to start, since it is independent.

You'll always have the opportunity to lower game image quality. How good/bad it looks will depend on the game engine, and how far the options are dialled down (few PC gamers would willingly choose static lighting for instance).

At this point we're straying pretty far from the actual topic at hand, the GTX 960.

As it stands now, both AMD's and Nvidia's gaming programs push the software to a point where the game at its maximum quality/resolution levels is around two generations of GPUs removed from the ability to play them with a single GPU. This is not by accident. Having the games outstrip the cards' ability ensures a market for SLI/Crossfire.

Nothing extreme. Larger framebuffers are as much a marketing tool as a requirement. As I said before, there is always the option of dialling down image quality and/or playing at a lower resolution...and as should be apparent, not every game is a GPU-killing resource hog and the console market dictates to a large degree how much graphics horsepower is required.

As a trend, will memory capacity get larger? Of course, unless you expect the quality of gaming images and the game environment to remain unchanged. If you'd followed up any of the links I pointed you towards, the message is pretty clear - the resources to make gaming more realistic are available, but one of the biggest stumbling blocks to implementation (aside from consolitis) is memory capacity and bandwidth. Read through any next-gen 3D article or paper and count how often the words memory/bandwidth limitation (or similar) pop up.

This is the internet. Your choice as to what to take on board and what to leave aside. People who aim broadsides at a vendor usually have some weird attachment to another brand. The view from the other side of the fence isn't much dissimilar - "Don't buy Radeon their drivers suck, their support sucks" etc. Filter the opinion and question and evaluate the fact. The fun part is separating out the comments that are opinion (or trolling/shilling) that masquerade as fact....but no one ever said the quest for knowledge was easy.