Thursday, January 15th 2015

NVIDIA GeForce GTX 960 Specs Confirmed

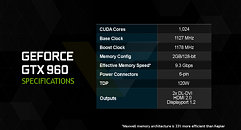

Here's what NVIDIA's upcoming performance-segment GPU, the GeForce GTX 960, could look like under the hood. Key slides from its press-deck were leaked to the web, revealing its specs. To begin with, the card is based on NVIDIA's 28 nm GM206 silicon. It packs 1,024 CUDA cores based on the "Maxwell" architecture, 64 TMUs, and possibly 32 ROPs, despite its 128-bit wide GDDR5 memory interface, which holds 2 GB of memory. The bus may seem narrow, but NVIDIA is using a lossless texture compression technology that should improve effective bandwidth utilization.

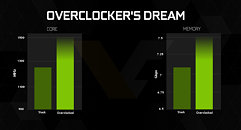

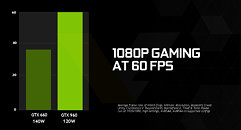

The core is clocked at 1127 MHz, with 1178 MHz GPU Boost, and the memory at 7.00 GHz (112 GB/s real bandwidth). Counting its texture compression mojo, NVIDIA is beginning to mention an "effective bandwidth" figure of 9.3 GHz (expressed as an equivalent memory clock). The card draws power from a single 6-pin PCIe power connector, and the chip's TDP is rated at just 120 W. Display outputs will include two dual-link DVI, and one each of HDMI 2.0 and DisplayPort 1.2. In its slides, NVIDIA claims that the card will be an "overclocker's dream" in its segment, and will offer close to double the performance of the GTX 660. NVIDIA will launch the GTX 960 on the 22nd of January, 2015.
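For readers who want to check the bandwidth math, here is a minimal sketch of how the 112 GB/s figure follows from the 7.00 GHz memory clock and the 128-bit bus. The function name `memory_bandwidth_gbs` is ours, and applying the same formula to the 9.3 GHz "effective" figure is our assumption about what that number implies, not something stated in the slides.

```python
def memory_bandwidth_gbs(data_rate_ghz: float, bus_width_bits: int) -> float:
    """Peak bandwidth in GB/s: transfers per second times bytes per transfer."""
    bytes_per_transfer = bus_width_bits / 8  # 128-bit bus moves 16 bytes at a time
    return data_rate_ghz * bytes_per_transfer


# Figures quoted in the article: 7.00 GHz effective GDDR5 clock, 128-bit bus.
real = memory_bandwidth_gbs(7.0, 128)

# Assumption: treat NVIDIA's 9.3 GHz "effective" figure as an equivalent clock
# on the same 128-bit bus to see what bandwidth it would correspond to.
effective = memory_bandwidth_gbs(9.3, 128)

print(f"real: {real:.1f} GB/s")            # real: 112.0 GB/s
print(f"effective: {effective:.1f} GB/s")  # effective: 148.8 GB/s
```

The second number is only an equivalence. The physical bus still moves 112 GB/s, and the higher figure reflects how much uncompressed data that would represent if the compression gains hold up.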

Source: VideoCardz

119 Comments on NVIDIA GeForce GTX 960 Specs Confirmed

This would be a good card to swap the 660 ti out with in this machine. I could then replace the 5870 in another machine with my 660 ti.

Nope, it will not. It will be better in some areas (lower power consumption and noise), but for $200 it will not be an upgrade that really makes a difference, unless your favorite games use PhysX effects. In that case it could look like a serious upgrade to you.

A bunch of indies and some 360-built ports--might as well include the Saints Row Gat out of Hell benchmarks too right?--don't really represent why having less than 2GB is unwise going forward.

They are stockpiling chips better than 1024/128 for their GTX 960 Ti

If it's stock, that would mean I could reach GTX 970 levels of performance :0

For full H265/VP9 you have to get Radeon HD 6xx0 or newer, FirePro or Quadro card

through DP 1.2+ or HDMI 1.4+.

Content->Processing->Panel

Would be great to have a testbed set up with two identical fast cards except for double the vram on one. The 770s are pretty ideal, or maybe the 960s once the 4GB version comes out.

You could let the CPU do the workload if it's fast enough and still be crippled by your 8-bit GPU.

Content: true H265/VP9 4K 10-bit 4:2:0+ -> Processing: CPU/GPU. If your GPU is processing it at 8-bit, you're already downgrading the quality before it gets to your panel.

An interesting snippet in the source article: one million GTX 970/980s sold so far. An impressive number given their pricing and the fact that they have been on sale for barely three months.