Thursday, March 19th 2015

AMD Announces FreeSync, Promises Fluid Displays More Affordable than G-SYNC

AMD today officially announced FreeSync, an open-standard technology that makes video and games look more fluid on PC monitors when frame-rates fluctuate. A logical next step after V-Sync, and analogous in function to NVIDIA's proprietary G-SYNC technology, FreeSync is a dynamic display refresh-rate technology that lets monitors sync their refresh-rate to the frame-rate the GPU is able to put out, resulting in a fluid display output.

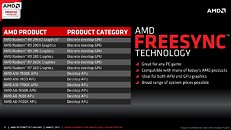

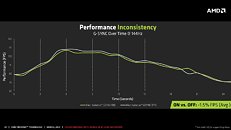

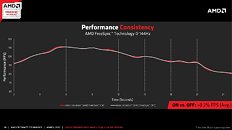

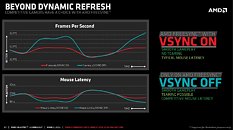

FreeSync is an evolution of V-Sync, a feature that syncs the frame-rate of the GPU to the display's refresh-rate to prevent "frame tearing" when the frame-rate is higher than the refresh-rate, but which is known to cause input-lag and stutter when the GPU is not able to keep up with the refresh-rate. FreeSync works on both ends of the cable, keeping refresh-rate and frame-rate in sync, to fight both tearing and input-lag.

What makes FreeSync different from NVIDIA G-SYNC is that it's AMD's specialization of a VESA-standard feature, which is slated to be a part of the DisplayPort feature-set, and advanced by the DisplayPort 1.2a standard, featured currently only on AMD Radeon GPUs and Intel's upcoming "Broadwell" integrated graphics. Unlike G-SYNC, FreeSync does not require any proprietary hardware, and comes with no licensing fees. When monitor manufacturers support DP 1.2a, they don't have to pay a dime to AMD. There's no special hardware involved in supporting FreeSync, either, just support for the open-standard and royalty-free DP 1.2a.

AMD announced that no fewer than 12 monitors from major display manufacturers are already announced, or being announced shortly, with support for FreeSync. A typical 27-inch display with a TN-film panel, 40-144 Hz refresh-rate range, and WQHD (2560 x 1440 pixels) resolution, such as the Acer XG270HU, should cost US $499. You also have Ultra-Wide 2K (2560 x 1080 pixels) 34-inch and 29-inch monitors, such as the LG xUM67 series, starting at $599. These displays offer refresh-rates of up to 75 Hz. Samsung is leading the 4K Ultra HD pack for FreeSync, with the UE590 series 24-inch and 28-inch, and UE850 series 24-inch, 28-inch, and 32-inch Ultra HD (3840 x 2160 pixels) monitors, offering refresh-rates of up to 60 Hz. ViewSonic is offering a full-HD (1920 x 1080 pixels) 27-incher, the VX2701mh, with refresh-rates of up to 144 Hz.

On the GPU-end, FreeSync is currently supported on the Radeon R9 290 series (R9 290, R9 290X, R9 295X2), R9 285, R7 260X, R7 260, and AMD "Kaveri" APUs. Intel's Core M processors should, in theory, support FreeSync, as their integrated graphics support DisplayPort 1.2a.

On the performance side of things, AMD claims that FreeSync has a smaller performance penalty than NVIDIA G-SYNC, and more consistent performance. The company put out a few of its own benchmarks to back that claim.

For AMD GPUs, the company will add support for FreeSync with the upcoming Catalyst 15.3 drivers.
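
To make the V-Sync versus dynamic refresh-rate distinction concrete, here is a minimal Python sketch of when frames reach the screen under each scheme. It is a toy timing model, not AMD's implementation or anything from the DisplayPort specification: the function names (`vsync_present_times`, `adaptive_present_times`, `frame_intervals`) are hypothetical, the GPU is assumed to be double-buffered (it waits for the swap before starting the next frame), and the panel's minimum refresh-rate is deliberately not modeled.

```python
import math


def vsync_present_times(render_ms, refresh_hz=60.0):
    """Fixed refresh with V-Sync: a finished frame waits for the next refresh tick.

    Assumes double buffering, so the GPU starts the next frame only after the swap.
    """
    interval = 1000.0 / refresh_hz
    shown, start = [], 0.0
    for cost in render_ms:
        done = start + cost
        # Round up to the next refresh boundary; a missed tick costs a whole interval.
        tick = math.ceil(done / interval) * interval
        shown.append(tick)
        start = tick
    return shown


def adaptive_present_times(render_ms, max_hz=144.0):
    """Dynamic refresh: the panel refreshes as soon as a frame is ready.

    The only limit modeled here is the panel's maximum refresh-rate
    (144 Hz by default, as on the 40-144 Hz display mentioned in the article).
    Behavior below the panel's minimum refresh-rate is not modeled.
    """
    min_interval = 1000.0 / max_hz
    shown, start = [], 0.0
    for cost in render_ms:
        done = start + cost
        # The panel cannot refresh faster than its maximum rate allows.
        present = max(done, start + min_interval)
        shown.append(present)
        start = present
    return shown


def frame_intervals(times):
    """Gaps between successive on-screen frames, in milliseconds."""
    gaps, prev = [], 0.0
    for t in times:
        gaps.append(t - prev)
        prev = t
    return gaps
```

With render times of 15, 25, and 15 ms on a hypothetical 50 Hz fixed-refresh panel, `frame_intervals(vsync_present_times([15, 25, 15], refresh_hz=50.0))` gives gaps of 20, 40, and 20 ms: the one slow frame misses a refresh and is held for a full extra interval, which is the stutter described above. Under the dynamic model, `frame_intervals(adaptive_present_times([15, 25, 15]))` gives 15, 25, and 15 ms, so on-screen pacing simply follows the GPU.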


96 Comments on AMD Announces FreeSync, Promises Fluid Displays More Affordable than G-SYNC

Either you get tearing or you get input lag. I'm sure it's all mitigated a little, but damn it, why can't we just have neither already?

But if you want to get technical and call freesync the driver section...and call it proprietary then you are probably correct in that AMD will not do your work for you and make you a driver... but if you are in the green team you probably think that is a good thing... so you can stop your bitching. :P

The troll unsubbed ... Darn. :)

As it is an open standard... it is up to the individual manufacturer as to how they implement it in the panel.

www.guru3d.com/articles-pages/amd-freesync-review-with-the-acer-xb270hu-monitor,3.html

Seems like I'll be waiting for 21-144 Hz.

While the spec may allow for as low as 9 Hz... it's going to take some magic on the panel side to make it work.

Good, AMD, just expand the support, please, to more than this.

A leaked driver for Asus, IIRC, allowed an NVIDIA GPU connected via eDP (laptop) to enable G-SYNC.

What nvidia doesn't tell you is that their G-sync is DOA and already supported in old h/w e.g. all laptops w/ eDP.

Also, proof of it happening plus actual critical dissection by neutral source required.

If the above requirements can't be fulfilled, then it's little more than trolling.

What we can say is the adaptive v-sync pathway looks to be better for all involved (except perhaps Nvidia).

Just say that it is a lie, in the same way like someone else said earlier today that nvidia's price of 999$ is a lie. We could have accused them of big trolling....

Either way, this tech sounds cool and seems to work so I am interested.

Maybe the 980 has the hardware built in and doesn't need the external solution.