Thursday, March 19th 2015

AMD Announces FreeSync, Promises Fluid Displays More Affordable than G-SYNC

AMD today officially announced FreeSync, an open-standard technology that makes video and games look more fluid on PC monitors, with fluctuating frame-rates. A logical next-step to V-Sync, and analogous in function to NVIDIA's proprietary G-SYNC technology, FreeSync is a dynamic display refresh-rate technology that lets monitors sync their refresh-rate to the frame-rate the GPU is able to put out, resulting in a fluid display output.

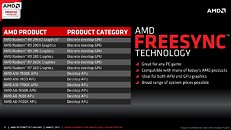

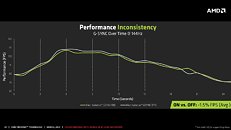

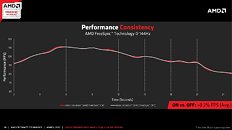

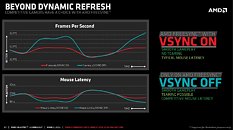

FreeSync is an evolution of V-Sync, a feature that syncs the GPU's frame-rate to the display's refresh-rate to prevent "frame tearing" when the frame-rate is higher than the refresh-rate, but which is known to cause input-lag and stutter when the GPU is not able to keep up with the refresh-rate. FreeSync works on both ends of the cable, keeping refresh-rate and frame-rate in sync, to fight both frame tearing and input-lag.

What makes FreeSync different from NVIDIA G-SYNC is that it's AMD's specialization of a VESA-standard feature, which is slated to be a part of the DisplayPort feature-set and is advanced by the DisplayPort 1.2a standard, featured currently only on AMD Radeon GPUs and Intel's upcoming "Broadwell" integrated graphics. Unlike G-SYNC, FreeSync does not require any proprietary hardware, and comes with no licensing fees. When monitor manufacturers support DP 1.2a, they don't have to pay a dime to AMD. There's no special hardware involved in supporting FreeSync, either, just support for the open-standard and royalty-free DP 1.2a.

AMD announced that no fewer than 12 monitors with support for FreeSync, from major display manufacturers, are already announced or will be announced shortly. A typical 27-inch display with TN-film panel, 40-144 Hz refresh-rate range, and WQHD (2560 x 1440 pixels) resolution, such as the Acer XG270HU, should cost US $499. There are also Ultra-Wide 2K (2560 x 1080 pixels) 34-inch and 29-inch monitors, such as the LG xUM67 series, which start at $599. These displays offer refresh-rates of up to 75 Hz. Samsung is leading the 4K Ultra HD pack for FreeSync, with the UE590 series 24-inch and 28-inch, and UE850 series 24-inch, 28-inch, and 32-inch Ultra HD (3840 x 2160 pixels) monitors, offering refresh-rates of up to 60 Hz. ViewSonic is offering a full-HD (1920 x 1080 pixels) 27-incher, the VX2701mh, with refresh-rates of up to 144 Hz.

On the GPU-end, FreeSync is currently supported on Radeon R9 290 series (R9 290, R9 290X, R9 295X2), R9 285, R7 260X, R7 260, and AMD "Kaveri" APUs. Intel's Core M processors should, in theory, support FreeSync, as their integrated graphics support DisplayPort 1.2a.

On the performance side of things, AMD claims that FreeSync has a smaller performance penalty than NVIDIA G-SYNC, and more consistent performance. The company put out a few of its own benchmarks to back that claim.

For AMD GPUs, the company will add support for FreeSync with the upcoming Catalyst 15.3 drivers.
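
As a rough illustration of the idea described above, the Python sketch below models a display that follows the GPU's frame-rate while it stays inside the panel's supported range (40-144 Hz in the Acer example) and is pinned to the nearest limit outside it. The function names and the simple clamping behaviour are assumptions made for illustration only. They are not AMD's driver logic, and what actually happens outside the range depends on the V-Sync setting.

```python
def effective_refresh_hz(frame_rate_fps, min_hz=40.0, max_hz=144.0):
    """Refresh rate a variable-refresh panel would run at for a given frame-rate.

    Hypothetical helper: inside [min_hz, max_hz] the panel follows the GPU,
    outside that window it is pinned to the nearest limit.
    """
    if frame_rate_fps <= 0:
        raise ValueError("frame_rate_fps must be positive")
    return max(min_hz, min(max_hz, frame_rate_fps))


def in_sync_window(frame_rate_fps, min_hz=40.0, max_hz=144.0):
    """True while the frame-rate is inside the panel's variable-refresh range."""
    return min_hz <= frame_rate_fps <= max_hz


if __name__ == "__main__":
    for fps in (30, 60, 90, 200):
        print(fps, effective_refresh_hz(fps), in_sync_window(fps))
```

With the default 40-144 Hz window, a 90 FPS game drives the panel at 90 Hz, while 30 FPS and 200 FPS both fall outside the window and the panel stays at 40 Hz and 144 Hz respectively.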

96 Comments on AMD Announces FreeSync, Promises Fluid Displays More Affordable than G-SYNC

Why does this have to be labeled as "More affordable than G-Sync"? Even if it's true, all it is going to do is be click bait for fanboy arguments on why AMD blows and Freesync should come in the form of a firmware update... We know Freesync is coming out (a BenQ monitor is already out), so why poke that horse again? I can already hear it: "Freesync is not free, long live green"

Anyways, super excited to try it out. Once some of the Asus monitors drop, I am going after IPS + 120hz + adaptive sync.

Gosh I so wish that means it will work on nVidia (and Intel) GPUs but I know I'll be wrong.

Also, dang those LG ultrawides look enticing

Currently, to always stay above 40 FPS at 1440p, you've got to have enough GPU horsepower to use decent settings in many new games. Otherwise it won't be worth much.

Hoped to see it in the low 30s. Anyway, with a new top-end gen of AMD cards this (hopefully) shouldn't be the case, if performance is as leaked.

You have crossfired 295X2 and a (if your specs are up to date) 1080p(144hz) capable display? Are you insane? Free-sync is for when fps drop below your refresh rate. What at 1080p with the power of 4 290X's drops below 144fps? I cap BF4 at 90fps on sli 780ti at 1440p, you should be storming past 144fps on 1080p?

If I was you, I'd wait for a good 4K Free-sync monitor at 60Hz. You'd get ultra smooth motion with your graphics cards and freesync then.

But yes, it's free from the DP 1.2a spec, as long as the hardware displaying to it is supported.

Intel Broadwell IGP supports DP 1.2a.

And yes, the cards are on cruise control with 1080p, doesn't stop me anyways :)

Am I reading that right, AMD is supporting 9 - 240 hz with freesync? Not the speculated bottom limit of 30/40?

Most seem to bottom out at 40 though. Getting the backlight to not flicker at 9 might be tricky.

I actually wouldn't be surprised to see Intel adopting this with a driver update.

It is an open standard they can use it if they want to.

Nvidia won't because G-sync would die.

G-Sync could potentially live as a premium option if it has a compelling performance improvement over FreeSync, but does it?

G-Sync = flickers

Freesync = tears/lags (V-sync off/V-Sync On) depends on which option you choose

Above monitor refresh, it looks like the Freesync option to have V-Sync on/off is a better trade-off than G-Sync always on, which adds lag.

All those % are what you could call a margin of error, as not all playthroughs are the same and small things could affect performance numbers. AMD could have picked the ones that looked best for them.

Flickering has been in G-Sync since its inception.

PC Perspective - A Look into Reported G-Sync Display Flickering

You can find a lot more by googling or looking through forums.

Without either camp budging, this may end up turning into a monitor battle as well, with monitors coming with either Freesync _or_ G-Sync only (thus locking in the consumer's choice of graphics manufacturer).

The only real hope for consumers, is if monitor makers start shipping monitors with both freesync and g-sync enabled in the same monitor.

Now, since this is a killer feature and cheaper to get than G-Sync, then it's likely to take sales away from NVIDIA. Of course, NVIDIA will counter in some way and it will be interesting to see how they do this.

Oh and btw that shiny, new and seriously overpriced Titan X is looking even more lacklustre now for not supporting it. :laugh:

Unfortunately, NVIDIA is sure to have a clause in their contract which prevents both standards being implemented. Hopefully it will be seen as anti-competitive by the competition commission, or whatever they're called today, and be unenforceable by NVIDIA.

Had a bit of a thought: since AMD has to certify all monitors as Freesync, they have pretty much locked Freesync to AMD-only GPUs. The G-Sync module could possibly get updated firmware to support it, but Freesync as it stands is AMD proprietary software. In a sense, AMD has done the same thing everyone rips on NVIDIA for; they just did it under everyone's noses.