Monday, June 22nd 2015

AMD "Fiji" Block Diagram Revealed, Runs Cool and Quiet

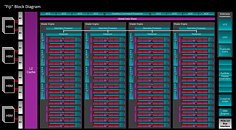

AMD's upcoming flagship GPU silicon, codenamed "Fiji," breaks ground on several new technologies, such as HBM, memory-on-package, and the interposer, a specialized substrate layer that connects the GPU with the memory. It also packs a hefty feature-set. More on the "Fiji" package and its memory implementation can be found in our older article. Its block diagram (a manufacturer-drawn graphic showing the GPU's component hierarchy) reveals a scaling up of the company's high-end GPU designs of the past few years.

"Fiji" retains the quad Shader Engine layout of "Hawaii," but packs 16 GCN Compute Units (CUs) per Shader Engine (compared to 11 CUs per engine on "Hawaii"). This works out to a stream processor count of 4,096. "Fiji" is expected to feature a newer version of the Graphics Core Next architecture than "Hawaii." The TMU count is proportionately increased, to 256 (compared to 176 on "Hawaii"). AMD doesn't appear to have increased the ROP count, which is still at 64. The most significant change, however, is its 4096-bit HBM memory interface, compared to 512-bit GDDR5 on "Hawaii."

At its given clock speeds of up to 1050 MHz core, with 500 MHz memory (512 GB/s bandwidth), on the upcoming Radeon R9 Fury X graphics card, "Fiji" offers a GPU compute throughput of 8.6 TFLOP/s, which is greater than the 7 TFLOP/s rated for NVIDIA's GeForce GTX Titan X. The reference board may draw power from a pair of 8-pin PCIe power connectors, but let that not scare you. Its typical board power is rated at 275W, just 25W more than the GTX Titan X, for 22% higher single-precision floating-point (SPFP) throughput (the two companies may use different methods to arrive at those numbers).
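The headline figures above follow from simple arithmetic, sketched below in Python. The per-CU constants (64 stream processors and 4 TMUs per GCN Compute Unit), the two floating-point operations per stream processor per clock (one fused multiply-add), and the two memory transfers per clock for HBM are assumptions not stated in the article. The function names are illustrative only.

```python
# Back-of-the-envelope check of the "Fiji" figures quoted in the article.
# Assumed (not stated in the article): 64 stream processors and 4 TMUs per
# GCN Compute Unit, 2 FLOPs per stream processor per clock (one FMA), and
# 2 memory transfers per clock (double data rate) for HBM.

SPS_PER_CU = 64    # assumption
TMUS_PER_CU = 4    # assumption


def compute_units(shader_engines: int, cus_per_engine: int) -> int:
    """Total Compute Units across all Shader Engines."""
    return shader_engines * cus_per_engine


def fp32_tflops(stream_processors: int, core_mhz: float) -> float:
    """Peak single-precision throughput in TFLOP/s (2 FLOPs per SP per clock)."""
    return stream_processors * 2 * core_mhz * 1e6 / 1e12


def memory_bandwidth_gbs(bus_bits: int, mem_mhz: float,
                         transfers_per_clock: int = 2) -> float:
    """Peak memory bandwidth in GB/s for a given bus width and memory clock."""
    return bus_bits * mem_mhz * 1e6 * transfers_per_clock / 8 / 1e9


fiji_cus = compute_units(4, 16)       # 64 CUs
hawaii_cus = compute_units(4, 11)     # 44 CUs

fiji_sps = fiji_cus * SPS_PER_CU      # stream processors
fiji_tmus = fiji_cus * TMUS_PER_CU
hawaii_tmus = hawaii_cus * TMUS_PER_CU

fiji_tflops = fp32_tflops(fiji_sps, 1050)
fiji_bandwidth = memory_bandwidth_gbs(4096, 500)

print(fiji_sps, fiji_tmus, hawaii_tmus)
print(round(fiji_tflops, 1), fiji_bandwidth)
```

Under these assumptions the results line up with the article's 4,096 stream processors, 256 and 176 TMUs, roughly 8.6 TFLOP/s, and 512 GB/s.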

AMD claims that the reference cooling solution will pay heavy dividends in terms of temperatures and noise. In a typical gaming scenario, temperatures will be around 50°C, with noise output under 32 dB. To put these into perspective, the reference NVIDIA GeForce GTX Titan X sees its load temperatures reach 84°C, and its fan puts out 45 dB, in our testing. The cooling solution is confirmed to feature a 120 mm fan made by Nidec-Servo. As with all flagship graphics cards over the past few generations, the Radeon R9 Fury X will feature dual-BIOS and ZeroCore (which powers down the GPU when the display-head is idling, and completely powers down non-primary GPUs in CrossFire setups, unless 3D loads warrant the driver to power them back up).

The Radeon R9 Fury X will be priced at US $649.99, and will be generally available within the next three weeks or so.

Source: Hispazone

73 Comments on AMD "Fiji" Block Diagram Revealed, Runs Cool and Quiet

"Cool and quiet"

...when there's a CLC waterblock attached to it.

I'm sure we'd all like to know.

~36Hrs left and the reviews will tell all....

*grabs popcorn*

i wonder if i can fit 2 AIO rad in my Air540 ... in the front in place of the Phobya G-Changer 240V2 (60mm)

pfah! i prefer 2 fury X or nano with a custom block and a single slot shield so i can reuse my loop and put my 290 and the Kryographics block + backplate to rest on my shelf as "the best card ever since 2yrs and still going strong" (or just "the best bang for bucks card")

As for the "AMD not trusting their own CPUs" claim, which sounds true, I don't think you're getting the whole point. Under a good multi-core aware graphics API, their 8 core FX CPUs do better than Intel's 4 core CPUs. DX11 is not that... and all we have now are DX11 games, so they had to use their competitor's CPUs to drive two Fury X graphics cards in their custom Fury system.

Whether you're a shill, a troll or just a misinformed person, I hope I have taught you something, son )

Side note: Cool 'n Quiet? What the hell is this, a CPU? I think the Athlon 64 in my attic wants its technology back. :)

AMD were more efficient in their 3000, 4000, 5000 and 6000 series. They were slightly less efficient with their 7000 series, but now they caught up and seem to have done quite a good job with Fiji.

I just took the 390X review because it was right up front, but it's another great example of how AMD would rather suck down the amps which is why I'm not overly optimistic that there will be anything ground breaking. Also consider the AIO water cooler. That's a sign that the GPU's TDP might be a bit on the high side which would be consistent with what AMD has been doing.

Don't give these performance/watt charts when a couple of Gameworks/Nvidia-poisoned games can swing things to their side by a great deal. Here's a chart of load power consumption. Keep in mind that on average your graphics card is under full load less than 20% of the day.

If you look at the chart, you'll see that TitanX consumes about the same as the 290X while being ~40ish% faster.

I personally wouldn't care about 40% more power under load for the same performance if the product is considerably cheaper. Again, an extra $10 - $15 for power bills in a one year period isn't much to talk about.

How much of that do you think is my 6870s? :p Actually #2 is in ULV, so I suspect any of it is mostly the primary which is the non-reference one.

You see, the problem with that is the way that Hilbert at Guru3d calculates power consumption and how W1zz actually gets it. Hilbert does some math to approximate the GPU's power usage. If you actually read his reviews, he explains it.

Then you have @W1zzard who has gone through painstaking work to figure out the actual power draw of the GPU from the PCI-E slot and PCI-E power connectors, as described in his reviews.

In all candor, who do you think has gone through more work to answer this question? Hilbert used a Kill-a-watt and did some math. W1zz used meters on the GPU itself. I think some credit goes to W1zz for going to such lengths to give such detailed information to those of us (sp. most of us) who don't have the hardware to get a real number, instead of simply doing some math with a Kill-a-watt, and I think the amount of data W1zz provides describes that in detail.

Lastly, Hilbert doesn't even describe what kind of test he uses to figure out power consumption. All he says is that "he stresses the GPU to 100%." All things considered, that's a bit more shady than W1zz giving us the full lowdown.

Now without you going ape shit on me let me ask you why would you think it needs a full coverage block on it? The memory is right beside the gpu so why a full coverage? Ahh but it is full coverage.. Just not the coverage that's meant to be, see what i just did there :rockout:

Does the water cooler just cover the gpu and not the 4 memory modules :wtf: ... I'm about 99.99999% sure the block covers the entire gpu/memory..

Tbh I can't wait 3 more weeks to get my new card... I want it now, gaming evolved, see what I did there :laugh:

Ok fun times over :peace:

Also I am more worried about the GPU + VRM temps than the memory (maybe HBM is different, but I know the VRMs on my 780 run hotter than the memory), which is where full cover comes into play.

Also temps will be even better in a real water cooling loop. My 360 and 240 rad set up laughs at that dinky 120mm.