Tuesday, March 22nd 2016

Microsoft Details Shader Model 6.0

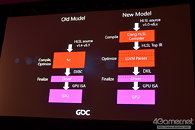

Microsoft is putting the finishing touches on Shader Model 6.0, an update to a key component of its Direct3D API. It succeeds Shader Model 5.0, which has remained largely unchanged since the introduction of DirectX 11.0 in 2009. Shader Model 6.0 provides a more optimized pathway for shader code to make its way to the metal (the GPU hardware). The outgoing Shader Model 5.0, featured in both DirectX 11 and DirectX 12, relies on FXC, an offline shader compiler, to both compile and optimize HLSL shader code, supporting HLSL v1.4 to v5.1.

Shader Model 6.0, on the other hand, hands compilation to a Clang-based HLSL compiler and optimization to multiple LLVM passes. Since Shader Model 6.0 supports HLSL code from v5.0 upwards, it should also benefit existing DirectX 11 and DirectX 12 apps, while older apps are relegated to the now-legacy Shader Model 5.0 pathway. In addition, Microsoft claims Shader Model 6.0 delivers the performance needed to cope with API-level features such as tiled resources (mega-textures). It remains to be seen how Microsoft will deploy Shader Model 6.0.

Source: PCGH


45 Comments on Microsoft Details Shader Model 6.0

We'll either be in for a generation of really efficient engines, or a generation of really complicated games (physics, AI, etc.).

On the topic at hand, additional and improved features are always welcome, good on Microsoft. :)

I want the push towards new features because I want to use them, not because I think existing hardware will support them.

www.dualshockers.com/2016/03/17/directx-12-adoption-huge-among-developers-hdr-support-coming-in-2017-as-microsoft-shares-new-info/ (first slide on third row)

Thanks,

John

It's not a hardware feature but a DX API feature. They replaced the 5.0 path with a more optimized 6.0 one, now done on the fly instead of "offline". That doesn't automatically mean it needs 6.0 pixel shader hardware. It's an improved 5.0 code path using 5.x GPU hardware.

Btw, tiled resources are present in Kepler too, so that kind of says it all about how SM 6.0 will work.

Read the following:

devtalk.nvidia.com/default/topic/732728/how-much-speed-of-64bit-integer-algebra-in-the-latest-gpus-/

It seems Shader Model 6 is being modeled on AMD GCN, and Microsoft's Xbox One uses GCN.

It wasn't until the GeForce 8000 and HD 2000 series that D3D10 and SM4 were implemented (due mostly to fundamental changes in how D3D worked in D3D10), and D3D11 and SM5 didn't show up until the GeForce GTX 400 and Radeon HD 5000 series of cards reached the market.

New tech happened to you. Deal with it and upgrade.

Granted, some of 12_1 is emulated at the moment, but what you are spouting is complete BS.

"I could go on for pages listing the types of things the spus are used for to make up for the machine's aging gpu, which may be 7 series NVidia but that's basically a tweaked 6 series NVidia for the most part. But I'll just type a few off the top of my head:"

1) Two ppu/vmx units

There are three ppu/vmx units on the 360, and just one on the PS3. So any load on the 360's remaining two ppu/vmx units must be moved to spu.

2) Vertex culling

You can look back a few years at my first post talking about this, but it's common knowledge now that you need to move as much vertex load as possible to spu; otherwise it won't keep pace with the 360.

3) Vertex texture sampling

You can texture sample in vertex shaders on 360 just fine, but it's unusably slow on PS3. Most multiplatform games simply won't use this feature on 360 to make keeping parity easier, but if a dev does make use of it then you will have no choice but to move all such functionality to spu.

4) Shader patching

Changing variables in shader programs is cake on the 360. Not so on the PS3, because they are embedded in the shader programs themselves. So you have to use the spus to patch your shader programs.

5) Branching

You never want a lot of branching in general, but when you really do need it, the 360 handles it fine; the PS3 does not. If you are stuck needing branching in shaders then you will want to move all such functionality to spu.

6) Shader inputs

You can pass plenty of inputs to shaders on 360, but do it on PS3 and your game will grind to a halt. You will want to move all such functionality to spu to minimize the amount of inputs needed on the shader programs.

7) MSAA alternatives

MSAA runs at full speed on the 360 GPU, needing just CPU tiling calculations. MSAA on the PS3 GPU is very slow. You will want to move MSAA to spu as soon as you can.

8) Post processing

The 360 has a unified architecture, meaning post-process steps can often be slotted into GPU idle time. This is not as easily doable on PS3, so you will want to move as much post-processing to spu as possible.

9) Load balancing

360 gpu load balances itself just fine since it's unified. If the load on a given frame shifts to heavy vertex or heavy pixel load then you don't care. Not so on PS3 where such load shifts will cause frame drops. You will want to shift as much load as possible to spu to minimize your peak load on the gpu.

10) Half floats

You can use full floats just fine on the 360 gpu. On the PS3 gpu they cause performance slowdowns. If you really need/have to use shaders with many full floats then you will want to move such functionality over to the spus.

11) Shader array indexing

You can index into arrays in shaders on the 360 gpu no problem. You can't do that on PS3. If you absolutely need this functionality then you will have to either rework your shaders or move it all to spu.

Etc, etc, etc...
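The load-balancing point (9) is easiest to see with numbers. Below is a toy Python model, not a description of either console: the unit counts and per-frame work figures are made-up assumptions, and it ignores scheduling overhead, bandwidth and everything else a real GPU deals with. It only compares a fixed vertex/pixel split against a unified pool of the same total size.

```python
# Toy model with hypothetical numbers: a fixed split of vertex and pixel
# units versus a unified pool of the same total size.

def frame_time_split(vertex_work, pixel_work, vertex_units, pixel_units):
    """Non-unified GPU: each stage only runs on its own units, so the
    slower stage sets the frame time."""
    return max(vertex_work / vertex_units, pixel_work / pixel_units)


def frame_time_unified(vertex_work, pixel_work, total_units):
    """Unified GPU: any unit can take either kind of work, so the total
    load is spread over the whole pool (scheduling overhead ignored)."""
    return (vertex_work + pixel_work) / total_units


if __name__ == "__main__":
    VERTEX_UNITS, PIXEL_UNITS = 8, 24          # hypothetical fixed split
    TOTAL_UNITS = VERTEX_UNITS + PIXEL_UNITS    # same unit count, unified

    # (vertex_work, pixel_work) per frame, in arbitrary work units
    frames = {"balanced": (80, 240), "vertex-heavy": (240, 80)}
    for name, (v, p) in frames.items():
        split = frame_time_split(v, p, VERTEX_UNITS, PIXEL_UNITS)
        unified = frame_time_unified(v, p, TOTAL_UNITS)
        print(f"{name}: split={split:.1f} unified={unified:.1f}")
```

With these hypothetical numbers it prints `balanced: split=10.0 unified=10.0` and `vertex-heavy: split=30.0 unified=10.0`: the split design triples its frame time when the load shifts, while the unified one doesn't notice. Offloading vertex work elsewhere (the SPUs, in the comment's argument) shrinks `vertex_work` and pulls the split case back down.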

On ATI X1000 series

Microsoft's WARP12 software CPU renderer is DirectX 12 compatible, but it has performance issues.

From msdn.microsoft.com/en-us/library/windows/desktop/bb944006(v=vs.85).aspx#Pixel_Shader_Inputs

Pixel Shader Inputs

For pixel shader versions ps_1_1 - ps_2_0, diffuse and specular colors are saturated (clamped) in the range 0 to 1 before use by the shader.

Color values input to the pixel shader are assumed to be perspective correct, but this is not guaranteed (for all hardware). Colors sampled from texture coordinates are iterated in a perspective correct manner, and are clamped to the 0 to 1 range during iteration.
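The clamping rule in the quoted MSDN text amounts to HLSL's `saturate()` applied to each color component. A minimal Python sketch, where `saturate_color` is a hypothetical helper for illustration; it models only the resulting value range, not how any driver or GPU actually performs the clamp:

```python
def saturate(x: float) -> float:
    """Clamp a value to [0, 1], like HLSL's saturate() intrinsic."""
    return min(max(x, 0.0), 1.0)


def saturate_color(rgba):
    """Hypothetical helper: clamp every component of an RGBA color, as the
    quoted text describes for diffuse/specular inputs on ps_1_1 - ps_2_0."""
    return tuple(saturate(c) for c in rgba)


print(saturate_color((1.25, 0.5, -0.1, 1.0)))  # → (1.0, 0.5, 0.0, 1.0)
```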

There's a reason NVIDIA's "The Way It's Meant to be Played" program exists, and it's not as a paperweight.

I mean, sure, Xenos most certainly looks like it's a better, more flexible piece of kit than the RSX, but again, what does it have to do with SM6?

For example, take the MSDN notes on pixel shader inputs quoted above: if GeForce 7 and competing ATi X1000 cards were the same, then "The Way It's Meant to be Played" shouldn't exist.

PS3's RSX exposes GeForce 7's hardware issues. NVIDIA's "The Way It's Meant to be Played" program for GeForce 7 would avoid or minimize some of the weaknesses that RSX exposes.

I might point out that AMD GCN 1.0 is aging better than NVIDIA Kepler.

AMD GCN 1.0 only needs Tiled Resources Tier 2 to reach DirectX 12 feature level 12_0.

There are other D3D12 capabilities exposed as cap bits that are not listed (e.g. standard swizzle, row-major texture access across adapters, cross-node sharing tier, and per-process and per-resource GPU virtual address space in bits).
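The feature-level argument boils down to comparing a device's cap bits against a requirements table. A rough Python sketch under stated assumptions: the requirements are the ones feature level 12_0 adds beyond 11_1 as I understand Microsoft's feature-level table (resource binding tier 2, tiled resources tier 2, typed UAV loads for additional formats); the dictionary keys are made-up stand-ins for the `ResourceBindingTier`, `TiledResourcesTier` and `TypedUAVLoadAdditionalFormats` fields of `D3D12_FEATURE_DATA_D3D12_OPTIONS`; and the GCN 1.0 caps are hypothetical values that simply follow the claim above.

```python
# Assumed D3D12-specific requirements for feature level 12_0 (on top of
# 11_1). Keys are illustrative names, not real API identifiers.
FL12_0_REQUIREMENTS = {
    "resource_binding_tier": 2,
    "tiled_resources_tier": 2,
    "typed_uav_load_additional_formats": 1,  # 1 = supported, 0 = not
}


def missing_for_fl12_0(caps):
    """Return, sorted, the caps where a device falls short of 12_0.
    Caps absent from the dict are treated as 0 (unsupported)."""
    return sorted(
        name for name, needed in FL12_0_REQUIREMENTS.items()
        if caps.get(name, 0) < needed
    )


# Hypothetical caps for a GCN 1.0 part, following the comment's claim
# that only Tiled Resources Tier 2 is missing.
gcn10_caps = {
    "resource_binding_tier": 3,
    "tiled_resources_tier": 1,
    "typed_uav_load_additional_formats": 1,
}
print(missing_for_fl12_0(gcn10_caps))  # → ['tiled_resources_tier']
```

A real application would read these values with `ID3D12Device::CheckFeatureSupport` rather than from a hand-written dict.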