Friday, July 8th 2016

AMD Releases PCI-Express Power Draw Fix, We Tested, Confirmed, Works

Earlier today, AMD posted a new Radeon Crimson Edition beta driver, 16.7.1, which includes two fixes for the reported PCI-Express overcurrent issue that has kept the Internet busy over the last few days.

The driver changelog mentions the following: "Radeon RX 480's power distribution has been improved for AMD reference boards, lowering the current drawn from the PCIe bus". A second item reads: "A new 'compatibility mode' UI toggle has been made available in the Global Settings menu of Radeon Settings. This option is designed to reduce total power with minimal performance impact if end users experience any further issues."

To adjust the power distribution between the PCI-Express slot and the PCI-Express 6-pin power connector, AMD uses a feature of the IR3567 voltage controller, which is used on all reference design cards. This feature lets you adjust the power phase balance by changing the controller's configuration via I2C (a method of talking to the voltage controller directly, which GPU-Z also uses, for example to monitor VRM temperature). By default, power draw is split 50/50 between the slot and the 6-pin connector; this can be adjusted per phase, with a value from 0 to 15. AMD has chosen a setting of 13 for phases 1, 2 and 3, which effectively shifts some power draw away from the slot onto the 6-pin connector. I'm unsure why they did not pick a setting of 15, which in my testing shifts even more power.

The second adjustment is an option inside Radeon Settings called "Compatibility Mode". The name is rather vague, and the tooltip doesn't reveal anything more either. Out of the box, the setting is off, and it should only be enabled by people who still run into trouble even with the adjusted power distribution from the first change, which is always on and has no performance impact. When Compatibility Mode is enabled, it slightly limits the performance of the GPU, which reduces overall power draw.

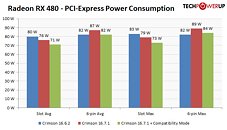

We tested these options using Metro Last Light, with the card warmed up before the test run; the charts show our results. First we measured power consumption with the previous 16.6.2 driver, then we installed 16.7.1 (keeping Compatibility Mode off), and then we turned Compatibility Mode on. As you can see, the power shift alone, while it works, is not quite sufficient to bring slot power below 75 W: we measured 76 W. As expected, the changed power distribution increases power draw from the 6-pin connector, which can easily handle slightly higher power draw, though.

With Compatibility Mode enabled, slot power drops to just 71 W, which is perfectly safe but costs performance.
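The slot readings above can be checked against the 75 W limit with simple arithmetic. This is a minimal Python sketch that uses only the two slot figures quoted in the text (the 16.6.2 reading appears only in the chart, so it is left out); the names `PCIE_SLOT_LIMIT_W`, `SLOT_POWER_W` and `slot_headroom_w` are illustrative, not from any tool.

```python
# Slot power readings quoted in the article (Metro Last Light, card warmed up).
PCIE_SLOT_LIMIT_W = 75.0  # slot power limit the article compares against

SLOT_POWER_W = {
    "16.7.1, Compatibility Mode off": 76.0,
    "16.7.1, Compatibility Mode on": 71.0,
}


def slot_headroom_w(measured_w: float, limit_w: float = PCIE_SLOT_LIMIT_W) -> float:
    """Watts left below the limit; a negative value means the slot is over it."""
    return limit_w - measured_w


if __name__ == "__main__":
    for config, watts in SLOT_POWER_W.items():
        margin = slot_headroom_w(watts)
        status = "within limit" if margin >= 0 else "over limit"
        print(f"{config}: {watts:.0f} W ({margin:+.0f} W, {status})")
```

The power shift alone ends up 1 W over the limit, while Compatibility Mode leaves about 4 W of headroom.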

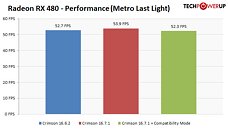

AMD has also promised improved overall performance with 16.7.1, so we looked at performance, again using Metro. The new driver adds about 2.3% performance, which is a pretty decent improvement. Once you enable Compatibility Mode, though, performance drops slightly below the original result (0.8% lower), which means Compatibility Mode costs you around 3%, in case you really want to use it. I do not recommend using Compatibility Mode. Personally, I don't think anyone with a somewhat modern computer would have run into any issues due to the increased power draw in the first place, and neither did AMD. It is good to see that AMD still chose to address the problem, and solved it fully, properly, and quickly.
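The "around 3%" figure comes from comparing Compatibility Mode with 16.7.1 rather than with 16.6.2. A small Python sketch of that arithmetic, normalizing 16.6.2 performance to 1.0 and using only the percentages quoted above (the helper name `relative_change` is illustrative):

```python
def relative_change(new: float, old: float) -> float:
    """Fractional change of `new` relative to `old` (-0.03 means 3% slower)."""
    return new / old - 1.0


# Performance normalized to the 16.6.2 driver (= 1.0), using the quoted percentages.
BASELINE_16_6_2 = 1.0
DRIVER_16_7_1 = BASELINE_16_6_2 * (1 + 0.023)  # about 2.3% faster than 16.6.2
COMPAT_MODE = BASELINE_16_6_2 * (1 - 0.008)    # 0.8% below the original result

compat_cost = relative_change(COMPAT_MODE, DRIVER_16_7_1)

if __name__ == "__main__":
    print(f"16.7.1 vs. 16.6.2: {relative_change(DRIVER_16_7_1, BASELINE_16_6_2):+.1%}")
    print(f"Compatibility Mode vs. 16.7.1: {compat_cost:+.1%}")
```

Simply adding the two percentages (2.3% + 0.8%) gives nearly the same answer here; dividing is the exact form and yields about 3.0%.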

147 Comments on AMD Releases PCI-Express Power Draw Fix, We Tested, Confirmed, Works

I don't think it actually had anything to do with that. I originally thought doing it the way they did made their binning process easier.

wccftech.com/amd-rx-490-dual-gpu/

Good old WCCFTech.....

Perhaps Vega will be called "Faster and Fury-ous"?

As for the 490's "duality" :D I don't think they have any other choice until Vega is ready, which is sad because it means prices will remain high in the Nvidia camp due to lack of competition. Neither SLI nor CF has so far been better than a single-card solution.

Geezus. You know that some motherboards offer CrossFire or SLI with up to 4 slots? And those slots DON'T even have an extra Molex connector? How do you think these boards will hold up the moment you put 4 cards in without any PCI-Express booster? That's 4x 75 W pulling from the PCI-E bus alone, over 2 wires on that poor 24-pin ATX connector.

Geezus, people, even many ancient motherboards offer an onboard Molex connector; it probably sits near the PCI-Express slot and feeds additional current.

And if it's not there, you'd buy a pci-express booster:

This way you are able to pull more current than the standard 75 W.

The 12 V power comes straight from the 24-pin ATX plug. Some motherboards even route power from the 8/4-pin CPU connector to the PCI-Express slots.

As for the dual card, it would be very foolish if they did that. Unless it's priced super aggressively (like 1.5-1.75x the price of the RX 480), it's not going to be worth it. Plus, it's still a dual card, which carries lots of problems with scaling in proper games. Personally, I don't think they would do that, because dual-GPU cards have been sliding a lot in sales lately.

www.techpowerup.com/reviews/ASUS/GTX_950/21.HTML

Btw, TDP means thermal design power; the cooler is designed to handle up to 75 W.

TDP, thermal dissipation power: as I understand it, it means the average power dissipated. But in the case we are talking about, there is no doubt the real TDP is 75 W or even more, as in the OC case, where consumption rises without touching the voltage.

Glad AMD addressed the juicy-ness of the 480.

It's almost always a mistake subbing to any GPU-related thread. :shadedshu: