Friday, February 24th 2017

AMD's X370 Only Chipset to Support NVIDIA's SLI

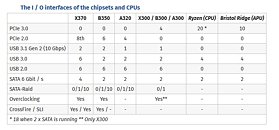

Only AMD's top-of-the-line X370 chipset will support rival NVIDIA's SLI technology. AMD's next-in-line B350 forgoes SLI support but retains CrossFire compatibility, while the low-end A320 chipset will support no multi-GPU technology at all. This may look like a deliberate move by AMD to cripple NVIDIA products on its platform, but it stands to reason: even enthusiasts tend to stay away from multi-GPU setups and their associated problems. Besides, AMD will surely avoid handing NVIDIA any more money than it has to by paying the "SLI tax" on every chipset it ships. By limiting SLI support to its highest-end chipset, AMD trims its licensing expenses while keeping SLI available to those who, in truth, are most likely to use it: power users, who will certainly spare no expense in springing for an X370-based platform.

Some details of the feature-set and compatibility differences between AMD's upcoming AM4 chipsets remain unclear, but it seems that only the X370 chipset can leverage the full 20 PCIe lanes (18 if you run two SATA connections) delivered by AMD's Ryzen CPUs. This could be seen as a way for AMD to impose a "motherboard tax" on users: by limiting the PCIe lanes available on lower-end motherboards, it nudges them to step up to X370. Apparently, the chipsets' own PCIe lanes are not a differentiating factor (X370, B350 and A320 all offer 4 native lanes); what differs is their ability, or lack thereof, to access Ryzen's own 20.

It shouldn't be long until all of this is cleared up, though.

Source:

Computerbase.de


69 Comments on AMD's X370 Only Chipset to Support NVIDIA's SLI

BUT that double asterisk, overclocking on X300, B300 and A300: THAT is serious news for the budget-minded out there. The R5 1300 is a 4-core, 8-thread Ryzen CPU at 175 USD, and all Ryzen chips are unlocked. That could be a budget user's dream: if OC on Ryzen is good, this could herald a 175 USD AMD part going toe to toe with the i7 7700K.

This specific review

The same numbers are shown in this review (same driver): mostly positive, with the occasional drop in a game. But game engines get updated, so results can swing either way depending on the update, as with GTA5, or Fallout 4 with the texture pack, for example.

375.70 appears to be mostly positive as well? link

Here, this is as far back as I can find matching games: 365, 375 and 376. From 375 to 376 there is no change; from 365 to the later drivers it's positive. So yet again, where does this FUD that drivers hurt NVIDIA performance on older cards come from?

On another note, what happened to "DX12 and Vulkan mGPU rendering will make SLI/Crossfire obsolete"?

Just saw xorbe above. ;)

Not sure how SLI will fare, but the intrinsic problem is frame pacing, and that will ALWAYS introduce stuttering no matter how well it is optimized, whether or not you have FreeSync or G-Sync.

It will never be as smooth as using a single card. In my opinion, if your game currently runs at 30 fps, adding SLI or CF to reach 50-60 fps will surely help.

But if you currently get 45-50 fps, going dual-card to reach 80-90 fps might leave you with less fluid gameplay.

It also appears most people in this thread have a terrible understanding of where SLI currently stands. Given the added frame latency of the traditional methods (AFR/AFR2), SLI's main reason for use right now is to achieve playable framerates at 4K and above. At 1440p and 1080p, SLI is entirely meaningless at the top end because single-card performance is already ludicrous. Triple SLI is no longer viable in any current game: at lower resolutions the framerate is already far too high to justify the added latency, the SLI bridge lacks the bandwidth for 4K and above, and the third card is just dead weight outside of three-monitor Surround.

With the 9xx series, two-way SLI using NVIDIA's HB/PCB bridge actually provides substantially better performance at 4K than three-way SLI in every single game I have tried or play. The best scaling I found was perhaps in FFXIV, but two cards were still the preferable option, and I have since sold my third card.

I will say, though, that two-way SLI is still extremely well supported right now. Most games either already have adequate profiles or can be fixed at launch to work, including RE7. DX12 support and functionality via SFR is still effectively nonexistent for users unless you're using SLI-VR.

Very very weak those specs.

butter?

I have seen plenty of motherboards (and owned one) that support CrossFire but not SLI. It's just how NVIDIA looks at things versus how AMD does: one wants uniformity, and the other wants total freedom.