Monday, November 6th 2017

Intel Announces "Coffee Lake" + AMD "Vega" Multi-chip Modules

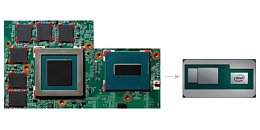

Rumors of the unthinkable silicon collaboration between Intel and AMD are true: Intel has announced its first multi-chip module (MCM), which combines a 14 nm Core "Coffee Lake-H" CPU die with a specialized 14 nm GPU die by AMD, based on the "Vega" architecture. This GPU die has its own HBM2 memory stack on a 1024-bit wide memory bus. AMD's "Vega 10" and "Fiji" MCMs use a silicon interposer to connect the GPU die to the memory stacks. Intel instead deployed its Embedded Multi-Die Interconnect Bridge (EMIB), a high-density, substrate-level interconnect. The CPU and GPU dies talk to each other over PCI-Express gen 3.0, wired through the package substrate.

This multi-chip module, with its tiny Z-height, significantly reduces the board footprint of a CPU + discrete graphics implementation compared to separate CPU and GPU packages with discrete GDDR memory chips for the GPU. It enables a new breed of ultra-portable notebooks that pack solid graphics muscle, and should allow devices as thin as 11 mm. The specifications of the CPU and dGPU dies remain under wraps. The first devices with these MCMs will launch by Q1 2018. A video presentation follows.

51 Comments on Intel Announces "Coffee Lake" + AMD "Vega" Multi-chip Modules

If both the GPU and CPU run off the same VRM, it's a big efficiency win.

Conspiracy incoming!:

AMD needs money and sales, Intel wants to weaken Nvidia to have better chances with IP, and AMD didn't want to give up IP either, so here they are?

Though with Vega already in place and IF added to the mix, I'd be a bit worried about power draw. But hey, let's see it before criticising it, right?

Me, I'm more interested in Raven Ridge and the MX150 from Nvidia.

~~might~~ probably won't matter a lot, mind you)

Which on TSMC 7 nm would be pretty small, around 100 mm², the 1070 Ti, and also 40% faster, reaching 1080 Ti performance.

It would be nice to have a separate graphics chip on a socket, with no need to be integrated and soldered together with the CPU at all, since the GPU part becomes outdated much faster.

* Intel gets graphics chips that can actually compete and finally kills their ailing "GT" graphics line. Dear god, it's about damn time.

* AMD gets something that (presumably) works better/more reliably than a silicon interposer, doesn't necessarily destroy good chips if it's faulty, and is probably cheaper too. The only question is bandwidth, although I'm pretty sure that if EMIB weren't performant enough, they wouldn't be pairing it with HBM2.

This is an interesting move with interesting timing from AMD (although I imagine it was in the works long before Zen was a success) - it could mean that Raven Ridge isn't quite as good as we're all hoping. Or, it could simply mean that Intel is paying AMD a shitload of money.

I honestly believe that the end result will be Intel making a bid to buy RTG at some point in the future. Now that truly would make for interesting times...

AMD could sell Ryzen CPUs together with Vega GPUs in its own package with HBM2. It would mean a bigger margin in the long run.

But with Intel covering 80% of the CPU market across desktop, laptop, and enterprise, this is a great chance for devs to actually start working with AMD's GCN technology and get the best out of AMD's GPUs.

HBM2 is a very good technology. It does away with the expensive cache Intel used to put on its CPUs for the IGPs, as well as motherboards with a soldered GDDR5 chip and systems with shared memory. These solutions are very limited and cost bandwidth, power, and resources compared to HBM2.

What the hell is happening? Is this what travelling to an alternate reality feels like? :)))))))))))))))))))))

Apple may be interested for the MacBook Pro, as may other OEMs in that class.

Who'd want an Intel CPU + AMD GPU in a console? That's like throwing money out the window... Much better with ARM + Nvidia or x86 + AMD, which are the only choices for a high-performance console.

tl;dr: Welcome to the future.

It's a good solution for what we need right now, but it won't scale allowing us to glue 100 chips as easily ;)