Thursday, September 27th 2018

NVIDIA's Weakness is AMD's Strength: It's Time to Regain Mid-Range Users

It won't be easy to see an AMD counterpart to the NVIDIA GeForce RTX 2080 Ti in the short term. Heck, it will be difficult to see any real competitor to the RTX 2000 Series anytime soon. But maybe AMD doesn't need to compete on that front. Not yet. The reason is sound and clear: RTX prices are NVIDIA's weakness, and that weakness, my fellow readers, could become AMD's biggest strength.

The prices NVIDIA and its partners are asking for the RTX 2080 and RTX 2080 Ti have been a hot discussion topic since we learnt about them. Most users have criticized those price points, even after NVIDIA explained the reasoning behind them. Those chips are bigger, more complex and more powerful than ever, so yes, costs have increased and that has to be taken into account.

None of that matters. It doesn't even matter what ray tracing can bring to the game, and it doesn't matter if those Tensor Cores will provide benefits beyond DLSS. I'm not even counting the fact that DLSS is a proprietary technology that will lock us (and developers, for that matter) a little bit more into another walled garden. None of it matters even if you accept that market perception is clear: whoever has the fastest graphics card is perceived as the tech/market leader.

There's certainly a chance that RTX takes off and NVIDIA sets the bar again in this segment: the reception of the new cards hasn't been overwhelming, but developers could begin to take advantage of all the benefits Turing brings. If they do, we will have a different discussion, one in which future cards such as the RTX 2070/2060 and their derivatives could bring a bright future for NVIDIA... and a dimmer one for AMD.

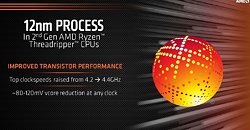

But the thing that matters now for a lot of users is pricing, and AMD could leverage that crucial element of the equation. In fact, the company could do that very soon. Some indications suggest that AMD could launch a new Polaris revision in the near future. This new silicon is allegedly being built on TSMC's 12 nm process, a move to 12 nm that AMD already made successfully with its Ryzen 2000 Series of CPUs.

AMD must have learnt a good lesson there: its CPU portfolio is easily the best in its history, and Intel's latest woes are helping, prompting forecast revisions that put AMD's global market share at 30% in Q4 2018. See? Intel's weakness is AMD's strength on this front.

2018 started with the worst news for Intel (Spectre and Meltdown) and it hasn't gone much better since: the jump to the 10 nm process never seems to come, and Intel's messages about those delays have not helped to reinforce confidence in the manufacturer. The company would be three generations further along than it is now without those big problems, and the situation for AMD would be quite different too.

Everything seems to make sense here: customers are upset with an RTX 2000 Series made for the elite, and that Polaris revision could arrive at the right time and the right place. With a smaller node AMD could gain higher yields, decrease cost, increase clock frequencies and provide that 15% performance increase some publications are pointing to. Those are a lot of "coulds", and in fact there's no reason to believe that Polaris 30 is more than just a die shrink, so we would have the same unit counts and higher clocks.

That probably won't be enough to make the hypothetical RX 680 catch up with a GTX 1070: according to our tests, the latter is 34% faster than the RX 580 on average. Still, even a modest refresh would give us a more competitive Radeon RX family that could win the price/performance battle, and that is no small feat.
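The comparison above comes down to simple arithmetic. The following is a minimal sketch in Python that uses only the two percentages quoted in the text (the rumored 15% uplift and the measured 34% lead); the function and variable names are hypothetical, the RX 680 does not exist as a product, and no real prices are assumed.

```python
# Relative average performance, normalised to the RX 580 (= 1.00).
RX_580 = 1.00
GTX_1070 = RX_580 * 1.34  # "+34%" over the RX 580, as quoted in the article
RX_680 = RX_580 * 1.15    # rumored "+15%" uplift; the RX 680 is hypothetical


def remaining_gap(faster: float, slower: float) -> float:
    """Fraction by which `faster` still leads `slower`."""
    return faster / slower - 1.0


def break_even_price_ratio(slower: float, faster: float) -> float:
    """Price of the slower card, as a fraction of the faster card's price,
    at which both deliver the same performance per dollar."""
    return slower / faster


gap = remaining_gap(GTX_1070, RX_680)
ratio = break_even_price_ratio(RX_680, GTX_1070)

print(f"GTX 1070 lead over the hypothetical RX 680: {gap:.1%}")
print(f"RX 680 wins on price/performance below {ratio:.1%} of the GTX 1070's price")
```

Under these assumptions the GTX 1070 would still lead by roughly 16.5%, and the refreshed card would come out ahead on price/performance at anything below about 86% of the GTX 1070's street price.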

The new cards would target not just existing GeForce GTX 700/900 Series users, but also those older AMD Radeon users who were expecting a nice performance upgrade without having to sell their souls. And for the undecided users, the ones who are thinking about getting a GTX 1050/Ti or a GTX 1060, AMD's offer could be quite attractive if its price/performance ratio hits NVIDIA where it hurts most.

That would put that new family of graphics cards (Radeon RX 600?) in a pretty good position to compete with GeForce GTX 1000. NVIDIA would presumably still be king in the power consumption area, but besides that, AMD could position itself in that $300-$500 range (and even below it) with a really compelling suite of products.

So yes, AMD could have a winning hand here. Your move, AMD.

110 Comments on NVIDIA's Weakness is AMD's Strength: It's Time to Regain Mid-Range Users

But Navi is not going to be a redesign of the fundamental architecture of GCN.

Wait a minute, you're arguing Vega is a good buy since you can flash the BIOS and overclock it?

You are talking about something which is very risky, and even when successful, the Pascal counterparts are still better. So what's the point? You are encouraging something which should be restricted to enthusiasts who do that as a hobby. This should never be a buying recommendation.

No matter how you flip it, Vega is an inferior choice vs. Pascal at last year's prices, and currently with Pascal on sale and Turing hitting the shelves, there is no reason to buy Vega for gaming.

Turing is currently about twice as efficient per watt as Vega; even with a node shrink, Vega will not be able to compete there. And don't forget that Nvidia have access to the same node as AMD.

Still, the first generation of the 7 nm node will not produce high volumes. Production with triple/quad patterning on DUV will be very slow and have issues with yields. A few weeks ago GloFo gave up on 7 nm, not because it didn't work, but because it wasn't cost effective. It will take a while before we see wide adoption of 7 nm; volumes and costs will probably beat the current nodes eventually, but it will take a long time.

When the first GCN cards were released, they competed well with Kepler, but Maxwell started to pull ahead of the 2nd/3rd gen GCNs of the 300-series. Polaris (4th gen GCN) is barely different from its predecessors; most of the improvement is a pure node shrink, and you can't call it good when it's on par with the previous Maxwell on an older node. The RX 480/580 was never a better choice than the GTX 1060, and the lower models are just low-end anyway.

We don't know if there will be another refresh of Polaris, but Navi is still many months away.

But I would be wary of buying AMD cards so late in the product cycle; they have been known to drop driver support for cards that are still being sold. Until their policy changes, I wouldn't buy anything but their latest generation.

The image quality of AMD cards in general in games is still superior compared to Nvidia. One reason for me to stick with AMD.

Over 10 years ago, there used to be large variations in render quality, both texture filtering and AA, between different generations. But since Fermi and HD 5000 I haven't seen any substantial differences.

There are benchmarks like 3DMark where both are known to cheat by overriding the shader programs. But as of GCN vs. Pascal, there is no general difference in image quality. You might be able to find an edge case in a special AA mode or a game where the driver "optimizes" (screws up) a shader program. But Direct3D, OpenGL and Vulkan are all designed with requirements such that variations in the rendering output should be minimal.

Turing is better at computing than Pascal, not because of Ray Tracing or Tensor cores but because it has a slightly evolved microarchitecture. Turing is close to Volta in how it works in compute.

There are enough signs that Ray Tracing will take off in some form or another (DXR, Vulkan RT extensions, OptiX/ProRender), and the technology is likely to bleed over from the professional sector, where it has already taken off. The question is when and how, and whether Turing's implementation will be relevant.

Ray tracing is just another computing task and it definitely can be put under the "general processing" umbrella.

Hence, adding an RT-specific ASIC to the chip will (by definition) improve computing potential in a very small class of problems.

However, as we learn how to use RT cores in other problems, they will become more and more useful (and the GPGPU "gain" will increase).

Ray Tracing is primarily a specific case of the collision detection problem, so it's not that difficult to imagine a problem where this ASIC could be used.

Most of the things written above apply to Tensor cores. They're good at matrix operations and they will speed up other tasks. They already do.

The 2080 Ti's RT cores speed up Ray Tracing around 6x (alleged) compared to what this chip could do on CUDA alone. That means they're a few dozen times more effective than the CUDA cores that they could be replaced with (measured by area). That's fairly significant.

If Ray Tracing catches on as a feature, the performance of hardware accelerators will be so far ahead that they will become a standard.

And even if RT remains niche (or only available in high-end GPUs), someone will soon learn how to use this hardware to boost physics or something else. That's why we shouldn't rule out RT cores in cheaper GPUs - even if they would be too slow to cover the "flagship" use case, aka RTRT in gaming.

Also, I believe you're thinking about RTRT when saying RT, right? ;-)

RT is really new so there are no real applications for it in consumer space. Same for Tensor (although this has very clear uses in HPC space). I would not count on these being very useful beyond their intended use case.

You are right, I did mean RTRT. Although strictly speaking RTX does not necessarily have to mean RTRT. A lot of applications for Quadro are not real-time yet still accelerated on the same units.