Thursday, September 27th 2018

NVIDIA's Weakness is AMD's Strength: It's Time to Regain Mid-Range Users

It won't be easy to see an AMD counterpart to NVIDIA GeForce RTX 2080 Ti in the short term. Heck, it will be difficult to see any real competitors to the RTX 2000 Series anytime soon. But maybe AMD doesn't need to compete on that front. Not yet. The reason is sound and clear: RTX prices are NVIDIA's weakness, and that weakness, my fellow readers, could become AMD's biggest strength.

The prices NVIDIA and its partners are asking for RTX 2080 and RTX 2080 Ti have been a hot discussion topic since we learnt about them. Most users have criticized those price points, even after NVIDIA explained the reasoning behind them. Those chips are bigger, more complex and more powerful than ever, so yes, costs have increased and that has to be taken into account.

None of that matters. It doesn't even matter what ray-tracing can bring to the game, and it doesn't matter if those Tensor Cores will provide benefits beyond DLSS. I'm not even counting the fact that DLSS is a proprietary technology that will lock us (and developers, for that matter) a little bit more into another walled garden. And that holds even if you accept that the market perception is clear: whoever has the fastest graphics card is perceived as the tech/market leader.

There's certainly a chance that RTX takes off and NVIDIA sets the bar again in this segment: the reception of the new cards hasn't been overwhelming, but developers could begin to take advantage of all the benefits Turing brings. If they do, we will have a different discussion, one in which future cards such as RTX 2070/2060 and their derivatives could bring a bright future for NVIDIA... and a dimmer one for AMD.

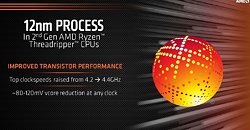

But the thing that matters now for a lot of users is pricing, and AMD could leverage that crucial element of the equation. In fact, the company could do that very soon. Some indications suggest that AMD could launch a new Polaris revision in the near future. This new silicon is allegedly being built on TSMC's 12 nm process; AMD already made a successful move to 12 nm with its Ryzen 2000 Series of CPUs.

AMD must have learnt a good lesson there: its CPU portfolio is easily the best in its history, and the latest woes at Intel are helping, prompting forecast revisions that estimate a 30% global market share for AMD in Q4 2018. See? Intel's weakness is AMD's strength on this front.

2018 started with the worst news for Intel (Spectre and Meltdown), and it hasn't gone much better since: the jump to the 10 nm process never seems to come, and Intel's messages about those delays have not helped to reinforce confidence in the manufacturer. The company would be three generations further along than it is now without those big problems, and the situation for AMD would be quite different too.

Everything seems to make sense here: customers are upset with an RTX 2000 Series aimed at the elite, and that Polaris revision could arrive at the right time and in the right place. With a smaller node AMD could gain higher yields, decrease costs, increase clock frequencies and provide the 15% performance increase some publications are pointing to. Those are a lot of "coulds", and in fact there's no reason to believe that Polaris 30 is more than just a die shrink, so we would have the same unit counts and higher clocks.

That probably won't be enough for the hypothetical RX 680 to catch up with a GTX 1070: according to our tests, the latter is 34% faster on average than the RX 580. Even so, with that refresh we would have a more competitive Radeon RX family that could win the price/performance battle, and that is no small feat.
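A quick back-of-the-envelope calculation shows how much of that gap a refresh could close. The sketch below is only illustrative: it takes the two figures quoted in the text (a rumored 15% uplift and the GTX 1070's 34% average lead over the RX 580) at face value, and the function names are hypothetical.

```python
def remaining_lead(rival_lead: float, refresh_uplift: float) -> float:
    """Lead the rival card keeps once the baseline card gains `refresh_uplift`.

    Both inputs are fractions relative to the same baseline (RX 580 = 1.0).
    """
    return (1.0 + rival_lead) / (1.0 + refresh_uplift) - 1.0


def break_even_price_ratio(rival_lead: float, refresh_uplift: float) -> float:
    """Fraction of the rival's price at which performance per dollar is equal."""
    return (1.0 + refresh_uplift) / (1.0 + rival_lead)


# Figures quoted in the article; the refresh uplift is a rumor, not a measurement.
GTX_1070_LEAD = 0.34
RUMORED_UPLIFT = 0.15

lead = remaining_lead(GTX_1070_LEAD, RUMORED_UPLIFT)
ratio = break_even_price_ratio(GTX_1070_LEAD, RUMORED_UPLIFT)
print(f"GTX 1070 would still lead by about {lead:.1%}")
print(f"Price parity point: about {ratio:.1%} of the GTX 1070's price")
```

Under those assumptions the refreshed card would still trail by roughly a sixth, so it would win on price/performance only if it sold for less than about 86% of what a GTX 1070 costs.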

The new cards would also target not just existing GTX 700/900 Series users, but also those older AMD Radeon users who were expecting a nice performance upgrade without having to sell their souls. And for the undecided users, the ones who are thinking about getting a GTX 1050/Ti or a GTX 1060, AMD's offer could be quite attractive if its price/performance ratio hits NVIDIA where it hurts most.

That would put that new family of graphics cards (Radeon RX 600?) in a pretty good position to compete with GeForce GTX 1000. NVIDIA would presumably still be king in the power consumption area, but besides that, AMD could position itself in that $300-$500 range (and even below that) with a really compelling suite of products.

So yes, AMD could have a winning hand here. Your move, AMD.

110 Comments on NVIDIA's Weakness is AMD's Strength: It's Time to Regain Mid-Range Users

nVidia will just dump a 12nm shrink of GP104 on Polaris 30, call it GTX 2030 and be done with it.

Lisa Su has no clue how to run Radeon Group, she hires Raja Koduri to head it up and then just runs him over by crippling VEGA development among other things.

I understand why he just gave Su the finger and moved on to Intel, Intel knows Raja's true worth and just gave him a blank check and said do your thing.

You just have to have well-optimized games like Forza Horizon 4 and you will see that AMD is way better than it shows in (earlier) NV-sponsored titles, where sometimes they are on par with a lower-tier NV card. Newer titles like AC: Origins or Shadow of the Tomb Raider show that it may not be this way anymore, as a Vega 56 is on par with a 1070 in NV-sponsored games.

What a comment. :D Saying the 2080 is an excellent product just shows how green you are. People DID NOT expect to get a 70-80% jump again as with Pascal, but if you get a slightly smaller performance increase than with the 700-to-900 change, where the 900 cards were 50$ cheaper, and now the new cards are 100-150$ and 500-600$ MORE EXPENSIVE, saying these are excellent products shows how biased you are. You are also forgetting that maybe around 2% of gamers buy the high-end GPU of the current gen from NV, which is marginal. Most of the cards sold are at the 1050 Ti and 1060 performance level. And you even had to mention that Intel is the king in CPU. You just forget (again) that most gamers use mid-range GPUs, with which you don't get a single fps boost from Intel compared to AMD, not even with a mid-to-high-end 1070... And I can assure you that most 1080 Ti owners do not play CS: GO at 720p with minimum settings, but use it on Ultrawide or UHD monitors, where that maximum 10% CPU difference totally disappears. I just laugh at you so loud.

Techpowerup MSI Gaming X:

GTX 1060 6GB 67 degrees with 28 dBA

RX 480 8GB 73 degrees with 31 dBA

Guru3D MSI Gaming X:

GTX 1060 6GB 65 degrees with 37 dBA

RX 480 8GB 73 degrees with 38 dBA

Given the same cooler, a GPU that consumes more power will emit more heat. This will translate into higher temperatures and/or a faster fan speed on the cooler. More emitted heat will also heat up the surrounding air.

And while most readers of TPU likely do have proper air movement in the chassis, many people do not. I have seen many, many people who have come to complain about performance issues. When I looked at the computer, they had slapped a GPU into a random machine in an mATX tower that has one fan lazily blowing air in. After about 20 minutes of gaming, the temperature inside the case is 50+C. And that gives interesting results.

In general, less heat, fewer problems.

So you say most people buy a 1060-1070 with a 10$ chassis? :D

I do not know what was up in TPU's RX480 review but 196W for RX480 sounds just wrong. Maybe a bad card.

Guru3D's figures:

Power consumption 168W vs 136W (23% more).

Ambient is probably 22C, let's say 20C. Delta temps 53 vs 45 (17% more).

1 dBA probably means a slightly slower fan speed so that is the rest of the difference.

Btw, noise is a logarithmic scale. 3 dBA louder is about 30% more perceived loudness.

www.techpowerup.com/247985/nvidia-fixes-rtx-2080-ti-rtx-2080-power-consumption-tested-better-but-not-good-enough

So, right, with your 1440p monitor and the games you play, you feel you are good holding on... I can't expect you to ante up perhaps another $500 after what, 2-1/2 years. Although there are gamers on a 280X who are leaning towards 1440p, and if a $230 card came up they might see the value in purchasing it.

So you're saying AMD sold 580s en masse, but those who bought them (miners) weren't showing up on Steam playing games... you don't say? And I'm surprised that for every, say, 100 1060s (all versions) AMD had 20 folks showing up with a 580, even though the 1060s, all together, had been on the market for almost a year before a 580 showed up, and still best it. Or does that include the 480 and the 470/570s? Because if it's just 580s, wow, how unfair; it still shows AMD was actually kicking ass, even with the phenomenal pricing that came from mining... AMD still had 1 in 5 showing up with a 580, while being cognizant that such prices drove folks to other... How exactly are you extrapolating the data?

Sound level measured in dB is logarithmic and relative (dB are used to compare, not to give absolute values).

31 dB vs 28 dB means that RX480 makes 40% more noise (measured in how you feel it) and wastes twice as much power on noise.
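For anyone who wants to check the decibel numbers being thrown around, here is a minimal sketch of the usual conversions. The power and amplitude formulas follow from the definition of the decibel; the loudness one uses the common rule of thumb that +10 dB sounds roughly twice as loud, which is only an approximation, and the function names are made up for this example.

```python
def power_ratio(delta_db: float) -> float:
    """Ratio of acoustic power for a level difference in dB."""
    return 10 ** (delta_db / 10)


def amplitude_ratio(delta_db: float) -> float:
    """Ratio of sound pressure (amplitude) for a level difference in dB."""
    return 10 ** (delta_db / 20)


def loudness_ratio(delta_db: float) -> float:
    """Perceived loudness ratio, ASSUMING the '+10 dB is twice as loud' rule."""
    return 2 ** (delta_db / 10)


delta = 31 - 28  # TechPowerUp figures quoted above: RX 480 vs GTX 1060
print(f"power:     x{power_ratio(delta):.2f}")
print(f"amplitude: x{amplitude_ratio(delta):.2f}")
print(f"loudness:  x{loudness_ratio(delta):.2f} (rule of thumb)")
```

So a 3 dB gap is about twice the acoustic power and about 41% more sound pressure, while the rule of thumb puts perceived loudness closer to 23% higher. The 30% and 40% figures quoted in this thread depend on which of these you mean.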

Temperature is even worse, because you should be thinking about the distance to ambient level. And when you think about actual impact on your comfort, you should be thinking about heat...

That's why review sites that do this properly report thermal rise, not temperature (and often give very extensive information on how the test setup looks).

The simplest explanation I can give is this: 73°C vs 65°C most likely means something like 50°C vs 42°C of temperature rise. And since the coolers are more or less the same (they move similar quantities of air) it means the RX480 generates A LOT more heat.
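The thermal-rise argument is easy to put into numbers. This is a rough sketch only: the GPU temperatures and power figures are the Guru3D ones quoted in this thread, the ambient temperatures are guesses (nobody here reported the actual test conditions), and the helper names are hypothetical.

```python
def thermal_rise(gpu_temp_c: float, ambient_c: float) -> float:
    """Temperature rise over ambient, in degrees Celsius."""
    return gpu_temp_c - ambient_c


def extra_rise(hot_c: float, cool_c: float, ambient_c: float) -> float:
    """Fractional extra rise of the hotter card relative to the cooler one."""
    return thermal_rise(hot_c, ambient_c) / thermal_rise(cool_c, ambient_c) - 1.0


RX_480_C, GTX_1060_C = 73.0, 65.0   # Guru3D temperatures quoted above
RX_480_W, GTX_1060_W = 168.0, 136.0  # Guru3D power figures quoted above

# Ambient is an ASSUMPTION; try the two values implied by the comments.
for ambient in (20.0, 23.0):
    extra = extra_rise(RX_480_C, GTX_1060_C, ambient)
    print(f"ambient {ambient:.0f}C: rise {thermal_rise(RX_480_C, ambient):.0f} "
          f"vs {thermal_rise(GTX_1060_C, ambient):.0f} ({extra:.1%} more)")

print(f"power: {RX_480_W / GTX_1060_W - 1.0:.1%} more")
```

Whichever ambient you pick, the rise over ambient differs by a bit under 20%, against about 23.5% more power, which is why comparing raw temperatures understates the gap.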

There are certainly professional workloads where even more memory bandwidth could be useful, which is what Vega 20 is targeting.

I seriously doubt there will be any major changes in Vega 20; it's mostly a node shrink with full FP64 support and potentially some other hardware for professional use. AMD would need a new architecture to fix GCN. Vega 20 won't do that, and Navi will probably just be a number of tweaks.

The point with AMD is that it creates cards primarily for the Pro market and scales them down for the consumer (gaming) market. Nvidia does the very same. You can't flash GeForces into Quadros these days without performance being crippled compared to a Pro card. And due to this Vega is behind. It took a few driver revisions to get it on par with the 1080, and not the 1080 Ti.

Vega 56 would be better value for your money, especially with flashing to the 64 BIOS, overclocking and undervolting. These seem to give very good results, as AMD was pretty much rushing those GPUs out without any proper testing of power consumption. The Vega arch on this process is already maxed out. Anything above 1650 MHz with a full load applied is heading towards 350 to 400 W territory. That's almost twice as much as a 1080, and probably for not even a third more performance.

The refresh on a smaller node is good: it allows AMD to lower power consumption, push for higher clocks and hopefully produce cheaper chips. The smaller you make them, the more fit on a silicon wafer. RTX is so damn expensive because those are frankly big dies, and big dies take up a lot of space on a wafer.
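To give a feel for the wafer argument, here is a rough sketch using a common first-order dies-per-wafer approximation. The die areas are hypothetical round numbers picked only to contrast a mid-size die with a very large one, the 300 mm wafer is an assumption, and yield is ignored entirely.

```python
import math


def dies_per_wafer(die_area_mm2: float, wafer_diameter_mm: float = 300.0) -> int:
    """Estimate gross dies per wafer with a first-order approximation.

    The first term is wafer area over die area; the second subtracts an
    allowance for partial dies lost along the wafer edge. Yield is ignored.
    """
    radius = wafer_diameter_mm / 2.0
    gross = math.pi * radius ** 2 / die_area_mm2
    edge_loss = math.pi * wafer_diameter_mm / math.sqrt(2.0 * die_area_mm2)
    return max(0, math.floor(gross - edge_loss))


# HYPOTHETICAL die areas, not taken from any spec sheet in this thread.
MID_SIZE_DIE_MM2 = 230.0
LARGE_DIE_MM2 = 750.0

mid = dies_per_wafer(MID_SIZE_DIE_MM2)
large = dies_per_wafer(LARGE_DIE_MM2)
print(f"mid-size die: ~{mid} candidates per wafer")
print(f"large die:    ~{large} candidates per wafer")
```

The big die loses more than its area alone would suggest, because more of the wafer edge goes to waste, and real yields would widen the gap further since a larger die is more likely to contain a defect.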

Polaris was a good mid-range card, and still is. PUBG does excellently with a 75 Hz/FPS lock at WQHD. In my opinion people don't need 144 fps on a 60 Hz screen. Cap that and you can easily halve your power bill. :) Something you don't hear people saying either.

I've yet to see an actual use of this card. AFAIK it's not even being offered by OEMs (but I'd love to be surprised).

There's also one other argument for it being pointless - Nvidia ignored the idea. It's not that hard to put an SSD into a Tesla or something.

Yeah... the Pro market disagrees.

AMD may be thinking they're making GPUs for pros, but actually they're still just making powerful chips. A pro product has to offer way more than just performance.

To be honest, I don't understand why this is happening. AMD is just way too big to make such weird mistakes.

I doubt AMD share in enterprise GPU market is larger than in CPU one...

Radeon does make very good custom chips though. So when someone orders a particular setup, the large potential of GCN can finally be explored. Consoles are great. The Radeon Pro GPUs inside MacBooks are excellent.

Also, the Radeon Pro made for Apple is beautifully efficient, unlike desktop parts (or even other mobile ones).

It shows that the power consumption / heat issues of GCN are a result of either really bad tuning or really bad quality (i.e. Apple gets all the GCN that gets near Nvidia's quality).

There's not a single AMD GPGPU accelerated machine on Top500 as well. There's nothing based on EPYC either.

Is there a new product coming... something, or does AMD just go idle for 12-18 months, maintaining the Polaris products as they are?