Thursday, September 27th 2018

NVIDIA's Weakness is AMD's Strength: It's Time to Regain Mid-Range Users

It won't be easy to see an AMD counterpart to the NVIDIA GeForce RTX 2080 Ti in the short term. Heck, it will be difficult to see any real competitor to the RTX 2000 Series anytime soon. But maybe AMD doesn't need to compete on that front. Not yet. The reason is simple: RTX prices are NVIDIA's weakness, and that weakness, my fellow readers, could become AMD's biggest strength.

The prices NVIDIA and its partners are asking for the RTX 2080 and RTX 2080 Ti have been a major discussion topic since we learned about them. Most users have criticized those price points, even after NVIDIA explained the reasoning behind them. These chips are bigger, more complex and more powerful than ever, so yes, costs have increased and that has to be taken into account. None of that matters. It doesn't even matter what ray tracing can bring to games, or whether those Tensor Cores will provide benefits beyond DLSS. And I'm not even counting the fact that DLSS is a proprietary technology that will lock us (and developers, for that matter) a little further into another walled garden. That holds even though market perception is clear: whoever has the fastest graphics card is perceived as the tech and market leader.

There's certainly a chance that RTX takes off and NVIDIA once again sets the bar in this segment: the reception of the new cards hasn't been overwhelming, but developers could begin to take advantage of everything Turing brings. If they do, we will be having a different discussion, one in which future cards such as the RTX 2070/2060 and their derivatives could bring a bright future for NVIDIA... and a dimmer one for AMD.

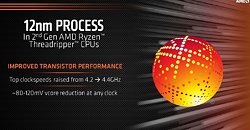

But what matters now for a lot of users is pricing, and AMD could leverage that crucial element of the equation. In fact, the company could do so very soon. Some reports suggest AMD could launch a new Polaris revision in the near future. This new silicon is allegedly being built on TSMC's 12 nm process, the same kind of move to 12 nm that AMD pulled off successfully with its Ryzen 2000 Series CPUs (which are built on GlobalFoundries' 12 nm process). AMD must have learned a good lesson there: its CPU portfolio is easily the best in its history, and Intel's recent woes are helping too, prompting forecast revisions that estimate a 30% global market share for AMD in Q4 2018. See? Intel's weakness is AMD's strength on this front.

2018 started with the worst possible news for Intel (Spectre and Meltdown), and it hasn't gone much better since: the jump to the 10 nm process never seems to arrive, and Intel's messaging about those delays hasn't helped reinforce confidence in the company. Without those big problems Intel would be three generations further along than it is now, and the situation for AMD would be quite different too.

Everything seems to make sense here: customers are upset with an RTX 2000 Series priced for the elite, and that Polaris revision could arrive at the right time and in the right place. With a smaller node AMD could get higher yields, lower costs and higher clock frequencies, and deliver the 15% performance increase some publications are pointing to. That's a lot of "coulds", and in fact there's no reason to believe that Polaris 30 is more than just a die shrink, so we would have the same unit counts and higher clocks. That probably won't be enough for the hypothetical RX 680 to catch up with a GTX 1070: in our tests the latter is 34% faster than the RX 580 on average. Even so, that refresh would give us a more competitive Radeon RX family that could win the price/performance battle, and that is no small feat.
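To put those two figures side by side, here is a quick back-of-the-envelope check in Python. It is only a sketch under stated assumptions: everything is normalized to the RX 580, the rumored 15% uplift is assumed to land in full, and "RX 680" is still a hypothetical name.

```python
# Back-of-the-envelope comparison, normalized to RX 580 = 1.00.
# Assumption: the rumored 15% uplift for a Polaris 30 card materializes in full.
RX_580 = 1.00
GTX_1070 = RX_580 * 1.34          # 34% faster than the RX 580 on average (our tests)
RX_680_ESTIMATE = RX_580 * 1.15   # hypothetical "RX 680" with the rumored +15%

# How far the GTX 1070 would still be ahead of the refreshed card
gap = GTX_1070 / RX_680_ESTIMATE - 1
print(f"GTX 1070 lead over a hypothetical RX 680: {gap:.1%}")
```

Even in that optimistic case the GTX 1070 would keep a lead of roughly 16-17%, which is why the price tag, not raw performance, would have to do the heavy lifting.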

The new cards would target not just existing GTX 700/900 Series users, but also owners of older AMD Radeon cards who have been waiting for a nice performance upgrade without having to sell their souls. And for undecided users, the ones thinking about getting a GTX 1050/1050 Ti or a GTX 1060, AMD's offer could be quite attractive if its price/performance ratio hits NVIDIA where it hurts most.

That would put the new family of graphics cards (Radeon RX 600?) in a pretty good position to compete with the GeForce GTX 1000 Series. NVIDIA would presumably still be king of power efficiency, but beyond that, AMD could position itself in the $300-$500 range (and even below it) with a really compelling suite of products.

So yes, AMD could have a winning hand here. Your move, AMD.

110 Comments on NVIDIA's Weakness is AMD's Strength: It's Time to Regain Mid-Range Users

Context: Nvidia launched the GP106 Pascal GTX 1060 less than a month after the RX 480, though AIB reviews didn't appear until mid-to-late August, and real stock didn't arrive until around September. AMD was briskly selling 480s and 470s at and below their MSRP, as folks weren't seeing much "value" in a card that offered 2 GB (25%) less memory for 30% more cash and a 3-4% performance uptick.

Worse, by October mining was already affecting 480/470 prices, making them less palatable, and by Christmas they were only being bought by miners. The GTX 1060 stayed reasonably priced, and miners weren't flocking to it. And sure, since Steam lumps all GTX 1060s (the 3 GB "geldings" and the 6 GB cards) together as one entry, its usage looks high. If mining had not been in play, and we looked at all Polaris cards (480/470/580/570) together, the Steam discrepancy some tout today would in all probability be nowhere near as large.

If the mining craze wasn't there, those cards would be available at MSRP. Then you have these a-hole webshops who put their extra margin on top of the MSRP, and there you have it.

But buying used is used is used, and should be priced accordingly. You should never pay more than you are willing to risk for a short-lived product. The second-hand market should never be compared to prices of new hardware. E.g. a two-year-old RX 480 at $120 should be considered a card with two years less lifetime than a brand-new one. And especially in these mining times, I would not buy any used graphics card.

Of course it was soon fixed via a driver update, but unfortunately the FUD about it causing your house to burn down had already spread like... wildfire (no pun intended!), so the later and more expensive 1060 seemed like the better choice regardless.

Personally, I'm convinced I will be holding on to my 980 Ti until it blows up. If nothing is around by then, I'll give up on gaming AAA titles.

When I look at this editorial, as well as the RTG-crazed fanboys (and fangirls, fancats, etc.), all I can think of is this:

IMO, AMD will re-enter the gaming GPU market when HBM becomes affordable enough and 7nm is sampling well enough to launch. Nvidia is sort of locked into this generation for the next year, so I wouldn't be surprised to see a Navi launch targeting the whole stack (IF Navi performs) around the same time as Zen 2, so Feb/Mar next year.

As for the muppets saying Polaris sold well: it did, to miners. The Steam survey shows the 1060 outpacing it by around 5 to 1. Which is a shame; the 580 is a better card than the 1060 most of the time, so it should have sold better. But see the original point: gamers don't buy AMD even when they have competitive products.

In the interest of avoiding political discussions, please leave your helicopter implications at home.

My 390 has been solid, with the exception of spending a year with a driver bug in Overwatch that took AMD forever to fix. That alone is reason enough for me not to return to AMD again. Their driver development team is so outclassed by nVidia's; when I had issues with nVidia, they got fixed much faster than that. However, nVidia choosing to put 106-series chips, which have historically been relegated to midrange/low-mid GPUs, into the high end is something that doesn't make me want to go back. It means nVidia is getting away with near robbery by charging hundreds more for a low-mid to mid part. Granted, nVidia has been able to get away with this partly due to AMD's ineptitude in delivering competitive GPUs.

To get the GPU I'd want to buy, I'd have to spend close to $500 now, when the traditional sweet-spot price point was around $300. Whatever nVidia dumps in the $300 segment will likely be both underperforming and crippled in terms of VRAM. That's why I jumped ship back to AMD for the 390. I hope AMD really drills nVidia for this behavior with some solid boards. But more Polaris isn't going to cut it. Polaris is a dead-end chip that has been underwhelming since launch, and revisions do nothing to address its problems.

On the note of memory bandwidth: why would AMD opt for a quad-stack, 4096-bit interface on Vega 20 (this is confirmed, as we have seen the chip being displayed), with potentially over 1 TB/s of raw memory bandwidth, if it wasn't at least somewhat limited by memory bandwidth? Or is that purely for memory capacity reasons? Honestly, almost everyone I talk to about GCN says it is crying out for more bandwidth. It's also worth pointing out that NVIDIA's Delta-Colour Compression is significantly better than AMD's in Vega: the 1080 Ti almost certainly has quite a bit more effective bandwidth than Vega when that is factored in.
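For reference, raw peak bandwidth is just bus width (in bytes) times per-pin data rate. The sketch below assumes 2.0 Gbps per pin for Vega 20's HBM2, which is an assumption since the shipping memory speed isn't stated here, and uses the published configurations of Vega 64 (2048-bit HBM2 at about 1.89 Gbps) and the GTX 1080 Ti (352-bit GDDR5X at 11 Gbps).

```python
def peak_bandwidth_gbs(bus_width_bits: int, gbps_per_pin: float) -> float:
    """Raw peak bandwidth in GB/s: (bus width in bits / 8 bits per byte) * per-pin data rate."""
    return bus_width_bits / 8 * gbps_per_pin

# Illustrative figures; Vega 20's 2.0 Gbps per pin is an assumption.
cards = {
    "Vega 20 (4 HBM2 stacks, assumed 2.0 Gbps)": (4096, 2.0),
    "Vega 64 (2 HBM2 stacks, ~1.89 Gbps)": (2048, 1.89),
    "GTX 1080 Ti (352-bit GDDR5X, 11 Gbps)": (352, 11.0),
}

for name, (width, rate) in cards.items():
    print(f"{name}: {peak_bandwidth_gbs(width, rate):.0f} GB/s")
```

These are raw peaks only: Vega 64 and the 1080 Ti land at roughly the same ~484 GB/s on paper, so any effective-bandwidth advantage from better colour compression is exactly what this arithmetic does not capture.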

So resource utilisation is a major issue for Vega, then. Do you think there is any chance they could have 'fixed' any of this for Vega 20? I won't lie, I've been kinda hoping for some Magical Secret Sauce for Vega 10, perhaps NGG Fast Path or the fabled Primitive Shaders. *shrug* Even if it doesn't happen, I am satisfied with my Vega 56 as it is; I am only playing at 1080p, 60 Hz, so it is plenty fast enough.

But the problem with Steam surveys is that it just shows the number of users who played with that GPU, not the actual number of different machines.

For example, the share of Intel/nVidia systems skyrocketed when they added Chinese users to the survey.

The reason for that is that East Asian users generally do not own their own PCs but play at internet cafes on pre-built PCs (which are generally Intel/nVidia), and each machine can serve hundreds of different users at different times.

The problem that I see for Nvidia, though, is that they have priced the 20x0 series at the price points of the 10x0 cards with comparable performance. This means that the only real performance-per-dollar increase is the as-yet unknown performance boost from DLSS and the image quality improvements from RTX. If Nvidia had priced the 20x0 series at the same model price points as the previous 10x0 series, they would literally own the market and push AMD's GPU market share from around 15% down into the single digits.

(Good piece, but anything mentioning red/green in a positive fashion WILL get trolled into oblivion by those who subscribe to their own version of the truth.)

blah GREEN!

It really is a personal choice. I just bought a used 580 (PowerColor Red Devil 8GB) from a trusted TPU'er who mined on it by undervolting it and boosting memory clocks. I went with the 580 because I am going to be using a 1080p monitor, and at 1080p it is right in line with a lot of cards that are much more expensive to buy. So once my gaming rig is finished, hopefully Sunday, I will be adding another 580 to the Steam results, LOL.

I am far from a "Gaming Enthusiast" and more of a play-when-I-can gamer. I am sure I will be plenty happy with my 580. My last gaming card, an MSI 7850 now in one of my T3500s, is going to do some extra duty as my gaming card for now.

In a broader sense though, yes, the Steam Survey can really paint a wrong picture if you consider it 'the entire market'.

Yes, and at that price AMD will be doing lots of work for nothing more than breaking even. You know this won't happen unless they can build a GPU as efficiently as they built Zen: awesome yields, good efficiency, and performance that can be scaled across the whole product stack. The idea that you can somehow win on price alone just won't fly. You need a product that is competitive at an architectural/design level.

summer 2016:

- rx470: $179

- rx480 4gb: $199

- rx480 8gb: $239

- 1060 3gb: $199

- 1060 6gb: $249

spring 2017:

- rx570: $169

- rx580 4gb: $199

- rx580 8gb: $239

No, it would not. The Steam HW survey, despite its shortcomings, is good enough for some generic data, and it shows that GTX 1080 and higher cards make up around 5% of the market. That is not a big enough segment to shake things up.

That is an awesome card, and congrats on getting one. A note though: these sold for anywhere between $400 and $500 when new, basically twice the MSRP ;)

If you want better quality, hold back from paying for crap. Use your wallets, not your impulsive buying nature, to show all these companies who owns them. Yes, it's you, the consumer, who owns their ass; without you they would be out of business. Common logical sense. (Save money, buy old tech, wait until issues and bugs are sorted, then invest in the hardware. Simple.)

That's my 2p's worth.

I agree with you for the most part; the idea here was to express a shared sentiment: c'mon AMD, do something, react, give users an option. I think they really can take advantage of the pricing issues with RTX, but it won't be easy to see big changes. Let's see what happens; I guess I'm being too optimistic here.

Thank you for sharing your thoughts, as I've said they are a good portrait of the current situation.

AFAIK Ryzen is built on GloFo 12nm...