Thursday, September 27th 2018

NVIDIA's Weakness is AMD's Strength: It's Time to Regain Mid-Range Users

It won't be easy to see an AMD counterpart to NVIDIA's GeForce RTX 2080 Ti in the short term. Heck, it will be difficult to see any real competitors to the RTX 2000 Series anytime soon. But maybe AMD doesn't need to compete on that front. Not yet. The reason is sound and clear: RTX prices are NVIDIA's weakness, and that weakness, my fellow readers, could become AMD's biggest strength.

The prices NVIDIA and its partners are asking for RTX 2080 and RTX 2080 Ti have been a clear discussion topic since we learnt about them. Most users have criticized those price points, even after NVIDIA explained the reasoning behind them. Those chips are bigger, more complex and more powerful than ever, so yes, costs have increased and that has to be taken into account.

None of that matters. It doesn't even matter what ray-tracing can bring to the game, and it doesn't matter if those Tensor Cores will provide benefits beyond DLSS. I'm not even counting the fact that DLSS is a proprietary technology that will lock us (and developers, for that matter) a little bit more into another walled garden. None of it matters even if you accept that the market perception is clear: whoever has the fastest graphics card is perceived as the tech/market leader.

There's certainly a chance that RTX takes off and NVIDIA sets the bar again in this segment: the reception of the new cards hasn't been overwhelming, but developers could begin to take advantage of all the benefits Turing brings. If they do, we will have a different discussion, one in which future cards such as RTX 2070/2060 and their derivatives could bring a bright future for NVIDIA... and a dimmer one for AMD.

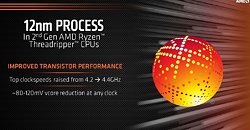

But the thing that matters now for a lot of users is pricing, and AMD could leverage that crucial element of the equation. In fact, the company could do that very soon. Some indications reveal that AMD could launch a new Polaris revision in the near future. This new silicon is allegedly being built on TSMC's 12 nm process, something AMD did successfully with its Ryzen 2000 Series of CPUs.

AMD must have learnt a good lesson there: its CPU portfolio is easily the best in its history, and the latest woes at Intel are helping and causing forecast revisions that estimate a 30% market share globally for AMD in Q4 2018. See? Intel's weakness is AMD's strength on this front.

2018 started with the worst news for Intel (Spectre and Meltdown) and it hasn't gone much better since: the jump to the 10 nm process never seems to come, and Intel's messages about those delays have not helped to reinforce confidence in this manufacturer. The company would be three generations further along than it is now without those big problems, and the situation for AMD would be quite different too.

Everything seems to make sense here: customers are upset with an RTX 2000 Series priced for the elite, and that Polaris revision could arrive at the right time and the right place. With a smaller node AMD could gain higher yields, decrease costs, increase clock frequencies and provide that 15% performance increase some publications are pointing to. Those are a lot of "coulds", and in fact there's no reason to believe that Polaris 30 is more than just a die shrink, so we would have the same unit counts and higher clocks.

That probably won't be enough to make the hypothetical RX 680 catch up with a GTX 1070: according to our tests, the latter is on average 34% faster than the RX 580. Even so, that refresh would give us a more competitive Radeon RX family that could win the price/performance battle, and that is no small feat.
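To put those two percentages side by side, here is a minimal back-of-the-envelope sketch. It assumes the RX 580 is normalized to a score of 100, that the rumored 15% uplift applies uniformly, and that the 34% GTX 1070 lead holds on average; the function name and constants are illustrative only, not measured data.

```python
# Hypothetical figures: RX 580 normalized to 100, the rumored 15% uplift,
# and the 34% average GTX 1070 lead quoted in the text.
RX_580 = 100.0
RUMORED_UPLIFT = 0.15
GTX_1070_LEAD = 0.34


def remaining_gap(baseline: float, uplift: float, rival_lead: float) -> float:
    """Return the rival's relative lead over the refreshed card."""
    refreshed = baseline * (1.0 + uplift)   # hypothetical refreshed Polaris
    rival = baseline * (1.0 + rival_lead)   # GTX 1070 on the same scale
    return rival / refreshed - 1.0


gap = remaining_gap(RX_580, RUMORED_UPLIFT, GTX_1070_LEAD)
print(f"GTX 1070 lead over a refreshed card: {gap:.1%}")
```

Under these assumptions the GTX 1070 would still lead by roughly 16 to 17%, which is why any case for the refresh rests on price/performance rather than raw speed.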

The new cards would not only target existing GTX 7/9 Series users, but also those older AMD Radeon users who were expecting a nice performance upgrade without having to sell their souls. And for the undecided users, the ones who are thinking about getting a GTX 1050/Ti or a GTX 1060, AMD's offer could be quite attractive if its price/performance ratio hits NVIDIA where it hurts most.

That would put that new family of graphics cards (Radeon RX 600?) in a pretty good position to compete with GeForce GTX 1000. NVIDIA presumably could still be king in the power consumption area, but besides that, AMD could position itself in that $300-$500 range (and even below that) with a really compelling suite of products.

So yes, AMD could have a winning hand here. Your move, AMD.

110 Comments on NVIDIA's Weakness is AMD's Strength: It's Time to Regain Mid-Range Users

@dmartin Ryzen 2000 Series is being built on GF's 12 nm, not on TSMC's 12 nm.

With a smaller node AMD could gain higher yields, decrease cost, increase clock frequencies and provide that 15% performance increase some publications are pointing to. Those are a lot of "coulds", and in fact there's no reason to believe that Polaris 30 is more than just a die shrink, so we would have the same unit counts and higher clocks.

If Polaris 30 is being built on TSMC's 12 nm then it is not comparable to Polaris 10 or 20, which are built on GF's 14 nm instead. The metrics are not compatible between different foundries, so we can't be sure whether it is a smaller node or a die shrink after all, and any performance uplift is uncertain.

"resistance movement or a cult, refusing to face the facts."

I just see two huge rants/breakdowns of this "Editorial" in a matter of minutes, with all these current stats and claims, almost like they had them canned and waiting in the wings. And then there are attacks that this "new contributing editor" is a "plant", while two "short-timers" can construct volumes of rebuttal, and they purport he and others must be some shill or resistance for not adhering to their tyrants... Wow!

The thing with the Steam indicator is that all GTX 1060s are lumped together, the 6 GB and the cheaper 3 GB geldings. So for apples to apples, lump the 470/570 numbers into the AMD data as well.

What is the point of these bits of text? To echo the popular sentiment on a forum after the fact? If the idea is to breathe life into a discussion or create one... well. Mission accomplished, but there are a dozen threads that have done that already. And this piece literally adds nothing to it, all it serves is to repeat it.

Regardless.... here's my take on the editorial you wrote up @dmartin

I think it's a mistake to consider Nvidia's Turing a 'weakness'. You guys act like they dropped Pascal on the head when they launched Turing and there is no way back. What Turing is, is an extremely risky and costly attempt to change the face of gaming. Another thing Turing is, is another performance bracket and price bracket over the 1080ti. Nothing more, nothing less. As for perf/dollar, we are now completely stagnated for 3 years or more - and AMD has no real means to change that either.

You can have all sorts of opinions on that, but that does not change the landscape one bit, and a rebranded Polaris (2nd rebrand mind you, where have we seen this before... oh yeah, AMD R9 - that worked out well!) won't either. AMD needs to bring an efficiency advantage to its architecture and until then, Nvidia can always undercut them on price because they simply need less silicon to do more. Specifically in the midrange. Did you fail to realize that the midrange GPU offering realistically hasn't changed one bit with Turing's launch?

If anyone really thinks that an RX680 or whatever will win back the crowd to AMD, all you need to do is look at recent history and see how that is not the case. Yes, AMD sold many 480s when GPUs were scarce, expensive and mostly consumed by miners. In the meantime, DESPITE mining, AMD still lost market share to Nvidia. That's how well they sell. Look at the Steam Survey and you see a considerably higher percentage of 1060s than you see RX480/580s. Look anywhere else with lots of data and you can see an overwhelming and crystal clear majority of Nvidia versus AMD.

What I think is that while Nvidia may lose some market share and they may have miscalculated the reception of RTRT / RTX, the Turing move still is a conscious and smart move where they can only stand to gain influence and profit. Simply because Pascal still is for sale. They cover the entire spectrum regardless. Considering that, you can also conclude that the 'Pascal stock' really was no accident at all. Nvidia consciously chose to keep that ace up its sleeve, in case Turing wasn't all they made it out to be. There is really no other option here, Nvidia isn't stupid. And I think that choice was made the very moment Nvidia knew Turing was going to get fabbed on 12nm. It had to be dialed back.

Nothing's changed, and until AMD gets a lean architecture and can fight Nvidia's top spots again, this battle is already lost. Even if Turing costs 2000 bucks. The idiocy of stating you can compete with a midrange product needs to stop. It doesn't exist in GPU. Today's midrange is tomorrow's shit performance - it has no future, it simply won't last.

Polaris would still be good if those damned prices were lower. 4GB RX580 at €200 is what it should be.

I'm not claiming Nvidia is close to perfect, but this just sounds to me like one of those people who get a little disappointed once and then boycott the vendor "forever".

I've experienced a lot over the years with GPUs, and worn out or bricked a good stack of GPUs (even killing a couple with my own flawed code…). I've seen countless crashes, broken drivers, etc., all of this without touching GPU overclocking. If I were to boycott a vendor once I encounter a minor problem, then I would have nowhere to go.

Finally! Have we found that one guy who actually plays AotS? :cool: </sarcasm>

Like I said, the 480 competed well, but with efficiency gains, pascal based midrange cards are going to outperform AMD at all levels (performance, power consumption, cost), unless nvidia just decides NOT to do that and leave AMD anything below the 2070.

If AMD wants to be competitive in the midrange, they need to be able to meet nvidia tit for tat. When you are shopping on a budget, one brand being consistently 10-20% faster is going to ensure you buy that brand. You get the best deal for the $$$, and that will be nvidia unless they don't compete.

I've seen games at 1200p already push over 4GB on my 480. Games are memory hungry now.

NO! God why does everybody think I'm talking about old pascal cards?!?

Mid range cards based on TURING would destroy AMD's bang for the buck argument with GCN. A die shrink might not be enough for GCN. They really need to update their arch to stay competitive in any market segment. IF nvidia comes out with Turing midrange cards, AMD won't have much support until they get a new arch out, unless nvidia outprices themselves ridiculously or just doesn't bother to make anything below the 2070.

This was in response to somebody saying "well AMD will still have the midrange". AMD needs to update their arch, regardless if they are going to compete in the high end, because nvidia's arch improvements will make it to mid range GPUs eventually, and once they do, polaris will have to be so cheap there will not be any profit in it for AMD or the AIBs.

A turing mid range card is what AMD needs to watch for. They were losing money in the early 2010s, and the low price of GPUs was NOT helping matters. They don't want to get trapped in that situation again. Remember, those super low prices came after the utter collapse of the mining scene the first time around, and the market was flooded with AMD cards. Those prices were not sustainable, as was shown with the 300 series being more expensive as AMD attempted to make money somewhere.

And the piece is right: the 2060, when it shows up, is perhaps going to compete on price with most AMD hardware, but without usable RTX and with a higher price it's also fighting all the old Pascals on eBay, i.e. a lot of stuff that is equal or better.

Nice to see our resident Intel and Nvidia power user involved in the debate. You earning good coin?

And I'm not saying AMD is not behind the 8-ball here, nor denying that Nvidia has upped the ante, given they're dropping cash on R&D and engineering as they have one singular focus. But it's still a bang-for-buck market, and given Nvidia's release of Pascal and now Turing, they haven't been slowing their roll on pricing. They're on a different path, needing to absolutely recoup those expenditures in all market segments, even the high-volume sellers, as not recouping them is bad: they wouldn't have the cash to sustain it.

AMD is in the same situation as when Piledriver CPUs had to be the backstop for several years till Zen came about. This too will pass.

I'm sure AMD and AIBs could still have decent margins with a re-spin and optimization of the current Polaris, going with GDDR6 as a "pipe-cleaner" exercise given the design money is definitely spent. So they pick up a little extra from just the 12nm process, and refined boost algorithms to push past 1500 MHz, all perhaps in a 175W envelope. They end up with a card that would provide strong/competent 1440p... and at a $230 price it will have a lot going for it. Then there's the geldings (570s) for $160; and 1080p is perhaps where their best attack lies, bolstering the "entry-novice" segment, as that would keep them pulling market share.

People buy: A) On price; B) Monitor resolution now and near future; C) If they see or find games that tax the experience/immersion of play.

Seriously, why are you desperately trying to prove all this? Is this a business meeting and you're trying to make a sales pitch? It's because of comments like these that people end up believing some of you are paid shills, for real. Just say this: "I think 1060 is better because it's a bit faster and more power efficient", spare us the block of text and numbers. It's been 2 god damn years since these cards were released, we know the deal.

AMD is fighting an uphill battle in that area, I have heard that NVIDIA are getting developers to actively change the rendering pipeline in major PC-ported game engines to better suit their GPU designs, at the expense of performance on GCN. Even with GCN in the major gaming consoles, when porting to PC, that all goes out of the window. I am not saying GCN is a perfect architecture, it is quite obvious that there needs to be some serious redesign, especially with regards to its resource balancing and scalability (64 ROP, 4 SE, 64 CU seems to be an architectural limitation at this stage).

I think GCN was designed with a different idea of what game engines would use in 3D graphics. A strong emphasis on Compute performance, but a lighter approach to Pixel and Primitive performance. It is obvious that NVIDIA's architecture is more balanced / suited to current game engines. That brings me to the utilisation point: I have been experimenting with GPUOpen's Radeon GPU Profiler and taking some profiles of DX12 and Vulkan games that I own. From what I can see Vega's shader array spends a significant amount of time doing literally nothing. This shows up in the profiler's "Wavefront Occupancy" view. I was actually shocked to see it visualised. It is obvious that Vega is not shader bound in current games as the performance gap when clock speeds are normalised between 56 CU and 64 CU chips is ~5%, despite the latter having about 14.3% more shading resources.
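As a rough illustration of that last point, the sketch below works out how much of the extra shader hardware turns into frame rate. It takes the ~5% clock-normalised gap and the 56 vs 64 CU counts from the post as given; the function name is made up for this example and nothing here is measured data.

```python
def scaling_efficiency(small_units: int, large_units: int, observed_gain: float) -> float:
    """Fraction of the extra compute units that shows up as extra performance."""
    extra_resources = large_units / small_units - 1.0  # 64/56 - 1, about 0.143
    return observed_gain / extra_resources


# 56 CU vs 64 CU at equal clocks, with the ~5% gap quoted in the post (assumed).
efficiency = scaling_efficiency(56, 64, 0.05)
print(f"Extra shading resources converted into performance: {efficiency:.0%}")
```

With those inputs only about a third of the additional shading resources translates into performance, which is consistent with the chip being limited somewhere other than the shader array.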

What I am trying to say with this somewhat lengthy post is that Vega is not the garbage that so many people claim. AMD tried to fix some major bottlenecks with NGG, but for whatever reason it is not fully implemented in production silicon, or enabled in the current driver stack - Vega 10 falls back to a legacy implementation of fixed-function geometry processing, all while its shader array spends more time than not idling. So take a moment to fully understand why this could be, rather than bashing the GPU as 'garbage'. Remember that RTG doesn't have the luxury of the funds to develop multiple GPU lines for different uses; Vega is a multi-purpose chip delivering extremely competitive Compute performance and serviceable gaming performance. It also has the highest level of DX12 feature support of the current dGPUs, even greater than Turing.

TLDR: Vega is heavily underutilised in games, potentially due to lack of real effort by devs to optimise for it, or being designed for a dramatically different type of workload (Compute-heavy) than what current games demand. Here's to hoping AMD can turn Radeon around. Thanks for reading. -Ash

The difference between TSMC's 10 nm and 7 nm is small, and since Vega 10 is being built on GF's 14nm, we can't compare between different foundries, so the real density is unclear.

Vega 20 is very unlikely to double transistors since it has the same amount of cores as Vega 10. Anyway Vega 20 is not expected to launch in the gaming market; I believe the reason is that the yields are not good enough for it to be priced at competitive levels (less than $800).

Of course AMD says it is only 2x density so it is 10nm. But why would they call it 7nm then.

2) Well, considering most top games are console ports, the game bias today is favoring AMD more than ever. Still, most people are misguided about what is actually done in "optimizations" from developers. In principle, games are written using a common graphics API, and none of the big ones are optimized by design for any GPU architecture or a specific model. Developers are of course free to create different render paths for various hardware, but this is rare and shouldn't be done; it's commonly only used when certain hardware has major problems with certain workloads. Many games are still marginally biased one way or the other. This is not intentional, but simply a consequence of most developers doing the critical development phases on one vendor's hardware, and then by accident making design choices which favor one of them. This bias is still relatively small, rarely over 5-10%.

So let's put this one to rest once and for all; games don't suck because they are not optimized for a specific GPU. It doesn't work that way.

Do you have concrete evidence of that?

Even if that is true, the point of benchmarking 15-20 games is that it will eliminate outliers.

Your observation of idle resources is correct (3), that is the result of the big problem with GCN.

You raise some important questions here, but the assessment is wrong (4).

As I've mentioned, GCN scales nearly perfectly on simple compute workloads. So if a piece of hardware can scale perfectly, then you might be tempted to think that the error is not the hardware but the workload? Well, that's the most common "engineering" mistake; you have a problem (the task of rendering) and a solution (hardware), and when the solution is not working satisfactorily, you re-engineer the problem, not the solution. This is why we always hear people scream that "games are not optimized for this hardware yet"; well, the truth is that games rarely are.

The task of rendering is of course in principle just math, but it's not as simple as people think. It's actually a pipeline of workloads, many of which may be heavily parallel within a block, but may also have tremendous amounts of resource dependencies. The GPU has to divide these rendering tasks into small worker threads (GPU threads, not CPU threads) which run on the clusters, and based on memory controller(s), cache, etc. it has to schedule things so that the GPU is well saturated at any time. Many things can cause stalls, but the primary ones are resource dependencies (e.g. multiple cores need the same texture at the same time) and dependencies between workloads. Nearly all of Nvidia's efficiency advantage comes down to this, which answers your (3).

Even with the new "low level APIs", developers still can't access low-level instructions or even low-level scheduling on the GPU. There are certainly things developers can do to render more efficiently, but most of that will be bigger things (on a logic or algorithmic level) that benefit everyone, like changing the logic in a shader program or achieving something with fewer API calls. The true low-level optimizations that people fantasize about are simply not possible yet, even if people wanted to do them.

we see how that went - to my utter disappointment. so here we are.

hawaii will be 5 y/o next month (happy birthday!) though it was a hot hungry power hog; its price/performance was undisputed. that was, imo AMD's last successful launch.

and that makes a sad panda.

I will mention...I just picked up an RX 480 for 120 on eBay. Just like brand new. There's a ton of RX 470/480s or RX 570/580s floating around dirt cheap out there. With today's 15% off everything...good deals galore.

For 1080p gaming...I'm more than ecstatic...borderline giddy!

:),

Liquid Cool