Sunday, November 25th 2018

14nm 6th Time Over: Intel Readies 10-core "Comet Lake" Die to Preempt "Zen 2" AM4

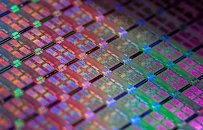

Had Intel's now-defunct "tick-tock" product development cadence held its ground, the 14 nm silicon fabrication node would have seen just two micro-architectures, "Broadwell" and "Skylake," with "Broadwell" being an incrementally improved optical shrink of 22 nm "Haswell," and "Skylake" being a newer micro-architecture built on a by-then more mature 14 nm node. Intel's silicon fabrication node advancement went off the rails in 2015-16, and 14 nm would go on to be the base for three more "generations": the 7th generation "Kaby Lake," the 8th generation "Coffee Lake," and the 9th generation "Coffee Lake Refresh." The latter two saw Intel increase core-counts after AMD broke its slumber. It turns out that Intel won't let the 8-core "Coffee Lake Refresh" die carry the weight of its competitiveness and prestige through 2019, and is planning yet another stopgap, codenamed "Comet Lake."

Intel's next silicon fabrication node, 10 nm, takes off only toward the end of 2019, and AMD is expected to launch its 7 nm "Zen 2" architecture much sooner than that, with a debut in December 2018. Intel probably fears AMD could launch client-segment "Zen 2" processors before Intel's first 10 nm client-segment products, to cash in on its competitive edge. Intel is looking to blunt that with "Comet Lake." Designed for the LGA115x mainstream-desktop platform, "Comet Lake" is a 10-core processor die built on 14 nm, and could be the foundation of the 10th generation Core processor family. It's unlikely that the underlying core design is changed from "Skylake" (circa 2015). It could retain the same cache hierarchy, with 256 KB of L2 cache per core, and 20 MB of shared L3 cache.

All is not rosy in the AMD camp, either. The first AMD 7 nm processors will target the enterprise segment and not client, and CEO Lisa Su, in her quarterly financial results calls, has been evasive about when the first 7 nm client-segment products could come out. There was some chatter in September of a "Zen+" based 10-core socket AM4 product leading up to them.

Source: HotHardware

123 Comments on 14nm 6th Time Over: Intel Readies 10-core "Comet Lake" Die to Preempt "Zen 2" AM4

So you can score a cheap 260 EUR TN somewhere. Great... it has no relation to your price argument in terms of CPU prices and how all of a sudden gaming is so expensive. You're now saying it's not? Do you even logic?

I see this trend a lot. Kids whine about price and then proceed to invest and force themselves into the most expensive niches they can find: be it high refresh, 4K, or silly demands in terms of peripherals... Even you are saying you'd back down to medium settings on a lightweight game to get decent FPS to suit your monitor choice. It's... well. To each his own, but my mind is blown.

Hear, hear

But there's a MASSIVE difference between 240Hz gaming and 30Hz gaming, no matter what.

If you compare the prices of what most gamers were actually buying back then, to the prices of the top end, and then you compare the modern equivalents to those, you'll see that "Mainstream" has jumped in price by ~63% in the last 7 years and "HEDT" has jumped by ~88%, while the $ itself has only risen by ~12%
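To put those figures on a common footing, here is a minimal sketch that converts the nominal jumps into inflation-adjusted ones. It uses only the percentages quoted above (~63%, ~88%, ~12%); the function name `real_increase` is made up for illustration, and treating the ~12% as a single cumulative inflation figure over the 7 years is an assumption.

```python
def real_increase(nominal_pct: float, inflation_pct: float) -> float:
    """Return the inflation-adjusted percentage increase.

    Both arguments are cumulative percentages over the same period.
    """
    return ((1 + nominal_pct / 100) / (1 + inflation_pct / 100) - 1) * 100


# Figures taken from the comment above; all are approximate.
mainstream = real_increase(63, 12)
hedt = real_increase(88, 12)

print(f"Mainstream: ~{mainstream:.1f}% in real terms")
print(f"HEDT: ~{hedt:.1f}% in real terms")
```

Under those assumptions the real-terms increases come out to roughly 45% for "Mainstream" and 68% for "HEDT", so the jump is still large after accounting for the dollar.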

www.cnet.com/news/intel-releases-new-prices-on-pentiums/

But the thing is, back then CPUs were the main component of a PC. Well, they still are, but nowadays the GPU has taken over quite a lot of the workload that the CPU used to perform

and the prices of computers were still very high in the 90s.

I remember my parents paid approximately 3000 USD for a Dell PC with a Pentium at 120 MHz and 8 MB of RAM back in 1996

the graphics processing unit was integrated onto the motherboard and had 2 MB of memory....

The further back you go in time, the more different not only pricing becomes, but the PURPOSE of the hardware also changes, in many cases entire market segments simply don't exist when you go back in time, so the usefulness of a comparison becomes very limited.

My former HD5870, fastest GPU in the world by that time, cost me 350€. Nowadays the fastest GPU costs 1200€ in my country. Enough said.

at least that's how it felt when I was playing Duke Nukem 3D and Quake

The strange thing is there were more players in the CPU market, with Cyrix being one of the big names; I am sure there were also a few others around besides Intel. And yet the cost of a computer was just outrageously high, at least compared to the prices today.

BUT that doesn't mean Nvidia and Intel are not greedy for charging 1300 bucks for a consumer gpu and 600+ for a refresh-refresh-refresh cpu

Can't wait to see what the next step is though, now that Moore's law is coming.

No wait, how about a TUF board 10-cores 20-threads. :roll:

The ROG forum should be fun to watch.

"Waddaya mean it's only a 2-phase VRM?" :D

Intel is going to launch some new SKUs without IGP soon-ish. Same socket as now at least...

I'm afraid that's all I can share right now, but I'm sure more details will leak soon.

The last 10 core they had (7900x) was popping mobos at launch. The 9900K OC'd has that ability - this 10 core is going to be a house burner.

The VRM won't be an issue if you choose the right board.

For those worrying about Dual Ring-bus performance in games, I would guess they can write drivers that tell a game to utilize only 1 of the 2 ringbusses. A 5.2GHz 5-core will game just as well as a 5GHz 8-core, and then they can attempt to continue to compete in multithreading with midrange Zen 2.

This socket would also pave the way for the inevitable 14nm++++ 12, 14, and 16-core dual-dies Intel will be forced to launch in 2020....

The problem is that Z390 is already a high end chipset, and it should be expected that any Z390 board can run the full range of Intel's lineup without breaking a sweat.

If we were talking about B360 boards, then sure, budget board, super high end CPU, that's a mismatch, plain and simple. But Z390 is a high end chipset. The boards are expensive. One of the main draws to buying that chipset is overclocking.

So why do products even exist with that chipset, that have no hope of maintaining even a mild overclock without making us have to side-eye the VRMs on each one individually?

The simple answer is, that's happening because Intel are wringing the scrawny fucking neck of 14nm too long, too hard, and hoping the consumer doesn't notice how close to the ragged edge these chips are actually running.

I'm afraid I can't share much more, as this information hasn't leaked yet so...

I guess it would be on current Intel roadmaps, as it's apparently not that far off.

And that "who we're dealing with" is just ridiculous. People in 2018 still have problems accepting gamers that play on both PC and consoles? Damn, you got stuck in the past, bud. On the previous page I had to take a picture to show my 240Hz monitor and explained how I love to game on it. That doesn't stop me from enjoying consoles as well. Is there any contract we sign which says that if we play on PC we can't touch consoles? Please...... move along.

Maybe if PC kept delivering amazing exclusives pushing boundaries I wouldn't have to buy consoles. Think about it. But when was the last one? Crysis 1 in 2008? And the Nintendo Switch is another target for me; I haven't bought it yet because it is still too expensive for what it offers. But it has amazing games as well and I will for sure play it in the future. Open your mind.