Monday, September 2nd 2019

AMD "Renoir" APU to Support LPDDR4X Memory and New Display Engine

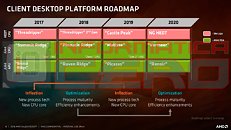

AMD's next-generation "Renoir" APU, which succeeds the company's 12 nm "Picasso," will be the company's first truly next-generation chip to feature an integrated graphics solution. It's unclear as of now whether the chip will be based on a monolithic die, or whether it will be a multi-chip module of a 7 nm "Zen 2" chiplet paired with an enlarged I/O controller die that contains the iGPU. We're getting confirmation on two key specs: one, that the iGPU will be based on the older "Vega" graphics architecture, albeit with an updated display engine to support the latest display standards; and two, that the processor's memory controller will support the latest LPDDR4X memory standard, at speeds of up to 4266 MHz DDR. In comparison, Intel's "Ice Lake-U" chip supports LPDDR4X up to 3733 MHz.

Code-lines pointing toward "Vega" graphics with an updated display controller mention the new DCN 2.1, found in AMD's new "Navi 10" GPU. This controller supports DSC 1.2a and resolutions of up to 8K, including new modes of 4K at up to 240 Hz and 8K at 60 Hz over a single cable, along with 30 bits per pixel color. The multimedia engine is also suitably updated to the VCN 2.1 standard, and provides hardware-accelerated decoding for some of the newer video formats: VP9 and H.265 at up to 90 fps at 4K and up to 24 fps at 8K, and H.264 at up to 150 fps at 4K. There's no word on when "Renoir" comes out, but a 2020 International CES unveil is likely.
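To see why DSC matters for those modes, here is a rough back-of-the-envelope sketch of the uncompressed pixel data rate at 30 bits per pixel. It counts active pixels only (no blanking intervals), and the link figure used for comparison is an assumption: the article does not say which cable standard is involved, so the DisplayPort 1.4 HBR3 payload rate is used purely as a reference point.

```python
# Rough estimate only: active pixels, no blanking, no protocol overhead.
def raw_gbps(width, height, refresh_hz, bits_per_pixel=30):
    """Uncompressed active-pixel data rate in Gbit/s."""
    return width * height * refresh_hz * bits_per_pixel / 1e9

# Assumption (not from the article): DisplayPort 1.4 HBR3 payload rate,
# i.e. 32.4 Gbit/s raw with 8b/10b coding.
ASSUMED_LINK_PAYLOAD_GBPS = 25.92

modes = {
    "4K 240 Hz": (3840, 2160, 240),
    "8K 60 Hz": (7680, 4320, 60),
}

for name, (w, h, hz) in modes.items():
    rate = raw_gbps(w, h, hz)
    ratio = rate / ASSUMED_LINK_PAYLOAD_GBPS
    print(f"{name}: {rate:.2f} Gbit/s uncompressed, "
          f"{ratio:.2f}x the assumed link payload")
```

Both modes work out to the same pixel rate, and under the assumed link both exceed the payload by more than a factor of two, which is consistent with the display engine relying on DSC to fit them on a single cable.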

Source: Phoronix

39 Comments on AMD "Renoir" APU to Support LPDDR4X Memory and New Display Engine

Inflection - new process tech, new CPU core - doesn't quite describe Picasso.

(or am I excited over nothing?)

Intel CPUs are basically the No. 1 choice when it comes to office PCs, home servers, work servers, and workstations.

The iGPU is only needed to drive a display; it doesn't have to handle any real workload. We are buying things like the 8700K and 9900K not because they are Intel, but because they are powerful and have an iGPU.

I wish AMD added an iGPU to their high-end models - NOT FOR GAMING.

While AMD is doing a good job of providing 4-core APUs, they are based on the previous generation, which is not acceptable in my opinion.

There is definitely a sector where low-end gaming on an iGPU is a thing, and AMD APUs have that sector locked down right now with the 2200G/2400G/3200G/3400G. It is their decision to produce as few different dies as possible, and the APU lineup is a result of that. Bigger CPUs without an iGPU are also a clear decision: they would have needed a smaller iGPU for those, or using Vega would have pushed the die size too large.

Adding an iGPU to the processor (not for gaming) is a waste of space. There are so many cheap discrete cards.

Considering the price of the 9900K and its capability in the server market, I strongly disagree that this is the CPU to go for (just because it has an iGPU). With its limited PCI-e lanes, I don't think so.

So basically I disagree with his post.

HDMI 2.0 you can get from a GT 1030 at 70€; a GPU with DisplayPort 1.2 can be had for about 90€.

For this reason, they should use a monolithic topology.

Unless you go APU and call it a day. But this is for the server market, so a 3800X is more than enough for a home workstation or home server, it's cheaper, and the difference in performance between the 9900K and 3800X is negligible.

1440p or 2160p monitors are pretty commonplace, usually with a DisplayPort 1.2 connector.

Prices do differ between regions, I suppose. In EU 9900K can be had for 475€, 3800X is 389€ and 3700X is 335€.

Yeah monolithic design is definitely what I'd prefer as well, but then we'll have to see the economics of that.

I actually think that getting the CPU portion of the power draw down is more important than the Vega IGP, so if Renoir APUs are 7nm Zen2 cores and 12nm IGP and IO, they'll be fine.

Ideally, the whole thing will be at 7nm, but with the 2700U I bought myself in February, and with some STAPM power-budget tweaking thanks to modified BIOSes and RyzenAdj, I can say for certain that the 4C/8T Raven Ridge CPU under load can comfortably use 15-18W and will never consume less than 5W in a normal system. The Vega10 IGP will add maybe 8-9W at full load and peak clocks, but it rarely gets to run at those speeds because most laptop OEMs set a STAPM limit of somewhere between 15-25W (20W in my case). That means that before long, the CPU is using the lion's share of the power budget and the Vega cores are throttled back to 25% of their ideal clocks, despite being the more important part of the equation when the IGP is active in a 3D or GPGPU compute application.

At 15-18W potential peak CPU core usage, the IGP really suffers in a Ryzen APU, and most people will be buying Mobile Ryzen for the Vega cores; otherwise they'd just get an Intel with worse graphics but better battery life. In the case of the 15W ultrabooks, the IGP is throttled down to pointless speeds. In the case of 20W and 25W models, the IGP is running sub-optimally if the CPU is busy. TSMC's 7nm seems to have huge efficiency gains, so even if ONLY the CPU cores in Renoir were moved to 7nm, that 15-18W CPU peak draw could drop down to maybe 10-12W, leaving far more headroom for the IGP to do its thing in the ideal 15W power budget.

My preference would be for AMD to better balance their APU power budget in hardware so that the Vega cores get first dibs on whatever power is available. It's actually self-balancing, because if the CPU clocks drop too far from IGP greed, the IGP will stall and free up power budget for the CPU. The current CPU-first implementation doesn't really work. The CPU clocks up and overfeeds the IGP which then rejects frames because it can't process them fast enough. It's a stupid waste :(
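To put rough numbers on the power-budget argument above, here is a minimal sketch of the leftover-power arithmetic. It only uses the wattages quoted in this post; the simple "limit minus CPU draw" model and the function name `igp_headroom` are illustrative assumptions, not how STAPM actually arbitrates power, and the 10-12W figure for a 7nm CPU is the guess stated above, not a measurement.

```python
def igp_headroom(stapm_limit_w, cpu_draw_w):
    """Power left for the IGP after the CPU takes its share (never negative).

    Hypothetical CPU-first model: the CPU is served before the IGP.
    """
    return max(0.0, stapm_limit_w - cpu_draw_w)

IGP_FULL_LOAD_W = (8, 9)  # Vega10 IGP at full load, as quoted in the post

# (STAPM limit in W, (CPU peak draw low, CPU peak draw high) in W)
scenarios = {
    "Raven Ridge, 20 W limit": (20, (15, 18)),
    "Raven Ridge, 15 W limit": (15, (15, 18)),
    "7nm CPU guess, 15 W limit": (15, (10, 12)),
}

for name, (limit, (cpu_lo, cpu_hi)) in scenarios.items():
    worst = igp_headroom(limit, cpu_hi)  # CPU at the top of its range
    best = igp_headroom(limit, cpu_lo)   # CPU at the bottom of its range
    print(f"{name}: {worst:.0f}-{best:.0f} W left for the IGP "
          f"(wants {IGP_FULL_LOAD_W[0]}-{IGP_FULL_LOAD_W[1]} W)")
```

Even in the optimistic 7nm case the IGP would still sit below its 8-9W full-load draw under this model, which is why the ordering of who gets power first matters as much as the process node.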