Mar 30th, 2025 04:01 EDT

Latest GPU Drivers

New Forum Posts

- Windows 10 Vs 11, Which one to choose? (125)

- Can you guess Which game it is? (19)

- Did Nvidia purposely gimp the performance of 50xx series cards with drivers (126)

- RTX 5090 very slow while rendering or video/photo editing. (8)

- What are you playing? (23313)

- 13500 or 14500 or 12700 or 12700k (0)

- GPU Crashing System From Hibernation (10)

- Upgrade from an AMD AM3+ to AM4 or AM5 chipset MB running W10? (61)

- PCI 4.0 16x slot reported as a PCI 5.0 8x with the AMD 9070 XT Reaper GPU (39)

- The TPU UK Clubhouse (26006)

Popular Reviews

- Sapphire Radeon RX 9070 XT Pulse Review

- ASRock Phantom Gaming B850 Riptide Wi-Fi Review - Amazing Price/Performance

- Samsung 9100 Pro 2 TB Review - The Best Gen 5 SSD

- Palit GeForce RTX 5070 GamingPro OC Review

- Assassin's Creed Shadows Performance Benchmark Review - 30 GPUs Compared

- Sapphire Radeon RX 9070 XT Nitro+ Review - Beating NVIDIA

- ASRock Radeon RX 9070 XT Taichi OC Review - Excellent Cooling

- Enermax REVOLUTION D.F. 12 850 W Review

- AMD Ryzen 7 9800X3D Review - The Best Gaming Processor

- AMD Ryzen 9 9950X3D Review - Great for Gaming and Productivity

Controversial News Posts

- AMD RDNA 4 and Radeon RX 9070 Series Unveiled: $549 & $599 (260)

- MSI Doesn't Plan Radeon RX 9000 Series GPUs, Skips AMD RDNA 4 Generation Entirely (142)

- Microsoft Introduces Copilot for Gaming (124)

- AMD Radeon RX 9070 XT Reportedly Outperforms RTX 5080 Through Undervolting (119)

- NVIDIA Reportedly Prepares GeForce RTX 5060 and RTX 5060 Ti Unveil Tomorrow (115)

- Over 200,000 Sold Radeon RX 9070 and RX 9070 XT GPUs? AMD Says No Number was Given (100)

- NVIDIA GeForce RTX 5050, RTX 5060, and RTX 5060 Ti Specifications Leak (96)

- Retailers Anticipate Increased Radeon RX 9070 Series Prices, After Initial Shipments of "MSRP" Models (90)

Monday, August 31st 2020

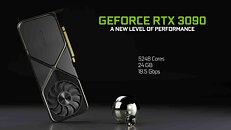

Performance Slide of RTX 3090 Ampere Leaks, 100% RTX Performance Gain Over Turing

NVIDIA's performance expectations for the upcoming GeForce RTX 3090 "Ampere" flagship graphics card underline a massive generation-over-generation RTX performance gain. Measured at 4K UHD with DLSS enabled on both cards, the RTX 3090 is shown offering a 100% performance gain over the RTX 2080 Ti in "Minecraft RTX," a greater than 100% gain in "Control," and a close to 80% gain in "Wolfenstein: Youngblood." NVIDIA's GeForce "Ampere" architecture introduces second-generation RTX, according to leaked Gainward spec sheets. This could entail not just more ray-tracing hardware, but also higher IPC for the RT cores. The spec sheets also refer to third-generation Tensor cores, which could enhance DLSS performance.
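A "100% gain" means the newer card renders twice the frame rate of the older one, not 100 FPS more. A minimal sketch of that arithmetic follows; the function name and the 30 FPS baseline are hypothetical, since the leaked slide's absolute frame rates are not given here.

```python
def gain_to_fps(baseline_fps: float, gain_percent: float) -> float:
    """Frame rate after applying a percentage gain over a baseline card."""
    return baseline_fps * (1.0 + gain_percent / 100.0)


# Hypothetical 30 FPS baseline on the older card.
print(gain_to_fps(30.0, 100.0))  # 60.0, i.e. double the baseline
print(gain_to_fps(30.0, 80.0))   # roughly 54 FPS
```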

Source: yuten0x (Twitter)

131 Comments on Performance Slide of RTX 3090 Ampere Leaks, 100% RTX Performance Gain Over Turing

VR and RT are rather comparable. It's not even a weak comparison, as @lexluthermiester thinks. They are both add-ons to the base gaming experience. They both require additional hardware. They both require a specific performance floor to be enjoyable. They both require additional developer effort while no gameplay (length, content) is added; in fact, they actually encourage shorter playtime, as more development time is lost on other things.

Effectively, while it was touted as a massive time saver, RTRT does impact development time to market, and VR does the exact same thing. It adds base cost to any game that is to be developed for a broad market and multiple input devices. It's a step harder than the ones we had until now, too. Somehow Jensen is selling the idea that this will at some point pay off for devs, but there is no proof of that yet. The games with RT don't sell more because of it. The vast majority of players can't use the features. I believe BF V was one of the least-played Battlefields in recent times, for example. RT didn't help it. Metro Exodus... same thing. Cyberpunk will again be more of the same. None of these games sold on their RT features, and none of them added playtime for players due to that either. I've not seen a single person saying he'd replay Control just to gaze at reflections again.

The chicken/egg situation is similar between these technologies, they both apply to gaming, and they both, still, are only in view for a niche of the PC gaming market.

Anyone thinking this will gain traction now or in the next two years is still deluding himself. It wasn't that way with Turing, and it hasn't changed since. Not one bit. HL: Alyx also didn't become the catalyst to mass VR adoption, did it?

Bottom line... the RT hype train should not be boarded just yet, if you ask me. Especially not when the hardware is apparently forcing so many sacrifices from us: financially, in heat and size, and in raw raster performance growth. I'll take a software solution any day of the week, and we know they exist. CryEngine has a neat implementation; give me that instead. It's cheap, simple, and can 100% utilize existing hardware, while the visual difference is minimal at best.

I'm sure Nvidia still refines their training, but the results of that can be delivered in a driver update, they're not blocking anything anymore.

TPU's comment section feels like a joke sometimes.

We all knew RT is only used for part of the rendering, but you're making a joke out of yourself by acting like RT is a bad thing and developers should stick to rasterization forever because it somehow gives better results in your ignorant mind.

Try playing a racing game with VR and suddenly you can't go back anymore...

I've said it before, I'll say it again: bolting RT on top of rasterization is like a hybrid car: you still have to source the materials for everything and you get more things that can go wrong. That doesn't mean an electric car (or going just RT) is a bad idea.

What a time to be alive...

RT is no different. I think many can see the benefit, and so do I, but they question whether it is feasible. I'm kind of on the same train of thought when it comes to the current implementation (brute forcing) of RT. The whole rasterized approach was based on the idea that brute forcing everything was never going to work out well. I don't see why this has changed. If you zoom out a bit, we see all sorts of metrics are strained: die size and cost, wafer and production capacity versus demand, market adoption problems... And, more recently, the state of the world economy, the climate, and whether growth is always a good thing. None of that supports making bigger dies to do mostly the same things.

But yeah, been over this previously... let's see that momentum in the delivery of working RT in products. So far... it's too quiet. A silence similar to that of an electric car ;)

Nobody considered it flawed until Jensen launched RTX and said it was. A good thing to keep in mind. Maybe devs did, but end users never really cared. A demand was created where there wasn't one. It's good business before anything else.

Also, please dig out a part of this demo that would benefit from RTRT:

Unlike EVs (which I also feel were pushed too soon, too hard), there is a demand for RTRT, even if it's not all about games. As for RTRT adoption, two years ago nobody was talking about it, today RTRT is in both Nvidia and (soon to be released) AMD silicon. Windows and both major consoles. That's a pretty good start if you ask me.

Of course, there is a very solid argument to be made that the value of RTRT in RTX 20xx GPUs has so far been minimal, but expecting that to stay true in the future comes off as naive. Sure, UE5 does great things with software-based quasi-RT rather than hardware RTRT, but even UE4 has optional support for RTRT, so there's no doubt UE5 will do the same. There's also no knowing whether the techniques Epic have shown off for UE5 translate to RTRT's strengths, such as realistic reflections. Not to mention RTRT support in the upcoming consoles guaranteeing its proliferation in next-gen console titles.

Will most games in two years be RTRT? Of course not. Developers can't master new techniques that quickly at scale. But slowly and surely, more and more games will add various RTRT lighting and reflection techniques to increase visual fidelity, which is a good thing for all of us. Is this a revolution? Not in and of itself, as rasterization will likely be the basis for the vast majority of games for at least the next decade. But rasterization and RTRT can be combined with excellent results. RTRT obviously isn't necessary or even suited for all types of games or visual styles, and comes with an inherent performance penalty (at least until GPU makers find a way of overcoming this, which might never happen), but it has a place, just like all the other tools in the game developer's bag of tricks.

wait...

This was the case when Jensen sold his 10 gigarays for the PC environment, and it seems to be the case for the next console gen in that environment. One might say that actual adoption is only going to start now, and Turing was a proof of concept at best. That also applies to the games we've seen with RT. Very controlled environments. Linearity or a relatively small map, or a big one (Exodus), but then all you get is a GI pass... Stuff you can optimize and tweak almost per scene and sequence. So far... I'm just not convinced. Maybe it is good for a first gen... but the problem is, we've been paying the premium ever since with little to show for it. Come Ampere, you have no option to avoid it anymore, even if there is no content at all that you want to play.

@Valantar great point about the difference with VR hardware adoption because yes, this is absolutely true. But don't underestimate the capability of people to ride it out when they see no reason to purchase something new. GPUs can last a looong time. Again, a car analogy fits here. Look at what ancient crap you still see on the road. And for many of the same reasons: mostly emotional, and financial, and some functional: we like that engine sound, the cancerous smell, etc. :)

You only need to look at the sentiment on this forum. I don't think people are really 'anti RT'. They just don't want to pay more for it and if they do, it better be worth it.

I do agree that RTX seemed rushed, but then again that's the chicken and egg problem of a high-profile GPU feature - you won't get developer support until hardware exists, and people won't buy hardware until it can be used, so ... early adopters are always getting the short end of the stick. I don't see any way to change that, sadly, but as I said above I expect RTRT proliferation to truly take off within two years of the launch of the upcoming consoles. Given that the XSX uses DXR, code for that can pretty much be directly used in the PC port, dramatically simplifying cross-platform development.

In the beginning I was a serious RTX skeptic, and I still think the value proposition of those features has been downright terrible for early RTX 20xx buyers, but I've held off on buying a new GPU for a while now mainly because I want RTRT in an AMD GPU - as you say, GPUs can be kept for a long time, and with my current one serving me well for five years I don't want to be left in the lurch in a couple of years by buying something lacking a major feature. A 5700 or 5700 XT would have been a massive upgrade over my Fury X, but if I'd bought one of those last year or earlier this year I know I would have regretted doing so within a couple of years. I'd rather once again splurge on a high-end card and have it last me 3-5 years. By which time I think I will have gotten more than my money's worth in RT-enabled games.

:D

Those TFLOPS numbers are multiplied by 2 because of 2 operations per shader, but I remember that when the GeForce 2 GTS was launched there was a similar 2 ops per pixel, and real performance was way lower than theoretical performance.
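The "multiplied by 2" comes from the usual peak-throughput arithmetic: shader count times operations per clock times clock speed. A minimal sketch, assuming that conventional formula; the function name and the example card (1000 shaders at 2.0 GHz) are hypothetical, and the result is only a theoretical ceiling, which is exactly why real performance can land well below it.

```python
def peak_tflops(shader_count: int, boost_clock_ghz: float, ops_per_clock: int = 2) -> float:
    """Theoretical peak throughput in TFLOPS.

    Assumes every shader retires `ops_per_clock` floating-point operations
    per cycle at the boost clock, with no stalls or memory bottlenecks.
    """
    gflops = shader_count * ops_per_clock * boost_clock_ghz
    return gflops / 1000.0


# Hypothetical card: 1000 shaders at 2.0 GHz.
print(peak_tflops(1000, 2.0))                   # 4.0
print(peak_tflops(1000, 2.0, ops_per_clock=1))  # 2.0
```

Halving `ops_per_clock` halves the headline figure without changing the silicon, which is why the per-shader operation count matters so much when comparing marketing numbers across generations.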

But that was one good presentation, Jensen and Nvidia marketing is top-notch. And the prices are decent this time.

To go further, based on the earlier trailers of the game, before the geometric detail was somewhat castrated in areas: I want the full-polygon, 4K, max-detail experience with RTRT, so just give me a target year to wait for. I'm thinking a decade is in the ballpark, short of some huge advancements. That said, things like VRS could skew and compromise things a touch and alter the situation, but that isn't what I'm asking for; I don't want image quality compromises. Though I definitely believe those kinds of advancements will make RTRT more widely and easily available at a pretty progressive pace, especially in these earlier generations where the tech is in its infancy and relatively small improvements can lead to huge perceived improvements. DLSS, for example, is definitely becoming more intricate and usable, and I strongly believe that, combined with VRS, it'll become harder and harder to discern the difference while performance improves by applying post-process upscaling in a VRS-like manner.

That's the crux of the problem: a continuation of an already disheartening situation compounds the issue further. That's why, in some respects, AMD's RDNA is a slight reprieve, but definitely not perfect. We all know that Nvidia in general has more resources to deliver a more reliable, seamless, and less frustrating experience than AMD is financially capable of at present. AMD has often got solid enough hardware, but lacks the dedicated resources to provide an equally pain-free experience, so to speak; it lacks that extra layer of polish. That is in large part due to a lack of resources and budget, combined with the debt that was taken on and mismanaged under its previous CEO, but Lisa Su has really been correcting a lot of those things over time.

I believe we'll see stronger advancement from AMD going forward under her leadership, in both the CPU and GPU divisions, though I feel the GPU side won't fully get the push it needs until AMD's lead on the CPU side diminishes. I see it as more of a fallback pathway if they can't maintain CPU dominance; if they can, they'll likely continue on that pathway while making incremental progress with GPUs. I feel that applies in both directions, because that's how they are diversified at present.

As for the rest, you're mixing up fully path-traced games (Quake RTX) with other titles with various RT implementations in lighting, reflections, etc. Keep your arguments straight if you want them taken seriously: you can't complain about the visual fidelity of showcase fully path-traced games and use them as an example of mixed-RT-and-rasterization games not looking good. That makes no sense. I mean, sure, there will always be a performance penalty to RTRT, at least until hardware makers scale up RTRT hardware to "match". But does that include sacrificing visual fidelity? That depends on the implementation. As for you insisting on no-holds-barred, full-quality renders all the time... well, that's what the adage "work smart, not hard" is for. If there is no perceptible difference in fidelity, what is the harm in using smarter rendering techniques to increase performance? Is there some sort of rule saying that straightforward, naïve rendering techniques are better somehow? It sounds like you need to start trusting your senses rather than creating placebo effects for yourself: if you can't tell the difference in a blind comparison test, the most performant solution is obviously better. Do you refuse to use tessellation too? Do you demand that developers render all lighting and shadows on geometry in real time rather than pre-baking it? You have clearly drawn an arbitrary line which you have defined as "real rendering", and are taking a stand for it for some reason that is frankly beyond me. Relax and enjoy your games, please.

As for a "target year" for full-quality 4K RTRT: likely never. Why? Because games are a moving target. New hardware means game developers will push their games further. There is no such thing as a status quo in performance requirements.

The only real RT I've seen is Quake and the marble demo. Look at all the videos released yesterday:

If I didn't tell the average person, would anyone even know RT was on? Does Watch Dogs look better? No. Does the Cyberpunk video impress more than the earlier Cyberpunk videos? No. Even when you are watching RT videos, the quality is coming from the rasterization, while RT is almost doing nothing but tanking your framerate. Some people are gullible.

Did Horizon 2 look better or worse than everything we saw yesterday? Exactly.

People forget Battlefield 5 had the most advanced implementation of RT. And everyone, including me, turned it off. Speedy optimization and art > reality and accuracy in gaming.

It was kind of funny: a lot of the partners had marketing material all ready to go, and some even published it, with the "half the CUDA core numbers", so yeah, wait for reviews. The positive thing for me with the RTX 3000 release is that they doubled down on rasterization performance instead of putting everything into RT. It's a bit of a return to form.