Wednesday, October 28th 2020

AMD Announces the Radeon RX 6000 Series: Performance that Restores Competitiveness

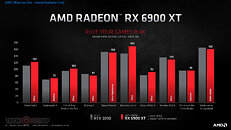

AMD (NASDAQ: AMD) today unveiled the AMD Radeon RX 6000 Series graphics cards, delivering powerhouse performance, incredibly life-like visuals, and must-have features that set a new standard for enthusiast-class PC gaming experiences. Representing the forefront of extreme engineering and design, the highly anticipated AMD Radeon RX 6000 Series includes the AMD Radeon RX 6800 and Radeon RX 6800 XT graphics cards, as well as the new flagship Radeon RX 6900 XT - the fastest AMD gaming graphics card ever developed.

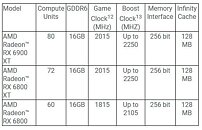

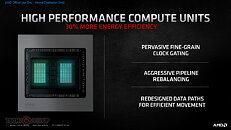

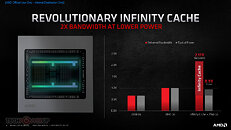

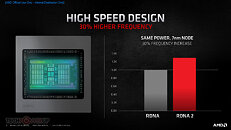

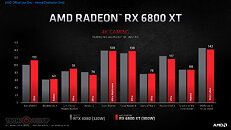

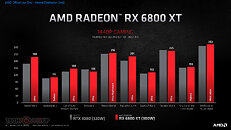

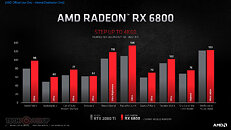

AMD Radeon RX 6000 Series graphics cards are built upon groundbreaking AMD RDNA 2 gaming architecture, a new foundation for next-generation consoles, PCs, laptops and mobile devices, designed to deliver the optimal combination of performance and power efficiency. AMD RDNA 2 gaming architecture provides up to 2X higher performance in select titles with the AMD Radeon RX 6900 XT graphics card compared to the AMD Radeon RX 5700 XT graphics card built on AMD RDNA architecture, and up to 54 percent more performance-per-watt when comparing the AMD Radeon RX 6800 XT graphics card to the AMD Radeon RX 5700 XT graphics card using the same 7 nm process technology.

AMD RDNA 2 offers a number of innovations, including applying advanced power saving techniques to high-performance compute units to improve energy efficiency by up to 30 percent per cycle per compute unit, and leveraging high-speed design methodologies to provide up to a 30 percent frequency boost at the same power level. It also includes new AMD Infinity Cache technology that offers up to 2.4X greater bandwidth-per-watt compared to GDDR6-only AMD RDNA-based architectural designs.

"Today's announcement is the culmination of years of R&D focused on bringing the best of AMD Radeon graphics to the enthusiast and ultra-enthusiast gaming markets, and represents a major evolution in PC gaming," said Scott Herkelman, corporate vice president and general manager, Graphics Business Unit at AMD. "The new AMD Radeon RX 6800, RX 6800 XT and RX 6900 XT graphics cards deliver world class 4K and 1440p performance in major AAA titles, new levels of immersion with breathtaking life-like visuals, and must-have features that provide the ultimate gaming experiences. I can't wait for gamers to get these incredible new graphics cards in their hands."

Powerhouse Performance, Vivid Visuals & Incredible Gaming Experiences

AMD Radeon RX 6000 Series graphics cards support high-bandwidth PCIe 4.0 technology and feature 16 GB of GDDR6 memory to power the most demanding 4K workloads today and in the future. Key features and capabilities include:

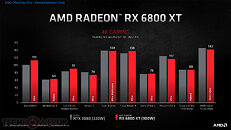

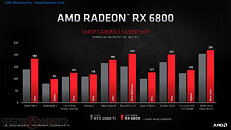

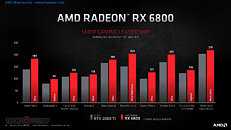

Powerhouse Performance

- AMD Infinity Cache - A high-performance, last-level data cache suitable for 4K and 1440p gaming with the highest level of detail enabled. 128 MB of on-die cache dramatically reduces latency and power consumption, delivering higher overall gaming performance than traditional architectural designs.

- AMD Smart Access Memory - An exclusive feature of systems with AMD Ryzen 5000 Series processors, AMD B550 and X570 motherboards and Radeon RX 6000 Series graphics cards. It gives AMD Ryzen processors greater access to the high-speed GDDR6 graphics memory, accelerating CPU processing and providing up to a 13-percent performance increase on an AMD Radeon RX 6800 XT graphics card in Forza Horizon 4 at 4K when combined with the new Rage Mode one-click overclocking setting.

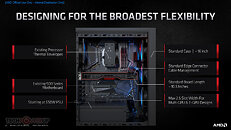

- Built for Standard Chassis - With a length of 267 mm and two standard 8-pin power connectors, the cards are designed to operate with existing enthusiast-class 650 W-750 W power supplies, so gamers can easily upgrade their existing PCs, from large to small form factor, without additional cost.

- DirectX 12 Ultimate Support - Provides a powerful blend of raytracing, compute, and rasterized effects, such as DirectX Raytracing (DXR) and Variable Rate Shading, to elevate games to a new level of realism.

- DirectX Raytracing (DXR) - Adding a high performance, fixed-function Ray Accelerator engine to each compute unit, AMD RDNA 2-based graphics cards are optimized to deliver real-time lighting, shadow and reflection realism with DXR. When paired with AMD FidelityFX, which enables hybrid rendering, developers can combine rasterized and ray-traced effects to ensure an optimal combination of image quality and performance.

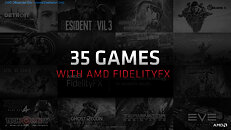

- AMD FidelityFX - An open-source toolkit for game developers available on AMD GPUOpen. It features a collection of lighting, shadow and reflection effects that make it easier for developers to add high-quality post-process effects that make games look beautiful while offering the optimal balance of visual fidelity and performance.

- Variable Rate Shading (VRS) - Dynamically reduces the shading rate for different areas of a frame that do not require a high level of visual detail, delivering higher levels of overall performance with little to no perceptible change in image quality.

- Microsoft DirectStorage Support - Future support for the DirectStorage API enables lightning-fast load times and high-quality textures by eliminating storage API-related bottlenecks and limiting CPU involvement.

- Radeon Software Performance Tuning Presets - Simple one-click presets in Radeon Software help gamers easily extract the most from their graphics card. The presets include the new Rage Mode stable overclocking setting that takes advantage of extra available headroom to deliver higher gaming performance.

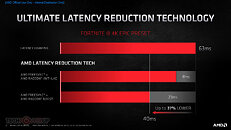

- Radeon Anti-Lag - Significantly decreases input-to-display response times and offers a competitive edge in gameplay.

In the coming weeks, AMD will release a series of videos from its ISV partners showcasing the incredible gaming experiences enabled by AMD Radeon RX 6000 Series graphics cards in some of this year's most anticipated games. These videos can be viewed on the AMD website.

- DIRT 5 - October 29

- Godfall - November 2

- World of Warcraft: Shadowlands - November 10

- RiftBreaker - November 12

- Far Cry 6 - November 17

- AMD Radeon RX 6800 and Radeon RX 6800 XT graphics cards are expected to be available from global etailers/retailers and on AMD.com beginning November 18, 2020, for $579 USD SEP and $649 USD SEP, respectively. The AMD Radeon RX 6900 XT is expected to be available December 8, 2020, for $999 USD SEP.

- AMD Radeon RX 6800 and RX 6800 XT graphics cards are also expected to be available from AMD board partners, including ASRock, ASUS, Gigabyte, MSI, PowerColor, SAPPHIRE and XFX, beginning in November 2020.

394 Comments on AMD Announces the Radeon RX 6000 Series: Performance that Restores Competitiveness

mGPU tries to deal with this by setting bitmasks to keep certain tasks on a single GPU and an abstracted copy engine to reduce coherency requirements, but it comes down to the developer needing to explicitly manage that at the moment.

It is also not the same pipeline state object between GCN and RDNA2; RDNA2 can prioritise compute and can stop the graphics pipeline entirely. Since GPUs are large latency-hiding devices, I think this would give us the time needed to seam the images back into one before the timestamp is missed, but I'm rambling.

www.hardwaretimes.com/amd-navi-vs-vega-a-look-at-the-rdna-graphics-architecture/
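To make the bitmask idea from the mGPU comment above concrete, here is a minimal sketch in plain Python. It does not call any graphics API; it only assumes the common convention that bit i of a mask stands for GPU i. The function names `node_mask` and `runs_on` and the example task names are hypothetical, chosen for illustration.

```python
# Illustrative only: models "one bit per GPU" masks, not a real graphics API.

def node_mask(*gpu_indices: int) -> int:
    """Build a mask with one bit set for each GPU index given."""
    mask = 0
    for index in gpu_indices:
        mask |= 1 << index
    return mask


def runs_on(mask: int, gpu_index: int) -> bool:
    """Return True if the mask includes the given GPU."""
    return bool(mask & (1 << gpu_index))


# Hypothetical tasks: each is pinned to a single GPU by its mask.
shadow_pass = node_mask(0)         # GPU 0 only
post_process = node_mask(1)        # GPU 1 only
# A resource both GPUs must see has both bits set.
shared_resource = node_mask(0, 1)

print(runs_on(shadow_pass, 1))      # shadow pass never touches GPU 1
print(runs_on(shared_resource, 1))  # shared resource is visible to GPU 1
```

The point of the comment still stands: the masks themselves are simple, but deciding which work gets which mask is left to the developer.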

He said that this system would be great for 1080/1440p. And I said it could do 4K, unless he wants to stay at a lower res for high (100+) framerates.

All 3 current 6000 GPUs are meant for 4K and not 1080/1440p. That was the point...

I didn't speak numbers, but that's what I meant.

A price war is only effective if you can keep gaining market share from the competitor. With Ryzen it works. But in the GPU world, what has happened over the past 10 years shows us that price wars are ineffective against Nvidia. And I have seen that when Nvidia starts retaliating with a price war, the one that ends up giving up first is AMD.

There will in all likelihood be later articles diving into SAM and similar features, and SAM articles are likely to include comparisons to both Ryzen 3000 and Intel setups, but those will necessarily be separate from the base review. Not least because testing like that would mean a massive increase in the work required: TPU's testing covers 23 games at three resolutions, so 69 data points (plus power and thermal measurements). Expand that to three platforms and you have 207 data points, though ideally you'd want to test Ryzen 5000 with SAM both enabled and disabled to single out its effect, making it 276 data points. Then there's the fact that there are three GPUs to test, and that one would want at least one RTX comparison GPU for each test. Given that reviewers typically get about a week to ready their reviews, there is no way that this could be done in time for a launch review.

That being said, I'm very much looking forward to w1zzard's SAM deep dive.
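The data-point arithmetic in the comment above can be checked with a short sketch. The game and resolution counts come from the comment. The helper name `data_points` is hypothetical, and the final three-GPU figure is an extrapolation of the comment's reasoning, not a number it states.

```python
# Figures taken from the comment: 23 games tested at three resolutions.
GAMES = 23
RESOLUTIONS = 3


def data_points(configs: int, gpus: int = 1) -> int:
    """Benchmark runs needed for a number of platform configs and GPUs."""
    return GAMES * RESOLUTIONS * configs * gpus


single_platform = data_points(1)   # one test platform
three_platforms = data_points(3)   # Ryzen 5000, Ryzen 3000, Intel
with_sam_toggle = data_points(4)   # Ryzen 5000 counted twice: SAM on and off

# Extrapolation (not stated in the comment): repeat for all three new GPUs.
three_gpus = data_points(4, gpus=3)

print(single_platform, three_platforms, with_sam_toggle, three_gpus)
# prints: 69 207 276 828
```

Adding an RTX comparison card for each of the three GPUs would double that last figure again, before counting the power and thermal measurements.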