Wednesday, October 28th 2020

AMD Announces the Radeon RX 6000 Series: Performance that Restores Competitiveness

AMD (NASDAQ: AMD) today unveiled the AMD Radeon RX 6000 Series graphics cards, delivering powerhouse performance, incredibly life-like visuals, and must-have features that set a new standard for enthusiast-class PC gaming experiences. Representing the forefront of extreme engineering and design, the highly anticipated AMD Radeon RX 6000 Series includes the AMD Radeon RX 6800 and Radeon RX 6800 XT graphics cards, as well as the new flagship Radeon RX 6900 XT - the fastest AMD gaming graphics card ever developed.

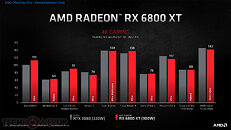

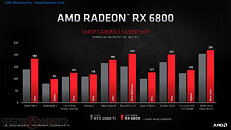

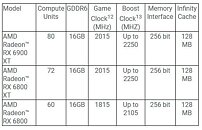

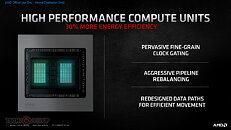

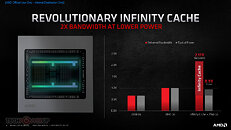

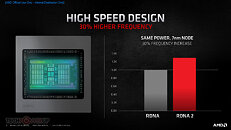

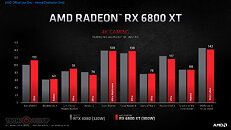

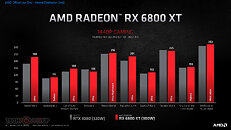

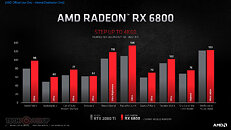

AMD Radeon RX 6000 Series graphics cards are built upon groundbreaking AMD RDNA 2 gaming architecture, a new foundation for next-generation consoles, PCs, laptops and mobile devices, designed to deliver the optimal combination of performance and power efficiency. AMD RDNA 2 gaming architecture provides up to 2X higher performance in select titles with the AMD Radeon RX 6900 XT graphics card compared to the AMD Radeon RX 5700 XT graphics card built on AMD RDNA architecture, and up to 54 percent more performance-per-watt when comparing the AMD Radeon RX 6800 XT graphics card to the AMD Radeon RX 5700 XT graphics card using the same 7 nm process technology.

AMD RDNA 2 offers a number of innovations, including applying advanced power saving techniques to high-performance compute units to improve energy efficiency by up to 30 percent per cycle per compute unit, and leveraging high-speed design methodologies to provide up to a 30 percent frequency boost at the same power level. It also includes new AMD Infinity Cache technology that offers up to 2.4X greater bandwidth-per-watt compared to GDDR6-only AMD RDNA-based architectural designs.
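As a rough illustration of what the quoted 54 percent performance-per-watt figure would imply, the arithmetic can be sketched in a few lines of Python. This is a simplified model that treats the "up to" figure as an exact ratio; the function name `relative_power` and the idealized scaling are assumptions made for illustration, not AMD's measurement methodology.

```python
def relative_power(perf_ratio: float, perf_per_watt_gain: float) -> float:
    """Power draw of the newer card relative to the older one.

    perf_ratio: newer performance divided by older performance (1.0 = equal).
    perf_per_watt_gain: fractional gain, e.g. 0.54 for "54 percent more".
    """
    return perf_ratio / (1.0 + perf_per_watt_gain)


# Best-case gain quoted for the RX 6800 XT over the RX 5700 XT.
GAIN = 0.54

# Matching the older card's performance: power needed relative to it.
power_at_equal_perf = relative_power(1.0, GAIN)

# Matching the older card's power: performance relative to it.
perf_at_equal_power = 1.0 + GAIN

print(f"Equal performance needs about {power_at_equal_perf:.0%} of the power")
print(f"Equal power yields about {perf_at_equal_power:.2f}x the performance")
```

Under these assumptions, the same gain can be read either way: roughly two thirds of the power at equal performance, or roughly one and a half times the performance at equal power. Real cards rarely sit at either extreme.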

"Today's announcement is the culmination of years of R&D focused on bringing the best of AMD Radeon graphics to the enthusiast and ultra-enthusiast gaming markets, and represents a major evolution in PC gaming," said Scott Herkelman, corporate vice president and general manager, Graphics Business Unit at AMD. "The new AMD Radeon RX 6800, RX 6800 XT and RX 6900 XT graphics cards deliver world class 4K and 1440p performance in major AAA titles, new levels of immersion with breathtaking life-like visuals, and must-have features that provide the ultimate gaming experiences. I can't wait for gamers to get these incredible new graphics cards in their hands."

Powerhouse Performance, Vivid Visuals & Incredible Gaming Experiences

AMD Radeon RX 6000 Series graphics cards support high-bandwidth PCIe 4.0 technology and feature 16 GB of GDDR6 memory to power the most demanding 4K workloads today and in the future. Key features and capabilities include:

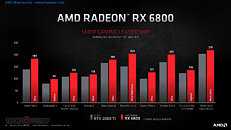

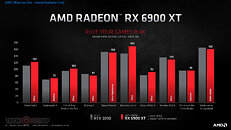

Powerhouse Performance

- AMD Infinity Cache - A high-performance, last-level data cache suitable for 4K and 1440p gaming with the highest level of detail enabled. 128 MB of on-die cache dramatically reduces latency and power consumption, delivering higher overall gaming performance than traditional architectural designs.

- AMD Smart Access Memory - An exclusive feature of systems with AMD Ryzen 5000 Series processors, AMD B550 and X570 motherboards and Radeon RX 6000 Series graphics cards. It gives AMD Ryzen processors greater access to the high-speed GDDR6 graphics memory, accelerating CPU processing and providing up to a 13-percent performance increase on an AMD Radeon RX 6800 XT graphics card in Forza Horizon 4 at 4K when combined with the new Rage Mode one-click overclocking setting.

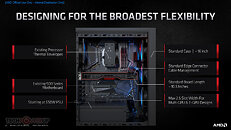

- Built for Standard Chassis - With a length of 267 mm and two standard 8-pin power connectors, and designed to operate with existing enthusiast-class 650 W-750 W power supplies, the cards let gamers easily upgrade their existing PCs, from large to small form factor, without additional cost.

- DirectX 12 Ultimate Support - Provides a powerful blend of raytracing, compute, and rasterized effects, such as DirectX Raytracing (DXR) and Variable Rate Shading, to elevate games to a new level of realism.

- DirectX Raytracing (DXR) - Adding a high performance, fixed-function Ray Accelerator engine to each compute unit, AMD RDNA 2-based graphics cards are optimized to deliver real-time lighting, shadow and reflection realism with DXR. When paired with AMD FidelityFX, which enables hybrid rendering, developers can combine rasterized and ray-traced effects to ensure an optimal combination of image quality and performance.

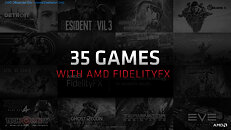

- AMD FidelityFX - An open-source toolkit for game developers available on AMD GPUOpen. It features a collection of lighting, shadow and reflection effects that make it easier for developers to add high-quality post-process effects that make games look beautiful while offering the optimal balance of visual fidelity and performance.

- Variable Rate Shading (VRS) - Dynamically reduces the shading rate for different areas of a frame that do not require a high level of visual detail, delivering higher levels of overall performance with little to no perceptible change in image quality.

- Microsoft DirectStorage Support - Future support for the DirectStorage API enables lightning-fast load times and high-quality textures by eliminating storage API-related bottlenecks and limiting CPU involvement.

- Radeon Software Performance Tuning Presets - Simple one-click presets in Radeon Software help gamers easily extract the most from their graphics card. The presets include the new Rage Mode stable overclocking setting that takes advantage of extra available headroom to deliver higher gaming performance.

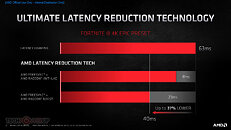

- Radeon Anti-Lag - Significantly decreases input-to-display response times and offers a competitive edge in gameplay.

In the coming weeks, AMD will release a series of videos from its ISV partners showcasing the incredible gaming experiences enabled by AMD Radeon RX 6000 Series graphics cards in some of this year's most anticipated games. These videos can be viewed on the AMD website.

- DIRT 5 - October 29

- Godfall - November 2

- World of Warcraft: Shadowlands - November 10

- RiftBreaker - November 12

- Far Cry 6 - November 17

- AMD Radeon RX 6800 and Radeon RX 6800 XT graphics cards are expected to be available from global etailers/retailers and on AMD.com beginning November 18, 2020, for $579 USD SEP and $649 USD SEP, respectively. The AMD Radeon RX 6900 XT is expected to be available December 8, 2020, for $999 USD SEP.

- AMD Radeon RX 6800 and RX 6800 XT graphics cards are also expected to be available from AMD board partners, including ASRock, ASUS, Gigabyte, MSI, PowerColor, SAPPHIRE and XFX, beginning in November 2020.

394 Comments on AMD Announces the Radeon RX 6000 Series: Performance that Restores Competitiveness

I always wait for the reviews, but these presentations do give some level of information and a comparison of how their products perform against the competition.

I can tell you now, you won't see much difference in performance between the 3070 and the 6800 in the reviews. If you do see a difference, I'm sure it will be in AMD's favor.

How it will turn out, we have yet to see. :)

Everything is correlated.

I will read the reviews, and if the 6800 is faster than the 3070 at a comparable price I will buy it when available. I am not going to replace my 3900X with a 5900X until later next year, so I need a review that doesn't use Smart Access Memory, at 1440p (my resolution for gaming).

You are completely turning the tables in order to hide your clearly baseless claim about Fortnite being played mostly by 14-year-old kids....

YOU started saying the 14 years old kids don't know or care what DLSS is (or on what planet they are), and I just pointed out that maybe Fortnite is also played by older guys that, according to your narrative, are more capable of understanding DLSS and RT.

Here to refresh your fading memory:

I don't think knowledge is age related. And I surely know 16-year-old kids smarter than you in this field.

Saying that 20-25 year old people absolutely know about DLSS is by far the most ridiculous and baseless thing said on here and it doesn't follow my narrative at all. I simply said the kind of people that care about this come from a very small niche. Your original claim that most people who play Fortnite would choose a GPU with DLSS is still not only ridiculous but not backed by anything at all. Again, you wanted to play this dumb game of showing definite proof and so far all your ideas have been debunked.

Criiiiiiiiiiiiiiiiiinge

Hype on the other hand, is presentation of a product without full scope of what it offers and blown out of proportion. For instance exaggeration of what the product can do, like performance.

At least that's how I take it.

English may not be my first language, but I wrote this:

That is quite different from "any 20-25 years old know about DLSS".

So maybe you should review your language skills, not just your statistics...

Let me know when you find data about 14-year-old Fortnite players. Until then, bye bye...

Online there are tons of videos about DLSS 2.0 quality.

1. Where are all the people congratulating AMD on 16GB of vram vs 10GB? It seems they're shitting on the AMD 6000 series and still leaning towards the RTX 3000 series. So RTX > 6GB of vram (which was a dealbreaker before)?

2. My 11-year-old asked for a new RTX card (he's on a GTX 1070 @ 1080p) when the Fortnite preview dropped on YouTube. Kids aren't as ignorant as you think, and marketing works.

He gon' be pissed when he gets my 5700XT hand-me-down. :D

It's obvious there are young tech enthusiasts who know what DLSS is, though at age 14 I sincerely doubt there are more than a handful of tech enthusiasts worldwide with the reasoning and temperament to not just be outright screaming fanboys and fangirls - that's not their fault, they just lack the biological and experiential basis for acting like reasonable adults. But still, inferring that "above a certain age anyone knows about [obscure technical term X]" is way, way out there.

As for Fortnite being full of 14-year-olds: that's a given. No, the majority aren't 14 - that would be really weird. But there are tons of kids playing it, and likely the majority of players are quite young. Add to that the broad popularity of the game, and it's safe to assume that the average Fortnite player has absolutely no idea what DLSS is. Heck, there are likely more people playing Fortnite on consoles and phones than people in the whole world who know what DLSS is.

I find it interesting that AMD says 650 W for the 6800, 750 W for the 6800 XT and 850 W for the 6900 XT, while all have 2x 8-pin and the latter two have the same TBP.

I guess it will be the same with the next consoles and videocards based on the same RDNA2 GPU.

And then there are eyes to see and brains to use, god forbid.

And even some articles to be found, with, god forbid, actual pics:

arstechnica.com/gaming/2020/07/why-this-months-pc-port-of-death-stranding-is-the-definitive-version/

At the end of the day, it is a cherry picking game:

DLSS 2.0 (these are shots from picture that hypes DLSS by the way):

But it demonstrates how delusional DLSS hypers are. Like any other image upscaling tech, it has its ups and downs.

With 2.0 it is largely the same as with TAA, which it is based on: it gets blurry, it wipes out small stuff, it struggles with quickly moving stuff.

Btw, those comparison shots look to have different DoF/focal planes; the one on top has sharper leaves but more blurry grass, the one on the bottom has the opposite. Makes it very difficult to make a neutral comparison.

Easy.

The Doom demo with the Ampere super-exclusive-totallynotforshilling-preview.

The stone that is at the same focal distance as the bush is sharper in the lower pic.

Seriously, do you really want to venture into "DLSS doesn't make things look worse"?

Put simply: if you have to do that, you also need a very good screen and to be actively looking for problems to spot them while playing. There is obviously a threshold below which these things do become visible, but for now, while I don't think DLSS is the second coming of Raptorjesus like some people do, it's a damn impressive technology nonetheless. The only major drawback is how complex its implementation is and thus how limited adoption is bound to be, as well as it being proprietary.

Heck, when I get a new monitor I'll be going for something like a 32" 2160p panel (need the sharpness for work), but I'll likely play most games at 1440p render resolution and let bog-standard GPU upscaling handle the rest. I'll likely not be able to tell much of a difference in-game, and the performance increase will be massive.
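To put rough numbers on that trade-off, here is a minimal sketch comparing pixel counts. It assumes the common 16:9 resolutions 3840x2160 for "2160p" and 2560x1440 for "1440p" (the comment above only gives the vertical figures), and the helper name `pixel_count` is made up for the example.

```python
def pixel_count(width: int, height: int) -> int:
    """Number of pixels rendered per frame at a given resolution."""
    return width * height


# Assumed 16:9 resolutions; only the vertical figures appear in the comment.
uhd = pixel_count(3840, 2160)   # "2160p" panel resolution
qhd = pixel_count(2560, 1440)   # "1440p" render resolution

# How many times more pixels the native panel resolution needs per frame.
ratio = uhd / qhd

print(f"2160p: {uhd:,} pixels per frame")
print(f"1440p: {qhd:,} pixels per frame")
print(f"Native 2160p renders {ratio:.2f}x as many pixels as 1440p")
```

Frame rate does not scale perfectly with pixel count, since part of each frame's cost is independent of resolution, so the 2.25x figure is better read as an upper bound on the likely gain than as a prediction.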