Wednesday, October 28th 2020

AMD Announces the Radeon RX 6000 Series: Performance that Restores Competitiveness

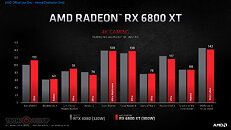

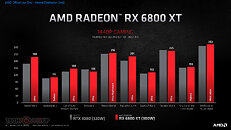

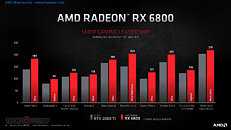

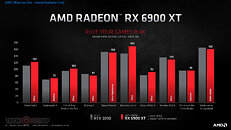

AMD (NASDAQ: AMD) today unveiled the AMD Radeon RX 6000 Series graphics cards, delivering powerhouse performance, incredibly life-like visuals, and must-have features that set a new standard for enthusiast-class PC gaming experiences. Representing the forefront of extreme engineering and design, the highly anticipated AMD Radeon RX 6000 Series includes the AMD Radeon RX 6800 and Radeon RX 6800 XT graphics cards, as well as the new flagship Radeon RX 6900 XT - the fastest AMD gaming graphics card ever developed.

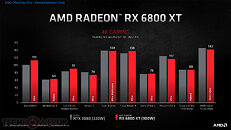

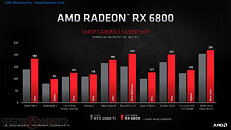

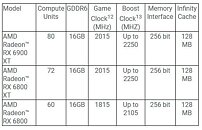

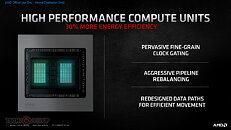

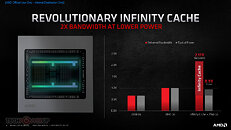

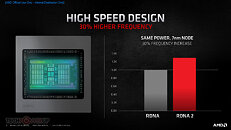

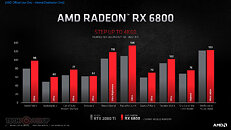

AMD Radeon RX 6000 Series graphics cards are built upon groundbreaking AMD RDNA 2 gaming architecture, a new foundation for next-generation consoles, PCs, laptops and mobile devices, designed to deliver the optimal combination of performance and power efficiency. AMD RDNA 2 gaming architecture provides up to 2X higher performance in select titles with the AMD Radeon RX 6900 XT graphics card compared to the AMD Radeon RX 5700 XT graphics card built on AMD RDNA architecture, and up to 54 percent more performance-per-watt when comparing the AMD Radeon RX 6800 XT graphics card to the AMD Radeon RX 5700 XT graphics card using the same 7 nm process technology.

AMD RDNA 2 offers a number of innovations, including applying advanced power saving techniques to high-performance compute units to improve energy efficiency by up to 30 percent per cycle per compute unit, and leveraging high-speed design methodologies to provide up to a 30 percent frequency boost at the same power level. It also includes new AMD Infinity Cache technology that offers up to 2.4X greater bandwidth-per-watt compared to GDDR6-only AMD RDNA-based architectural designs.

"Today's announcement is the culmination of years of R&D focused on bringing the best of AMD Radeon graphics to the enthusiast and ultra-enthusiast gaming markets, and represents a major evolution in PC gaming," said Scott Herkelman, corporate vice president and general manager, Graphics Business Unit at AMD. "The new AMD Radeon RX 6800, RX 6800 XT and RX 6900 XT graphics cards deliver world class 4K and 1440p performance in major AAA titles, new levels of immersion with breathtaking life-like visuals, and must-have features that provide the ultimate gaming experiences. I can't wait for gamers to get these incredible new graphics cards in their hands."

Powerhouse Performance, Vivid Visuals & Incredible Gaming Experiences

AMD Radeon RX 6000 Series graphics cards support high-bandwidth PCIe 4.0 technology and feature 16 GB of GDDR6 memory to power the most demanding 4K workloads today and in the future. Key features and capabilities include:

Powerhouse Performance

- AMD Infinity Cache - A high-performance, last-level data cache suitable for 4K and 1440p gaming with the highest level of detail enabled. 128 MB of on-die cache dramatically reduces latency and power consumption, delivering higher overall gaming performance than traditional architectural designs.

- AMD Smart Access Memory - An exclusive feature of systems with AMD Ryzen 5000 Series processors, AMD B550 and X570 motherboards and Radeon RX 6000 Series graphics cards. It gives AMD Ryzen processors greater access to the high-speed GDDR6 graphics memory, accelerating CPU processing and providing up to a 13-percent performance increase on an AMD Radeon RX 6800 XT graphics card in Forza Horizon 4 at 4K when combined with the new Rage Mode one-click overclocking setting.

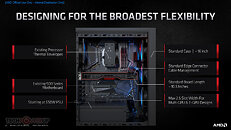

- Built for Standard Chassis - With a length of 267 mm and two standard 8-pin power connectors, the cards are designed to operate with existing enthusiast-class 650 W-750 W power supplies, so gamers can easily upgrade their existing large to small form factor PCs without additional cost.

- DirectX 12 Ultimate Support - Provides a powerful blend of raytracing, compute, and rasterized effects, such as DirectX Raytracing (DXR) and Variable Rate Shading, to elevate games to a new level of realism.

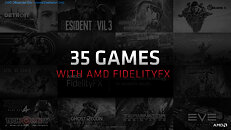

- DirectX Raytracing (DXR) - Adding a high performance, fixed-function Ray Accelerator engine to each compute unit, AMD RDNA 2-based graphics cards are optimized to deliver real-time lighting, shadow and reflection realism with DXR. When paired with AMD FidelityFX, which enables hybrid rendering, developers can combine rasterized and ray-traced effects to ensure an optimal combination of image quality and performance.

- AMD FidelityFX - An open-source toolkit for game developers available on AMD GPUOpen. It features a collection of lighting, shadow and reflection effects that make it easier for developers to add high-quality post-process effects that make games look beautiful while offering the optimal balance of visual fidelity and performance.

- Variable Rate Shading (VRS) - Dynamically reduces the shading rate for different areas of a frame that do not require a high level of visual detail, delivering higher levels of overall performance with little to no perceptible change in image quality.

- Microsoft DirectStorage Support - Future support for the DirectStorage API enables lightning-fast load times and high-quality textures by eliminating storage API-related bottlenecks and limiting CPU involvement.

- Radeon Software Performance Tuning Presets - Simple one-click presets in Radeon Software help gamers easily extract the most from their graphics card. The presets include the new Rage Mode stable overclocking setting that takes advantage of extra available headroom to deliver higher gaming performance.

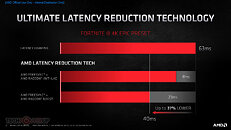

- Radeon Anti-Lag - Significantly decreases input-to-display response times and offers a competitive edge in gameplay.
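To give a rough sense of why the Variable Rate Shading item in the list above can raise performance, here is a minimal sketch of the arithmetic under a deliberately simplified model. It assumes that some fraction of the frame is shaded once per 2x2 pixel block instead of once per pixel; the function name, the 50 percent fraction and the 2x2 rate are illustrative assumptions, not AMD figures, and real hardware applies rates per tile with additional constraints.

```python
def shading_invocations(width, height, coarse_fraction, rate=(2, 2)):
    """Estimate pixel-shader invocations per frame under a simplified VRS model.

    coarse_fraction: share of the frame shaded at the coarse rate (hypothetical).
    rate: coarse shading rate as (x, y) pixels covered by one invocation.
    """
    total_pixels = width * height
    coarse_pixels = total_pixels * coarse_fraction
    full_rate_pixels = total_pixels - coarse_pixels
    pixels_per_invocation = rate[0] * rate[1]
    # Full-rate pixels cost one invocation each; coarse pixels share one per block.
    return full_rate_pixels + coarse_pixels / pixels_per_invocation


baseline = shading_invocations(3840, 2160, coarse_fraction=0.0)
with_vrs = shading_invocations(3840, 2160, coarse_fraction=0.5)
saved = 1 - with_vrs / baseline
print(f"4K invocations without VRS: {baseline:,.0f}")
print(f"4K invocations with VRS:    {with_vrs:,.0f}")
print(f"Shading work saved:         {saved:.1%}")
```

Under these assumptions, shading half of a 4K frame at a 2x2 rate removes 37.5 percent of the pixel-shader invocations. The actual frame-rate gain depends on how much of the frame time is spent in pixel shading.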

In the coming weeks, AMD will release a series of videos from its ISV partners showcasing the incredible gaming experiences enabled by AMD Radeon RX 6000 Series graphics cards in some of this year's most anticipated games. These videos can be viewed on the AMD website.

- DIRT 5 - October 29

- Godfall - November 2

- World of Warcraft: Shadowlands - November 10

- RiftBreaker - November 12

- Far Cry 6 - November 17

- AMD Radeon RX 6800 and Radeon RX 6800 XT graphics cards are expected to be available from global etailers/retailers and on AMD.com beginning November 18, 2020, for $579 USD SEP and $649 USD SEP, respectively. The AMD Radeon RX 6900 XT is expected to be available December 8, 2020, for $999 USD SEP.

- AMD Radeon RX 6800 and RX 6800 XT graphics cards are also expected to be available from AMD board partners, including ASRock, ASUS, Gigabyte, MSI, PowerColor, SAPPHIRE and XFX, beginning in November 2020.

394 Comments on AMD Announces the Radeon RX 6000 Series: Performance that Restores Competitiveness

AMD is literally going to have the consoles' entire catalog of games, which will be built and optimized for AMD's RDNA2 implementation of ray tracing.

Personally I think Nvidia was pushing it way too hard with ray tracing; they just needed a "new" feature to put on the marketing, without it actually being ready for practical use. In fact even the Ampere series is crap at rendering rays, and guess what, their next gen will be as well, same with AMD. We are realistically at least 5 years away from being able to properly trace rays in real time in games without making it extremely specific and cutting 9/10 corners. Right now ray tracing is literally just a marketing gimmick; it's extremely specific and limited.

If you actually did a fully ray-traced game with all of the caveats of actually tracing rays, and you had trillions of rays in the scenes, it would literally blow up existing GPUs. It's not possible to do it. It would render at something like 0.1 fps.

This is why they have to use cheats to make it work, and this is why it's only used on one specific thing, either shadows, reflections, or global illumination, etc., never all of them, and even then it's very limited. They limit the rays that get processed, so only the bare-bones rays get traced.

Again, we could have had a 100x better ray tracing implementation in 5 years, with full ray tracing capabilities that doesn't cut as many corners, that comes somewhat close to offline-rendered ray tracing, and that isn't essentially a gimmick that tanks your performance by 50%, and that is with very specific and very limited tracing. Again, if you actually did better ray tracing it would literally tank performance completely; you'd be running at less than 1 frame per second.

Copied from another thread:

"I can see there is a lot of confusion about the new feature AMD is calling "Smart Access Memory" and how it works. My 0.02 on the subject.

According to the presentation, the SAM feature can be enabled only on 500-series boards with a Zen 3 CPU installed. My assumption is that they use PCI-E 4.0 capabilities for this, but I'll get back to that.

The SAM feature has nothing to do with Infinity Cache. IC is used to compensate for the 256-bit bandwidth between the GPU and VRAM. That's it, end of story. And according to AMD this is the equivalent of an 833-bit bus. Again, this has nothing to do with SAM. IC is in the GPU and works the same way for all systems. They didn't say you have to do anything to "get it" to work. Whether it works with the same effectiveness in all games, we will have to see.

Smart Access Memory

They use SAM to give the CPU access to VRAM and probably speed things up a little on the CPU side. That's it. They said it in the presentation, and they showed it also...

And they can probably get this done because of PCI-E 4.0 speed capability. If true, that's why there is no 400-series support.

They also said that this feature may be better in future than it is today, once game developers optimize their games for it.

I think AMD just made PCI-E 4.0 (on their own platform) more relevant than it was until now!"
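The bus-width comparison in the quoted comment can be put into numbers with a short sketch. It uses the standard relation between bus width, per-pin data rate and peak bandwidth; the 16 Gbps GDDR6 data rate and the function name are assumptions that do not appear in the text above, and the 833-bit figure is the equivalence the commenter attributes to AMD, not a physical bus.

```python
def bus_bandwidth_gbs(bus_width_bits, data_rate_gbps):
    """Peak memory bandwidth in GB/s for a given bus width and per-pin data rate."""
    return bus_width_bits / 8 * data_rate_gbps


DATA_RATE_GBPS = 16.0  # assumed GDDR6 per-pin data rate, not stated in the article

physical = bus_bandwidth_gbs(256, DATA_RATE_GBPS)   # the actual 256-bit bus
effective = bus_bandwidth_gbs(833, DATA_RATE_GBPS)  # the claimed 833-bit equivalent

print(f"256-bit bus:           {physical:.0f} GB/s")
print(f"833-bit equivalent:    {effective:.0f} GB/s")
print(f"Claimed uplift factor: {effective / physical:.2f}x")
```

With that assumed data rate, the 256-bit bus peaks at 512 GB/s, and the claimed equivalence corresponds to roughly a 3.25x effective uplift. How close real games get to that figure depends on cache hit rates, which is the commenter's closing caveat about Infinity Cache.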

Full CPU access to GPU memory:

----------------------------------------------------------------------

So who knows better than AMD if the 16GB is necessary or not?

devblogs.microsoft.com/directx/announcing-microsoft-directx-raytracing/

DXR is M$'s

This is a very good time for the consumer in the PC industry!!

What I find most interesting is that the 6900XT might be even better than the 3090 at 8k gaming. Really looking forward to more tests with 8k panels like the one LTT did, but more fleshed out and expansive.

www.tweaktown.com/news/75952/amd-smart-access-memory-zen-3-rdna-2-intel-nvidia-destroyer/index.html

I guess it wouldn’t be 350+W, but still not 300W.

I’m not saying that what AMD has accomplished is not impressive. It is more than just impressive. And with that SAM feature on 5000-series CPUs plus 500-series boards, it might change the game.

And to clarify something, SAM will be available on all 500-series boards, not only X570. They use the PCI-E 4.0 interconnect between CPU and GPU to give the former access to VRAM. All 500-series boards have PCI-E 4.0 speed for GPUs.
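For context on the link speeds the comment refers to, here is a minimal sketch of the theoretical per-direction bandwidth of an x16 slot. It uses the published PCIe transfer rates (8 GT/s per lane for 3.0, 16 GT/s for 4.0) and 128b/130b encoding; the function name is illustrative, and whether SAM actually depends on PCI-E 4.0 speed is the commenter's assumption, not something the announcement states.

```python
def pcie_bandwidth_gbs(gt_per_s, lanes, encoding=128 / 130):
    """Theoretical one-direction PCIe bandwidth in GB/s.

    gt_per_s: raw transfer rate per lane in GT/s.
    encoding: payload fraction after line encoding (128b/130b for PCIe 3.0 and 4.0).
    """
    return gt_per_s * encoding * lanes / 8


gen3_x16 = pcie_bandwidth_gbs(8.0, 16)
gen4_x16 = pcie_bandwidth_gbs(16.0, 16)

print(f"PCIe 3.0 x16: {gen3_x16:.2f} GB/s")
print(f"PCIe 4.0 x16: {gen4_x16:.2f} GB/s")
```

Either figure is far below the bandwidth between the GPU and its own VRAM, so any CPU access to graphics memory over this link is limited by the slot rather than by the GDDR6.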

The path that nVidia has chosen does not allow them to implement such a cache. And I really doubt that they will abandon Tensor and RT cores in the future.

The thing is that we don’t know if increasing the actual bandwidth to 320 or 384 is going to scale well. You have to have stronger cores to utilize extra (normal or effective) bandwidth.

EDIT PS

and they have to redesign mem controller for wider bus = expensive and larger die.

It’s a no go...

But then again... 6800XT AIBs mean matching the 6900XT for less... (700~800+)

It’s complicated...

Second, remember Fermi: Nvidia put out a first generation of cards which were horrible, and then they iterated on the same node just 6 months later and fixed most of the problems.

The theoretical bandwidth shown on AMD's slides is just as theoretical as Ampere's TFLOPS.

Let's not get ahead of ourselves, AMD did well in this skirmish, the war is far from over.

Before this, I said do not underestimate AMD, since AMD has new management. But to tell you the truth, I myself did not think AMD could be competitive with Nvidia this time. I guessed 30% less performance than the 3080. I was wrong.

Edit: ... and mindshare, tbh, which they haven't completely eroded yet, at least in some markets

The fact that RDNA3 is coming in 2 years gives nVidia room to respond.