Wednesday, October 28th 2020

AMD Announces the Radeon RX 6000 Series: Performance that Restores Competitiveness

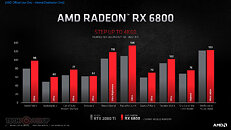

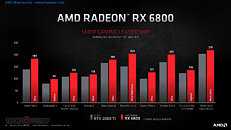

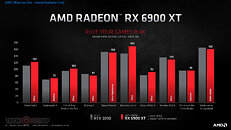

AMD (NASDAQ: AMD) today unveiled the AMD Radeon RX 6000 Series graphics cards, delivering powerhouse performance, incredibly life-like visuals, and must-have features that set a new standard for enthusiast-class PC gaming experiences. Representing the forefront of extreme engineering and design, the highly anticipated AMD Radeon RX 6000 Series includes the AMD Radeon RX 6800 and Radeon RX 6800 XT graphics cards, as well as the new flagship Radeon RX 6900 XT - the fastest AMD gaming graphics card ever developed.

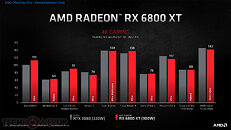

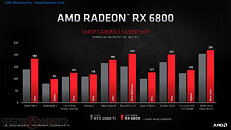

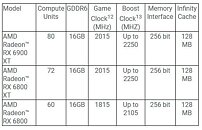

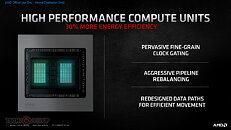

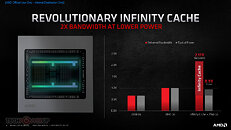

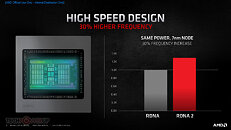

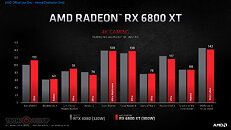

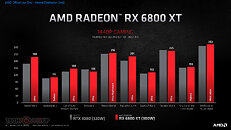

AMD Radeon RX 6000 Series graphics cards are built upon groundbreaking AMD RDNA 2 gaming architecture, a new foundation for next-generation consoles, PCs, laptops and mobile devices, designed to deliver the optimal combination of performance and power efficiency. AMD RDNA 2 gaming architecture provides up to 2X higher performance in select titles with the AMD Radeon RX 6900 XT graphics card compared to the AMD Radeon RX 5700 XT graphics card built on AMD RDNA architecture, and up to 54 percent more performance-per-watt when comparing the AMD Radeon RX 6800 XT graphics card to the AMD Radeon RX 5700 XT graphics card using the same 7 nm process technology.

AMD RDNA 2 offers a number of innovations, including applying advanced power saving techniques to high-performance compute units to improve energy efficiency by up to 30 percent per cycle per compute unit, and leveraging high-speed design methodologies to provide up to a 30 percent frequency boost at the same power level. It also includes new AMD Infinity Cache technology that offers up to 2.4X greater bandwidth-per-watt compared to GDDR6-only AMD RDNA-based architectural designs.

"Today's announcement is the culmination of years of R&D focused on bringing the best of AMD Radeon graphics to the enthusiast and ultra-enthusiast gaming markets, and represents a major evolution in PC gaming," said Scott Herkelman, corporate vice president and general manager, Graphics Business Unit at AMD. "The new AMD Radeon RX 6800, RX 6800 XT and RX 6900 XT graphics cards deliver world class 4K and 1440p performance in major AAA titles, new levels of immersion with breathtaking life-like visuals, and must-have features that provide the ultimate gaming experiences. I can't wait for gamers to get these incredible new graphics cards in their hands."

Powerhouse Performance, Vivid Visuals & Incredible Gaming Experiences

AMD Radeon RX 6000 Series graphics cards support high-bandwidth PCIe 4.0 technology and feature 16 GB of GDDR6 memory to power the most demanding 4K workloads today and in the future. Key features and capabilities include:

Powerhouse Performance

- AMD Infinity Cache - A high-performance, last-level data cache suitable for 4K and 1440p gaming with the highest level of detail enabled. 128 MB of on-die cache dramatically reduces latency and power consumption, delivering higher overall gaming performance than traditional architectural designs.

- AMD Smart Access Memory - An exclusive feature of systems with AMD Ryzen 5000 Series processors, AMD B550 and X570 motherboards and Radeon RX 6000 Series graphics cards. It gives AMD Ryzen processors greater access to the high-speed GDDR6 graphics memory, accelerating CPU processing and providing up to a 13-percent performance increase on an AMD Radeon RX 6800 XT graphics card in Forza Horizon 4 at 4K when combined with the new Rage Mode one-click overclocking setting.

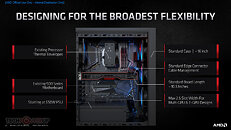

- Built for Standard Chassis - With a length of 267 mm and two standard 8-pin power connectors, and designed to operate with existing enthusiast-class 650 W-750 W power supplies, gamers can easily upgrade their existing large to small form factor PCs without additional cost.

- DirectX 12 Ultimate Support - Provides a powerful blend of raytracing, compute, and rasterized effects, such as DirectX Raytracing (DXR) and Variable Rate Shading, to elevate games to a new level of realism.

- DirectX Raytracing (DXR) - Adding a high performance, fixed-function Ray Accelerator engine to each compute unit, AMD RDNA 2-based graphics cards are optimized to deliver real-time lighting, shadow and reflection realism with DXR. When paired with AMD FidelityFX, which enables hybrid rendering, developers can combine rasterized and ray-traced effects to ensure an optimal combination of image quality and performance.

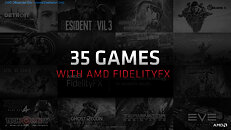

- AMD FidelityFX - An open-source toolkit for game developers available on AMD GPUOpen. It features a collection of lighting, shadow and reflection effects that make it easier for developers to add high-quality post-process effects that make games look beautiful while offering the optimal balance of visual fidelity and performance.

- Variable Rate Shading (VRS) - Dynamically reduces the shading rate for different areas of a frame that do not require a high level of visual detail, delivering higher levels of overall performance with little to no perceptible change in image quality.

- Microsoft DirectStorage Support - Future support for the DirectStorage API enables lightning-fast load times and high-quality textures by eliminating storage API-related bottlenecks and limiting CPU involvement.

- Radeon Software Performance Tuning Presets - Simple one-click presets in Radeon Software help gamers easily extract the most from their graphics card. The presets include the new Rage Mode stable overclocking setting that takes advantage of extra available headroom to deliver higher gaming performance.

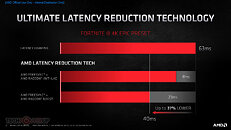

- Radeon Anti-Lag - Significantly decreases input-to-display response times and offers a competitive edge in gameplay.
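As a rough illustration of the Variable Rate Shading idea in the list above, the following Python sketch picks a coarser shading rate for screen tiles with little visual detail. It is not the DirectX 12 API or AMD's implementation: the function names, the contrast measure, and the thresholds are all hypothetical, chosen only to show the principle.

```python
# Toy model of content-adaptive shading-rate selection.
# Everything here (names, thresholds, the contrast measure) is an assumption
# made for illustration; real VRS is configured through the graphics API.

def tile_contrast(tile):
    """Difference between the brightest and darkest luminance in a tile."""
    values = [v for row in tile for v in row]
    return max(values) - min(values)

def pick_shading_rate(tile, low=0.05, high=0.25):
    """Return (width, height) of the pixel block shaded by one invocation."""
    contrast = tile_contrast(tile)
    if contrast < low:
        return (4, 4)   # nearly uniform: one shader result per 4x4 block
    if contrast < high:
        return (2, 2)   # some detail: one result per 2x2 block
    return (1, 1)       # detailed: shade every pixel

def shader_invocations(tile_size, rate):
    """Number of shader invocations for a square tile at a given rate."""
    width, height = rate
    return (tile_size // width) * (tile_size // height)

# An 8x8 tile of flat sky and an 8x8 tile containing a hard edge.
flat_sky = [[0.80] * 8 for _ in range(8)]
hard_edge = [[0.0] * 4 + [1.0] * 4 for _ in range(8)]

for name, tile in (("flat sky", flat_sky), ("hard edge", hard_edge)):
    rate = pick_shading_rate(tile)
    print(name, rate, shader_invocations(8, rate))
```

In this toy model the uniform tile needs 4 shader invocations instead of 64, while the tile with the edge keeps full-rate shading.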

In the coming weeks, AMD will release a series of videos from its ISV partners showcasing the incredible gaming experiences enabled by AMD Radeon RX 6000 Series graphics cards in some of this year's most anticipated games. These videos can be viewed on the AMD website.

- DIRT 5 - October 29

- Godfall - November 2

- World of Warcraft: Shadowlands - November 10

- RiftBreaker - November 12

- Far Cry 6 - November 17

- AMD Radeon RX 6800 and Radeon RX 6800 XT graphics cards are expected to be available from global etailers/retailers and on AMD.com beginning November 18, 2020, for $579 USD SEP and $649 USD SEP, respectively. The AMD Radeon RX 6900 XT is expected to be available December 8, 2020, for $999 USD SEP.

- AMD Radeon RX 6800 and RX 6800 XT graphics cards are also expected to be available from AMD board partners, including ASRock, ASUS, Gigabyte, MSI, PowerColor, SAPPHIRE and XFX, beginning in November 2020.

394 Comments on AMD Announces the Radeon RX 6000 Series: Performance that Restores Competitiveness

Combining two different memory technologies like you are suggesting would be a complete and utter nightmare. Either you'd need to spend a lot of time and compute shuffling data back and forth between the two VRAM pools, or you'd need to double the size of each (i.e. instead of a 16GB GPU you'd need a 16+16GB GPU), driving up prices massively. Not to mention the board space requirements - those boards would be massive, expensive, and very power hungry. And then there's all the issues getting this to work - if you're using VRS as a differentiator then parts of the GPU need to be rendering a scene from one VRAM pool with the rest of the GPU rendering the same scene from a different VRAM pool, which would either mean waiting massive amounts of time for data to copy over, tanking performance, or keeping data duplicated in two VRAM pools simultaneously, which is both expensive in terms of power and would cause all kinds of issues with two different render passes and VRAM pools each informing new data being loaded to both pools at the same time. As I said: a complete and utter nightmare. Not to mention that one of the main points of Infinity Cache is to lower VRAM bandwidth needs. Adding something like this on top makes no sense.

I would expect narrower buses for lower end GPUs, though the IC will likely also shrink due to the sheer die area requirements of 128MB of SRAM. I'm hoping for 96MB of IC and a 256-bit or 192-bit bus for the next cards down. 128 won't be doable unless they keep the full size cache, and even then that sounds anemic for a GPU at that performance level (RTX 2080-2070-ish).

From AMD's utter lack of mentioning it, I'm guessing CrossFire is just as dead now as it was for RDNA1, with the only support being in major benchmarks.

Just a lot of people didn’t have faith in AMD because it seemed that it was too far behind in the GPU market. But the last 3-4 years AMD has shown some seriousness about their products, and seem more organized and focused.

I'd like to see what could be done with CF with the Infinity Cache. Better bandwidth and I/O, even if it gets split among the GPUs, should translate to less micro stutter. Fewer bus bottleneck complications are always good. It would be interesting if some CPU cores got introduced on the GPU side and a bit of on-the-fly compression in the form of LZX or XPRESS 4K/8K/16K got used prior to the Infinity Cache sending that data along to the CPU. Even if it could only compress files up to a certain size quickly, it would be quite useful, and you can use those types of compression methods with VRAM as well.

That's typically my budget. I am hoping something gets released along those lines, it could double my current performance and put it in 5700XT performance territory.

If not oh well, I have many other ways to put $350 cnd to better use.

And I’m pretty convinced that Crossfire has been dead for a long time.

RTINGS rates the C9/B9 VRR at 4K 40-60Hz (maybe because they didn't have an HDMI 2.1 source to test higher rates?)

Looks like there is a modding solution to activate FreeSync up to 4K 120Hz

Amd/comments/g65mw7

some guys are experiencing short black screens when launching/exiting some game.

but well no big issue.

I think I'm gonna go red. Sweet 16GB and frequencies reaching heaven, 2300MHz+. Yummy, I want it, give that to me.

Green is maxing out at 1980MHz on all their products. OC basically doesn't exist there.

DXR on AMD with 1 RA (Ray Accelerator) per CU seems not so bad. I mean, with the consoles willing to implement a bit of raytracing, at least we will be able to activate DXR and see what it looks like. Anyway, it doesn't look like the wow thing right now. Just some puddle reflections here and there.

DLSS... well, AMD is working on a solution, and given that the consoles need it since they have less power to reach 4K 60+ FPS, this could get somewhere in the end.

Driver bugs: many 5700 XT users haven't reported issues for many months, and again, given that most games are now developed for both PC and consoles, I'm pretty confident that AMD is gonna be robust.

Also I'm fed up with all my interfaces being green (GeForce Experience). I want to discover the AMD HMI just for the fun of looking into every possible menu and option.

I would have gone for the 6900 XT if they had priced it at $750 on an 80 / 72 CU ratio basis.

That would have been reasonable. Even $800 just for the "Elite" product feeling. But $999? They went berserk here. Not gonna give them credit.
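The CU-ratio pricing argument above can be checked in a few lines. This is only a back-of-the-envelope sketch: the prices are the announced USD SEP figures, the CU counts are the 80 and 72 quoted in the comment, and the helper names are made up for illustration. It also assumes price should scale linearly with CU count, which is the commenter's premise, not an established rule.

```python
# Announced launch prices (USD SEP) and the CU counts quoted in the thread.
CARDS = {
    "RX 6800 XT": {"cus": 72, "price": 649},
    "RX 6900 XT": {"cus": 80, "price": 999},
}

def price_per_cu(name):
    """Dollars paid per compute unit for a given card."""
    card = CARDS[name]
    return card["price"] / card["cus"]

def scaled_price(base, target):
    """Price of `target` if it cost the same per CU as `base`."""
    return price_per_cu(base) * CARDS[target]["cus"]

for name in CARDS:
    print(f"{name}: ${price_per_cu(name):.2f} per CU")

fair = scaled_price("RX 6800 XT", "RX 6900 XT")
print(f"RX 6900 XT at RX 6800 XT pricing per CU: ${fair:.2f}")
print(f"Premium over that figure: ${CARDS['RX 6900 XT']['price'] - fair:.2f}")
```

On a strictly linear basis the 6900 XT would land around $721, so the $750 figure already includes a small premium.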

In my opinion they should have made a bigger die and crushed Nvidia once and for all, just for the fun.

All in all... November seems exciting.

Ah yeah thx, just saw your answer now. Good to see that it works for you as well.

This I “heard” partially...

The three 6000 cards we’ve seen so far are based on Navi21, right? 80CUs full die. They may have one more N21 with even fewer CUs, don’t know how many, probably 56 or even fewer active, with 8GB(?) and probably the same 256bit bus. But this isn’t coming soon I think, because they may have to build inventory first (because of present good fab yields) and also see how things go with nVidia.

Further down they have Navi22. Probably (?)40CUs full die with 192bit bus, (?)12GB, and clocks up to 2.5GHz, 160~200W, with who knows how much IC it will have. That will be better than 5700XT.

And also cutdown versions of N22 with 32~36CUs 8/10/12GB 160/192bit (for 5600/5700 replacements) and so on, but at this point it's all pure speculation and things may change in the future.

Also rumors for Navi23 with 24~32CUs but... it’s way too soon.

Navi21: 4K

Navi22: 1440p and ultrawide

Navi23: 1080p only

That being said, it makes no sense for them to launch further disabled Navi 21 SKUs. Navi 21 is a big and expensive die, made on a mature process with a low error rate. They've already launched a SKU with 25% of CUs disabled. Going below that would only be warranted if there were lots of defective dice that didn't even have 60 working CUs. That's highly unlikely, and so they would then be giving up chips they could sell in higher power, more expensive SKUs just to make cut-down ones - again, why would they do that? And besides, AMD has promised that RDNA will be the basis for their full product stack going forward, so we can expect at the very least two more die designs going forward - they had two below 60 CUs for RDNA 1 after all, and reducing that number makes no sense at all. I would expect the rumors of a mid-size Navi 22 and a small Navi 23 to be relatively accurate, though I'm doubtful about Navi 22 having only 40 CUs - that's too big a jump IMO. 44, 48? Sure. And again, 52 would place it too close to the 6800. 80-72-60-(new die)-48-40-32-(new die)-28-24-20 sounds like a likely lineup to me, which gives us everything down to a 5500 non-XT, with the possibility of 5400/5300 SKUs with disabled memory, lower clocks, etc.
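To put numbers on the lineup guess above, here is a small sketch that computes what share of each die's CUs would be disabled per SKU. Only the Navi 21 figures (80, 72, 60) correspond to announced cards; the two smaller dies and their CU counts are pure speculation from this post, and the labels and helper name are just illustrative.

```python
# CU counts per die, full die first. Only Navi 21 is announced hardware;
# the other two rows are the speculative lineup from the post above.
LINEUP = {
    "Navi 21": [80, 72, 60],
    "mid-size die (speculated)": [48, 40, 32],
    "small die (speculated)": [28, 24, 20],
}

def disabled_share(full, active):
    """Fraction of the full die's CUs that are switched off."""
    return 1 - active / full

for die, skus in LINEUP.items():
    full = skus[0]
    for active in skus:
        share = disabled_share(full, active)
        print(f"{die}: {active}/{full} CUs active, {share:.1%} disabled")
```

The 60 CU SKU works out to exactly 25% disabled, matching the figure in the post, and the guessed lower tiers would cut a similar or larger share of their own dies.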

As for memory, I agree with @Zach_01 that AMD will likely stay away from GDDR6X entirely. It just doesn't make sense for them. With IC working to the degree that they only need a relatively cheap 256-bit GDDR6 bus on their top end SKU, going for a more expensive, more power hungry RAM standard on a lower end SKU would just be plain weird. What would they gain from it? I wouldn't be surprised if Navi 22 still had a 256-bit bus, but it might only get fully enabled on top bins (6700 XT, possibly 6700) - a 256-bit bus doesn't take much board space and isn't very expensive (the RX 570 had one, after all). My guess: fully enabled Navi 22 will have something like a 256-bit G6 bus with 96MB of IC. Though it could of course be any number of configurations, and no doubt AMD has simulated the crap out of this to decide which to go for - it could also be 192-bit G6+128MB IC, or even 192-bit+96MB if that delivers sufficient performance for a 6700 XT SKU.
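For a sense of scale on the bus widths discussed above, peak memory bandwidth is bus width times per-pin data rate divided by 8. The sketch below assumes 16 Gbps GDDR6, which is not stated anywhere in this thread, so treat both the data rate and the function name as assumptions. It also ignores Infinity Cache entirely, which is the whole point of the narrower bus.

```python
# Assumed GDDR6 per-pin data rate in Gbit/s. This value is an assumption
# for illustration and is not taken from the announcement or the thread.
ASSUMED_GDDR6_GBPS = 16.0

def peak_bandwidth_gb_s(bus_width_bits, gbps_per_pin=ASSUMED_GDDR6_GBPS):
    """Theoretical peak bandwidth in GB/s: one pin per bus bit, 8 bits per byte."""
    return bus_width_bits * gbps_per_pin / 8

for bus in (256, 192, 128):
    print(f"{bus}-bit bus: {peak_bandwidth_gb_s(bus):.0f} GB/s")
```

Under that assumption a 192-bit bus gives up a quarter of the raw bandwidth of a 256-bit one, which is the gap a given amount of IC would have to cover.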

It's really nice and exciting to see them both fight for "seats" and market share all over again... Not only for the new and more advanced products (from both), but for the competition too!

I'm all set for a GPU for the next couple of years but all I want is to see them fight!!

That is a lot more from the 15 month period (RDNA1 to 2).

The impressive stuff may continue on their all new platform in early 2022 for ZEN5 and late 2022 for RDNA3, and it could be bigger than what we’ve seen so far.

www.tweaktown.com/images/news/7/4/74274_06_amds-next-gen-rdna-3-revolutionary-chiplet-design-could-crush-nvidia_full.png

(Source.) It's AMD's typical vague roadmap, it can mean any part of 2022, though 2021 is very unlikely.

RDNA3 is said to be another huge leap and on TSMC's 5nm whilst Nvidia will be trundling along on 7nm or flirting with Samsung's el cheapo crappy 8nm+ or 7 nm (nvidia mistake) with their 4000 series.

Plus, while AMD might feel encouraged to slow things down a bit on the CPU side, since they are starting to compete with themselves a bit, in the GPU market they need to keep the fast pace for quite a while, before even hoping to get to a similar position.