Monday, January 4th 2021

AMD Radeon Navi 21 XTXH Variant Spotted, Another Flagship Graphics Card Incoming?

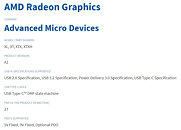

AMD has recently launched its Radeon "Big Navi" 6000 series of graphics cards, entering the high-end market and positioning itself well against the competition. The "Big Navi" graphics cards are based on the Navi 21 XL (Radeon RX 6800), Navi 21 XT (Radeon RX 6800 XT), and Navi 21 XTX (Radeon RX 6900 XT) GPU revisions, each of which features a different number of Shaders/TMUs/ROPs. The Navi 21 XTX is the highest-performance revision, featuring 80 Compute Units with 5120 cores. However, it seems that AMD is preparing another, similar piece of silicon called Navi 21 XTXH. Currently, it is unknown what the additional "H" means. It could indicate an upgraded version with more CUs, or perhaps a slightly cut-down configuration. It is unclear where such a GPU would fit in the lineup, or whether it is just an engineering sample that will never make it to market. It could represent a potential response from AMD to NVIDIA's upcoming GeForce RTX 3080 Ti graphics card, though that is just speculation. Another possibility is that such a GPU would be part of a mainstream notebook lineup, just as Renoir comes in an "H" variant. We have to wait and see what AMD does to find out more.

Sources:

USB, via VideoCardz

40 Comments on AMD Radeon Navi 21 XTXH Variant Spotted, Another Flagship Graphics Card Incoming?

XH - extra Hot ;)

Now I'm waiting for the 3080ti.

As I suggested in my first comment, I'm referring to the H-series CPUs. I'm deducing the XTXH would be an XTX-class GPU coupled with an H-series CPU (i.e. high-performance mobile). As mobile parts go, AMD does add the "m" suffix to their retail branding, but XTX is not a retail name (it was once, a long time ago, but not any more). XTX defines the die family it belongs to.

At 27W I can't say it's a 3090-killer, as this looks like the power envelope for a mobile GPU.

But hey, this is Micron we are talking about here. They've been caught being anti-competitive in the past. I very much doubt they care about screwing AMD over if Nvidia has promised to buy a ton of their chips.

First, this is how things look on a 7870-level GPU (PS4, non-Pro):

Reflections, shades, light effects, you name it, all in there.

Second, this is how things look in the demo of the latest version of the most popular game engine on the planet. Oh, and it uses none of the "hardware RT", even though it is present on the respective platform and even though hardware RT is supported by even older versions of the same engine:

Last, but not least, people do not seem to know what "hardware RT" actually is and what it isn't.

If we trust DF "deep dive" (in many parts, honestly, pathetic, but there aren't too many reviews of the kind to choose from) there are multiple steps involved in what is regarded as RT:

1) Creating the structure

2) Checking rays for intersections

3) Doing something with that (denoising, some temporal tricks for reflection, etc)

In this list, ONLY STEP 2 is hardware accelerated. This is why, while AMD likely beats NV in raw RT-ing power (especially with that uber Infinity Cache), most green-sponsored titles run poorly on AMD GPUs, as #1 and #3, while having nothing to do with RT, are largely optimized for NV GPUs.
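To make step 2 a bit more concrete, here is a rough sketch of the kind of test it boils down to: checking whether a ray hits an axis-aligned box (the classic "slab test"). Everything here is made up for illustration. The function name `ray_hits_box`, the flat `boxes` list standing in for the step 1 structure (real engines would typically use a tree of boxes), and the sample coordinates are assumptions, not anything taken from DF, AMD or NVIDIA.

```python
import math

def ray_hits_box(origin, direction, box_min, box_max):
    """Slab test: True if the ray origin + t*direction (t >= 0) hits the box."""
    t_near, t_far = 0.0, math.inf
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if d == 0.0:
            # Ray runs parallel to this pair of planes: it must start between them.
            if o < lo or o > hi:
                return False
            continue
        # Distances along the ray to the two planes bounding this axis.
        t0, t1 = (lo - o) / d, (hi - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        # Shrink the interval in which the ray is inside all slabs so far.
        t_near = max(t_near, t0)
        t_far = min(t_far, t1)
        if t_near > t_far:
            return False
    return True

# Step 1 stand-in (hypothetical): a flat list of boxes instead of a real tree.
boxes = [((1, -1, -1), (2, 1, 1)), ((5, 5, 5), (6, 6, 6))]

# Step 2: test one ray (from the origin, pointing along +x) against every box.
hits = [ray_hits_box((0, 0, 0), (1, 0, 0), lo, hi) for lo, hi in boxes]
print(hits)  # [True, False]
```

Building the structure and whatever is done with the hits afterwards (steps 1 and 3) are separate work that this sketch does not cover.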

This also shows that "AMD is behind on RT" doesn't say which part of "RT" it is about (and why AMD's GPUs wipe the floor with green GPUs in, say, Dirt 5 RT).

Ultimately, the tech fails to deliver on... pretty much any front:

Promise 1: "yet unseen graphics"

There are NO uber effects that we have not seen already, and to make it more insulting, even GoW on PS4 has 90%+ of all of them, despite using a pathetic GPU.

Promise 2: "mkay, we had those effects, but now it's so much easier to implement"

It is exactly the opposite. There is a lot of tinkering, most of it GPU manufacturer specific, AND it still has a major negative impact on performance.

CP2077 is an interesting example of it, with many "RT on" versions looking WORSE than "RT off".

Oh, and then there is "DLSS: The Hype For Brain Dead"... :D

www.tomshardware.com/news/6900-xt-overclocked-to-world-record

We're going to see a Navi 22 GXL, XT, XTX & XTXH as well, already seen them in person :P

Too many of the RTX on/off comparisons aren't fair comparisons, they're RTX vs no effort at all - not RTX vs the alternative shadow/reflection/illumination/transparency methods we've seen since DX11 engines became popular. Gimmicks like Quake2 RTX or Minecraft are interesting tech demos, but that's not to say you couldn't also get very close facsimiles of their appearance at far higher framerates if developers put in the effort to import those assets into a modern game engine that supports SSAO, SSR, dynamic shadowmaps etc.

IMO, realtime raytracing may be the gold-standard for accuracy but it's also the least-efficient, dumbest, brute-force method that ends up being the least elegant solution with the worst possible performance of all the potential methods to render a scene's lighting.

DLSS and FidelityFX SR should not be dragged down with the flaws of raytracing though. Sure, it's a crutch that can go some way towards mitigating the inefficiencies of realtime raytracing, but that's shining praise of the technology: it can singlehandedly undo most of the damage caused by the huge performance penalties of RT, and alongside VRS, I believe it is the technology that will let us continue increasing resolution without needing exponentially more GPU power to keep up. I have a 4K120 TV and AAA games simply aren't going to run at 4K120 without help. 4K displays have been mainstream for a decade and mass 8K adoption is looming on the horizon, no matter how pointless it may seem.