Tuesday, January 5th 2021

Intel DG2 Xe-HPG Features 512 Execution Units, 8 GB GDDR6

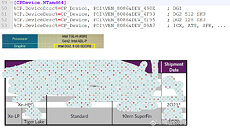

Intel's return to discrete gaming GPUs may have had a modest beginning with the Iris Xe MAX, but the company is looking to take a real stab at the gaming market. Driver code from the latest 100.9126 graphics driver, and OEM data-sheets pieced together by VideoCardz, reveal that its next attempt will be substantially bigger. Called "DG2," and based on the Xe-HPG graphics architecture, a derivative of Xe targeting gaming graphics, the new GPU allegedly features 512 Xe execution units (EUs). To put this number into perspective, the Iris Xe MAX (DG1) features 96, as does the Iris Xe iGPU found in Intel's "Tiger Lake" mobile processors. The upcoming 11th Gen Core "Rocket Lake-S" is rumored to have an Xe-based iGPU with 48. Assuming comparable clock speeds, this alone amounts to a roughly 5x compute power uplift over DG1, and roughly 10x over the "Rocket Lake-S" iGPU. At 8 shaders per EU, 512 EUs convert to 4,096 programmable shaders.
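The scaling figures above are simple ratios of execution-unit counts. A minimal sketch in Python reproduces them, assuming equal clock speeds and 8 shaders per EU (the ratio implied by 512 EUs mapping to 4,096 shaders). The function and variable names are illustrative only, and real-world performance would depend on far more than EU count:

```python
# Back-of-the-envelope EU scaling, assuming comparable clock speeds.
# EU counts are the figures quoted in the article; all names are illustrative.
EU_COUNTS = {
    "DG2 (Xe-HPG, alleged)": 512,
    "Iris Xe MAX (DG1)": 96,
    "Rocket Lake-S iGPU (rumored)": 48,
}

# Assumption implied by the article: 512 EUs -> 4,096 shaders, i.e. 8 per EU.
SHADERS_PER_EU = 8


def relative_uplift(target_eus: int, baseline_eus: int) -> float:
    """Ratio of EU counts, a crude proxy for compute uplift at equal clocks."""
    return target_eus / baseline_eus


def shader_count(eus: int) -> int:
    """Programmable shaders for a given EU count."""
    return eus * SHADERS_PER_EU


dg2_eus = EU_COUNTS["DG2 (Xe-HPG, alleged)"]
for name, eus in EU_COUNTS.items():
    print(f"{name}: {shader_count(eus)} shaders, "
          f"DG2 has {relative_uplift(dg2_eus, eus):.2f}x the EUs")
```

The exact ratios come out slightly above the rounded "5x" and "10x" quoted in the text.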

A leaked OEM data-sheet referencing the DG2 also mentions a rather contemporary video memory setup, with 8 GB of GDDR6 memory. While the Iris Xe MAX is built on Intel's homebrew 10 nm SuperFin node, Intel announced that its Xe-HPG chips will use third-party foundries. With these specs, Intel potentially has a GPU to target competitive e-sports gaming (where the money is). Sponsorship of major e-sports clans could help with the popularity of Intel Graphics. With enough beans on the pole, Intel could finally invest in scaling up the architecture to even higher client graphics market segments. As for availability, VideoCardz predicts a launch roughly coinciding with that of Intel's "Tiger Lake-H" mobile processor series, possibly slated for mid-2021.

Source:

VideoCardz


34 Comments on Intel DG2 Xe-HPG Features 512 Execution Units, 8 GB GDDR6

Wake me up when they get serious k

First, 4096SP is about 6.4 MX350's.

Second, RX6800 is 6 MX350's.

So to build off the conservative "5x" remark from @Vayra86 we'd choose the GTX 1050 rather than the MX350 as the memory subsystem is more in line with the DG2's dedicated GDDR. 5x of GTX 1050 performance is the RTX 3070/RTX 2080 Ti. That's pretty serious if it's priced well, and judging by only 8GB of VRAM it probably will be.

Can Intel feed those SPs and are they efficient enough? Is the support in good order? All of this can easily put them far below 6 MX350's and I reckon it will. And then there is power budget... die size... lots of things that really matter to gauge if there is any future in it at all.

Anyway, I hope they make a big dent with their first serious release. I just question if this is the one, as we've not really seen much of Xe in gaming yet. It's easy to feed a low-end IGP next to a CPU, and it's what Intel has done for ages, and the results are convincingly unimpressive. I still don't quite understand why they axed Broadwell as they did, when you see console APUs now that are using similar cache and on-chip memory systems. But maybe that was the old, pre-panic-mode-laid-back-quadcore-Intel at work. Today they have Raja but somehow that doesn't inspire confidence.

This is a wild assumption and another reason I'm not entirely convinced this is the big one for Intel. Last I checked they wanted to leverage their 10nm SuperFin, but apparently it really doesn't like power a lot.

videocardz.com/newz/intel-confirms-dg2-gpu-xe-hpg-features-up-to-512-execution-units

Edit: Haven't heard much about TSMC 6nm, apparently it was revealed late 2019. According to this, they went into mass production in August 2020. Couldn't find what they were mass producing though. Perhaps it is DG2.

Edit 2: According to this article, 6nm is an enhanced EUV 7nm with +20% density. For reference, this would make it about the same density as Samsung's new 5nm node.

www.gizchina.com/2020/08/21/tsmc-6nm-process-is-currently-in-mass-production/

"Intel gaming GPU" doesn't roll off the tongue just yet, and knowing their history, it's no wonder that most people are cautious. For "newcomers" to the market this close to launch, I don't know if their not giving any numbers on the expected performance is a sign of wisdom, or just that there's nothing to hype about.

If we take a Vega8 and multiply by 5.3 to emulate the shift from 96 to 512 EU, we get a "Vega42". That's not exactly bleeding edge, given that a Vega64 is starting to show its age, but 2/3rds of a Vega64 would probably put it in the ballpark of a GTX 1060 6GB or RX570.
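The estimate above can be reproduced as a quick calculation. This is only a sketch of the commenter's reasoning: it assumes a 96 EU Xe iGPU performs like a Vega 8 and that performance scales linearly with unit count, neither of which is established. The names are illustrative:

```python
# Reproduces the "Vega42" estimate: scale Vega 8's compute units (CUs) by
# the same factor as the jump from 96 to 512 EUs.
# Assumption (the commenter's, not a measurement): 96 Xe EUs ~ Vega 8.
XE_IGPU_EUS = 96
DG2_EUS = 512
VEGA8_CUS = 8
VEGA64_CUS = 64


def scaled_cus(base_cus: int, base_eus: int, target_eus: int) -> float:
    """Scale a CU count linearly by the ratio of EU counts."""
    return base_cus * target_eus / base_eus


equivalent_cus = scaled_cus(VEGA8_CUS, XE_IGPU_EUS, DG2_EUS)
fraction_of_vega64 = equivalent_cus / VEGA64_CUS

print(f"Vega-equivalent CUs: {equivalent_cus:.1f}")
print(f"Fraction of a Vega 64: {fraction_of_vega64:.2f}")
```

Under those assumptions the result lands at roughly two thirds of a Vega 64, matching the comment.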

If Intel are now willing to invest the resources to compete at gaming GPUs (and surely AI/compute as well), it will happen. It is a gigantic investment at this point, but they aren't a strapped-for-cash startup. I'm all for seeing NVidia getting slapped around a bit. Heh.

Sucking up some more of TSMC's capacity will surely affect other parts of the market though. Zero-sum game, you can't get blood from a turnip.