Friday, May 7th 2021

SSDs More Reliable than HDDs: Backblaze Study

In the early days of SSDs, some 11 years ago, SSDs were considered unreliable. They could fail at random, causing irrecoverable data loss. Gaming desktop users usually paired the SSD in their builds with an HDD so they could take regular whole-disk images of the SSD onto the HDD; Microsoft even added a disk imaging feature with Windows 7. Since then, SSDs have come a long way in reliability, and they now offer high endurance and longer warranties than HDDs. Notebook vendors are increasingly opting for SSDs as the sole storage device in their thin-and-light products. A Backblaze study reveals an interesting finding: SSDs are 21 times more reliable than HDDs.

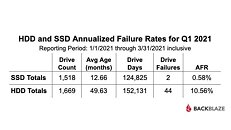

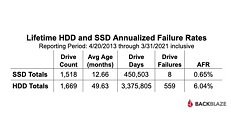

Backblaze is known for its regular, actionable studies of storage device reliability in the enterprise segment, particularly dissecting how each brand of HDD and SSD fares in terms of drive failures and annualized failure rates (AFR). In a study covering Q1 2021 (January 1 to March 31), Backblaze finds that the AFR of HDDs across brands stands at 10.56%. Over the same period, SSDs across brands posted a stunning AFR of 0.58%. In other words, at those annualized rates, roughly 1 in 10 HDDs would fail in a year, compared to about 1 in 170 SSDs. Things get interesting when Backblaze looks all the way back to 2013, when it started studying drive reliability. With annualized failure rates studied between April 2013 and April 1, 2021, Backblaze finds that SSDs post an AFR of 0.65%, while HDDs post 6.04%. Several factors explain the higher HDD failure rates: HDDs have moving parts and tend to reach a higher average age before they are replaced; SSDs, until they reach their manufacturer-rated endurance, are highly tolerant of electrical faults thanks to capacitor banks; and HDD manufacturers have generally reduced the warranties on their drives since the 2011 Thailand floods that hit HDD factories.
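
To make the numbers above easier to compare, here is a rough Python sketch. It assumes the AFR formula Backblaze publishes in its Drive Stats reports (failures divided by drive-years, where drive-years = drive days / 365, times 100) and converts the quoted AFRs into "about 1 in N drives per year" and into HDD-to-SSD ratios. The helper names (`annualized_failure_rate`, `one_in_n`, `afr_ratio`) and the 1,000-drive example are hypothetical illustrations, not Backblaze code or data.

```python
# Rough sketch of Backblaze-style AFR arithmetic.
# Assumption: AFR (%) = failures / (drive_days / 365) * 100, as described in
# Backblaze's Drive Stats reports. Helper names here are hypothetical.


def annualized_failure_rate(failures: int, drive_days: float) -> float:
    """Return AFR in percent: failures per drive-year of operation, times 100."""
    if drive_days <= 0:
        raise ValueError("drive_days must be positive")
    drive_years = drive_days / 365
    return failures / drive_years * 100


def one_in_n(afr_percent: float) -> float:
    """Express an AFR as 'about 1 in N drives fails per year'."""
    return 100 / afr_percent


def afr_ratio(afr_a: float, afr_b: float) -> float:
    """How many times higher AFR a is than AFR b."""
    return afr_a / afr_b


# AFR figures (percent) quoted in the article.
Q1_2021 = {"HDD": 10.56, "SSD": 0.58}
LIFETIME = {"HDD": 6.04, "SSD": 0.65}

if __name__ == "__main__":
    for label, rates in (("Q1 2021", Q1_2021), ("Apr 2013-Apr 2021", LIFETIME)):
        hdd, ssd = rates["HDD"], rates["SSD"]
        print(
            f"{label}: HDD ~1 in {one_in_n(hdd):.1f}, "
            f"SSD ~1 in {one_in_n(ssd):.0f}, "
            f"HDD/SSD AFR ratio {afr_ratio(hdd, ssd):.1f}x"
        )
    # Hypothetical fleet: 1,000 drives running for 90 days with 2 failures.
    print(f"Hypothetical AFR: {annualized_failure_rate(2, 90 * 1000):.2f}%")
```

With the quoted figures, the HDD AFR works out to about 18.2 times the SSD AFR for Q1 2021 and about 9.3 times over the April 2013 to April 2021 period. The hypothetical 1,000-drive example (2 failures in 90 days) comes to an AFR of about 0.81%, which shows why annualizing matters: a short observation window is scaled up to a full year of operation.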

Source: Tom's Hardware