Thursday, August 26th 2021

NVIDIA Readying GeForce RTX 3090 SUPER, A Fully Unlocked GA102 with 400W Power?

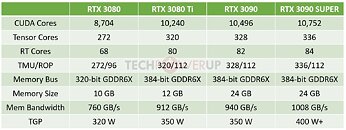

NVIDIA is readying the GeForce RTX 3090 SUPER, the first "SUPER" series model from the RTX 30-series, following a recent round of "Ti" refreshes for its product stack. According to kopite7kimi and Greymon55, who each have a high strike-rate with NVIDIA rumors, the RTX 3090 SUPER could finally max out the 8 nm "GA102" silicon on which nearly all high-end models from this NVIDIA GeForce generation are based. A fully unlocked GA102 comes with 10,752 CUDA cores, 336 Tensor cores, 84 RT cores, 336 TMUs, and 112 ROPs. The RTX 3090 stops short of maxing this out, with its 10,496 CUDA cores.
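To make the counts above concrete, here is a minimal sketch that derives them from the number of enabled streaming multiprocessors (SMs). It assumes Ampere's GA10x SM layout of 128 CUDA cores, 4 Tensor cores, 1 RT core and 4 TMUs per SM, with 84 SMs on a full GA102 and 82 on the RTX 3090; those SM counts are not stated in the article. ROPs are tied to the GPC layout rather than to individual SMs, so they are left out.

```python
# Per-SM resources of an Ampere GA10x streaming multiprocessor (SM).
# Assumption: 128 CUDA cores, 4 Tensor cores, 1 RT core and 4 TMUs per SM.
PER_SM = {"cuda_cores": 128, "tensor_cores": 4, "rt_cores": 1, "tmus": 4}


def units_for(sm_count: int) -> dict:
    """Scale the per-SM resources to a chip or SKU with `sm_count` enabled SMs."""
    return {name: count * sm_count for name, count in PER_SM.items()}


full_ga102 = units_for(84)  # fully unlocked GA102 (assumed 84 SMs)
rtx_3090 = units_for(82)    # RTX 3090 (assumed 82 SMs enabled)

print(full_ga102)
extra = full_ga102["cuda_cores"] - rtx_3090["cuda_cores"]
print(f"Extra CUDA cores on a full GA102: {extra} (+{extra / rtx_3090['cuda_cores']:.1%})")
```

Under these assumptions the full chip works out to the 10,752 CUDA cores, 336 Tensor cores, 84 RT cores and 336 TMUs quoted above, only about 2.4% more CUDA cores than the RTX 3090.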

NVIDIA's strategy with the alleged RTX 3090 SUPER will be to not only max out the GA102 silicon, with its 10,752 CUDA cores, but also equip it with the fastest possible GDDR6X memory variant, which ticks at 21 Gbps data-rate, compared to 19.5 Gbps on the RTX 3090, and 19 Gbps on the RTX 3080 and RTX 3080 Ti. At this speed, across the chip's 384-bit wide memory bus, the RTX 3090 SUPER will enjoy 1 TB/s of memory bandwidth. Besides the extra CUDA cores, the GPU Boost frequency could also be increased. All this comes at a cost, though, with Greymon55 predicting a total graphics power (TGP) of at least 400 W, compared to the 350 W of the RTX 3090. A product launch is expected within 2021.
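The 1 TB/s figure follows from simple arithmetic: peak bandwidth is the per-pin data rate multiplied by the bus width, divided by 8 bits per byte. The sketch below applies that to the data rates quoted above on a 384-bit bus, assuming the RTX 3080 Ti also uses the full 384-bit bus (the RTX 3080 uses a narrower 320-bit bus, so it is left out), and also works out the rumored TGP increase.

```python
def bandwidth_gb_s(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Peak memory bandwidth in GB/s: per-pin data rate x bus width / 8 bits per byte."""
    return data_rate_gbps * bus_width_bits / 8


BUS_WIDTH = 384  # GA102's full memory bus width, as stated in the article

# Data rates in Gbps; the 3080 Ti entry assumes it also runs a 384-bit bus.
cards = [("RTX 3090 SUPER (rumored)", 21.0), ("RTX 3090", 19.5), ("RTX 3080 Ti", 19.0)]
for name, rate in cards:
    print(f"{name}: {bandwidth_gb_s(rate, BUS_WIDTH):.0f} GB/s")

# Rumored power increase: at least 400 W TGP versus 350 W on the RTX 3090.
power_increase = 400 / 350 - 1
print(f"TGP increase: at least {power_increase:.1%}")
```

This gives 1,008 GB/s for the rumored card versus 936 GB/s for the RTX 3090 (912 GB/s at 19 Gbps), bought with at least 14.3% more board power.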

Sources:

Greymon55 (Twitter), kopite7kimi (Twitter), WCCFTech


99 Comments on NVIDIA Readying GeForce RTX 3090 SUPER, A Fully Unlocked GA102 with 400W Power?

Do none of you realize that the reason Nvidia is releasing this card is that they have more dies than they can sell to the professional crowd? If they didn't, this card would not exist; Nvidia is always going to make a larger profit off the professional crowd than off the gaming crowd. To me this just signals the beginning of the end of the card shortage; it'll just trickle down from here.

So, really, being all happy about a Super that is still inferior to what's possible even with the same architecture, just so Nvidia makes more money "to produce more"... it really doesn't get more backwards than that. You pay a premium for sub-top, cut-down chips on a suboptimal node.

Enjoy it, anyway. But a 3090 really isn't great in any way, shape or form if you ask me. The fact is AMD has the better chips now, node- and efficiency-wise.

I do think your last sentence is accurate. Shortages are slowly going away. But it has nothing to do with Nvidia sales or money.

IMO ray tracing was a mistake, and the performance benefits of DLSS could easily have been matched by actual performance upgrades gen after gen instead of ray tracing. People are going to disagree with me, and that's fine, but Nvidia spent hundreds of millions to convince laymen consumers that ray tracing was the future, when in reality it made things worse for benefits only an eagle eye would see.

Performance per watt of the 3060/3060 Ti/3070 is fantastic: way better than the previous gen on TSMC 12nm and competitive with the RDNA2 parts made on TSMC 7nm.

I think the real problem is that Ampere's design simply isn't power efficient at scale. Nvidia and Nvidia apologists are quick to blame Samsung, but the real blame here lies with Nvidia for making a GPU with >28bn transistors, over 50% more than the previous gen. There was no way that was ever not going to be a power-guzzling monster. To put it into context, Nvidia's GA102 has more transistors than the 6900 XT, and you have to remember that almost a third of the transistors in Navi 21 are just cache, which uses very little power.
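A quick check of the transistor arithmetic in the comment above. The counts used here are the publicly quoted figures for each die (TU102 as the previous-gen comparison point, GA102, and Navi 21 for the 6900 XT); they are assumptions added for illustration and do not appear in the comment itself.

```python
# Publicly quoted transistor counts, in billions (assumed figures, not from the comment).
TRANSISTORS_BN = {"TU102 (Turing)": 18.6, "GA102 (Ampere)": 28.3, "Navi 21 (RDNA2)": 26.8}

# Generational growth: GA102 relative to its assumed predecessor, TU102.
growth = TRANSISTORS_BN["GA102 (Ampere)"] / TRANSISTORS_BN["TU102 (Turing)"] - 1
# Absolute lead of GA102 over Navi 21.
lead = TRANSISTORS_BN["GA102 (Ampere)"] - TRANSISTORS_BN["Navi 21 (RDNA2)"]

print(f"GA102 vs TU102: +{growth:.0%} transistors")
print(f"GA102 vs Navi 21: {lead:.1f}bn more transistors")
```

With these figures GA102 carries roughly 52% more transistors than TU102 and about 1.5bn more than Navi 21, consistent with the ">50%" and "more than the 6900 XT" claims.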

Is it really Nvidia putting in too many transistors...? Remember, this node has lower peak clocks, so it's going to hit a turning point early as you scale up. If a node can't support big chips well, isn't it just a node with more limitations than the others? It's not the smallest, either. Nor is it the more efficient one with smaller chips. I have no conclusive answer tbh, but the competition offers perspective.

Ampere's design, had it been on TSMC 7nm, would probably sit 20-40W lower with no trouble, depending on which SKU you look at. But even so, those chips are still effin huge... and I actually see Nvidia making an 'AMD' move here, going bigger and bigger and making their chips harder to market in the end. There are limits to size; Turing was already heavily inflated, and the trend seems to continue as Nvidia plans for full-blown RT weight going forward. It is really as predicted... I think AMD is being VERY wise postponing that expansion of GPU hardware. Look at what's happening around us: shortages don't accommodate mass sales of huge chips at all. Gaming for the happy few won't ever survive very long. I think Nvidia is going to be forced to either change or price itself out of the market.

And meanwhile, we don't see massive aversion to less capable RT chips either. It's still 'fun to have' but never 'can't be missed'; only a few are on that train, as far as I can see. It's still just a box of effects that can be achieved in other ways, and devs still do that, even primarily so. Consoles not being very fast in RT is another nail in the coffin, and those are certainly going to last longer than Ampere or Nvidia's next gen. I think the jury is still out on what the market consensus is going to be, but multi-functional chips that can do a little bit of RT OR get used for raw performance are the only real way forward, long term. RT effects won't get easier. Another path I'm seeing is a hard limit (or baseline) of non-RT performance across the entire stack, with just the RT bits getting scaled up once the tech has matured in gaming. But something's gonna give.

We should not be surprised if CUDA efficiency has reached its peak. I think they already got there with Maxwell, and Pascal cemented it with high peak clocks, power delivery tweaks and small feature cuts. Given a good node, what they CAN do is clock CUDA a good 300 MHz higher again. More cache has also been implemented since Turing (and Ampere added more, I believe). It's gonna be interesting to see what they'll try next.

oh right, Nvidia spent it on ray tracing and tensor cores, good investment :shadedshu:

The fact that a 5-year-old card still runs everything even beyond 1080p only underlines that point. RT is a desperate attempt to create new demand out of a few lights and shadows, not better gaming in any way.

Telemetry data shows that most current-gen console users prefer to play in the 60fps performance mode rather than ray tracing mode, because that's what makes the experience better.

Nothing feels better than firing up a new game and seeing how smooth it runs at 144Hz; now that dream is a lot farther away unless you buy $1,400 GPUs every gen or two.

Software is the reason why I can't make it more stable at higher levels. They put BIOS-flashing software in all the recent driver updates, so they flashed everyone's cards without them knowing, and so they capped me...

That's the reason why I'm selling the fastest MINING BULLETPROOF DEATH MACHINE CARD on eBay right now. 300+ fps all day, 200+ at 4K; at that point it's up to your CPU and power supply. But she can mine at peaks of 125.5 MH/s at 116 W, with a 70 to 90 MH/s average all day.

Just replace the RAM and VRM thermal pads after 2 months of hard use.

Look mama, I learned something today.