Monday, June 6th 2022

Intel LGA1851 to Succeed LGA1700, Probably Retain Cooler Compatibility

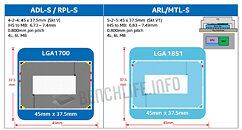

Intel's next-generation desktop processor socket will be the LGA1851. Leaked documents indicate that the new socket has the same dimensions as the current LGA1700 despite its higher pin count. That could mean cooler compatibility between the two sockets, much as the LGA1200 retained cooler compatibility with prior Intel sockets going all the way back to the LGA1156. The current LGA1700 will serve only two generations of Intel Core: the 12th Generation "Alder Lake" and the next-gen "Raptor Lake," due later this year. "Raptor Lake" will be Intel's last desktop processor built on a monolithic die, as the company transitions to multi-chip modules.

Intel Socket LGA1851 will debut with the 14th Gen Core "Meteor Lake" processors, due in late 2023 or 2024, and will remain in use through the 15th Gen "Arrow Lake." Because "Meteor Lake" is a 3D-stacked MCM with a base tile stacked below logic tiles, the company is adjusting the IHS thickness so that the overall package thickness matches that of LGA1700 processors, which would be key to cooler compatibility alongside the socket's physical dimensions. Intel probably added pins to the LGA1851 by eating into the "courtyard" (the central gap in the land grid), because the company states that the pin pitch hasn't changed from LGA1700.

Sources:

BenchLife.info, VideoCardz

197 Comments on Intel LGA1851 to Succeed LGA1700, Probably Retain Cooler Compatibility

Even when I went from a 1700X to a 2700X, I went from X370 to X470. Then to X570 when I bought my 3900X.

because the performance gain from them to anything prior to 8th gen intel was basically nothing.

My X370, on the other hand: the Ryzen 1400 it started with and the 5600X I'm putting in it are worlds apart.

You do things a certain way because you had to. You're used to it.

Given wings you'd just walk around because you've always had those legs, everything's designed for legs and you're just gunna leg it like you always have and everyone else has.

You know, except everything else with wings who thinks you're daft for not realizing the obvious freedom you have by using that option.

Now get off my lawn you dirty kids before I tell your ma!

Only because Intel has trained you well over the years

One board, only two chip series for Intel.

I might want to upgrade from a 10900K, but the 11900K is my only option and it's a downgrade, seeing as I'd lose 2 cores and gain only a little single-core performance. So big whoop on that so-called upgrade path.

The 12900K is not an option because it obviously requires a new board. I'm sure a lot of people would like to upgrade; maybe not everyone, but far more than you might think.

I read all the time about AMD folks upgrading from a years-old series to near-newest. Boy, that sure would be nice to do on an Intel platform, but sadly it is not an option.

X99, EOL: Haswell-E, and Broadwell-E was no prize either; it did have a 10-core, but it was stupidly priced.

X299, EOL: about the only Intel platform that had 3 series options (79-99-109), but frankly the 79 was a thermally defective series that should never have been created.

Just to fill in one or two of those dots if you don't mind

I've only gone with Intel platforms in the past, and I find it silly to EOL so many of my boards just because Intel requires it.

Funny that Intel chose the "lakes" naming scheme. I wonder how many real lakes would be filled by all these silly socket changes :laugh:

Some would buy Intel, but Intel makes platforms with No upgrade path besides storage and GPU.

I personally wouldn't buy gen 1 of a new architecture; gen 2 is always better, so I would never have an upgrade path via Intel.

But Intel does power my two laptops, I'm no Intel hater.

But.

When it comes to a chip mounted on a circuit board (substrate = pin-in to pin-out adapter) that's put in a socket on a circuit, having its socket swapped ad nauseam just to push board sales.

I have a problem with that.

Also, who in their right mind that keeps their CPU for 5 to 7 years buys an almost 2-year-old CPU? Because that's how old the 5950X is.

X370 and B350 are completely outdated right now, and besides the 3D, every other CPU that is supported is 2 years old. So yeah, not a great option.

The 12900K scores 28k at 156 watts and 15k+ at 35W, making it the most efficient CPU on planet Earth. I've uploaded results in the CBR23 thread.

Maybe 2nd generation, maybe not.

@fevgatos, stepped upgrades, clearly beyond you, but you could have bought a 2600X and X470 (I don't do 1st gen), then bought a 3800X a year later.

Then buy an X570, then buy a 5950X.

Stepped upgrades, not the most extreme example you spout of a B350 and a 1700 to a 5950X.

Some also sell systems on cheaply, so they can leverage that to their own system's advantage.

As for if that would be better, that's a personal choice depending on your own use case.

I'm not saying my way is best, I am saying my way suited me best.

And it's a choice I would rather have than NOT have.

Plus, 8700K to Alder Lake would have been two to three years of lacking performance in x86 tasks, go you.

That's called an opinion, not a fact.

I hit no snags doing it my way, and all it took was knowing what to buy, when, and what it supported. Dramatically hard, I know.

All your points against are still aimed at a B350 owner going through the series of CPUs.

Great understanding of what I said there.

On the other hand there are multiple benefits to what Intel is doing so...

Total power (wattmeter) is 25W idle.

When we talk about that extreme consumption, only the torture scenario is taken into account. In the real world, consumption is much, much lower.

This system does not exceed 300Wh per day in 8 hours of operation (www, multimedia, Office, WoT and some old games).

I will upgrade to 10500 only when the AV1 codec completely replaces VP9 on YouTube and Netflix.

Ironically, without the integrated graphics processor I would have had to buy a much more expensive video card than the motherboard under discussion on this topic. :laugh:

The 11600K and 3070 Ti eat ~400W in AAA games. At the factory settings, it goes to 500W.

Or they're all good.

In other words, it is important to buy what is cheaper and offers better performance for your requirements. I see a problem if you buy the X processor because it's better in gaming but you don't play, or you don't have a video card to highlight it. How does the 5800X3D help a 6500 XT?

What I do with more cores is my business alone, but I will say every PC I have owned in the last ten years spent its time on and working at 100%, 24/7, 362 (it does require maintenance).

I can think of only two benefits: one, IO improvement (I updated the mobo, same CPU, after 16 months for this anyway); two, Intel's bottom line...

F#@k Intel's bottom line.

@Gica I have no clue what you're on about. I have my systems listed; a 6500 XT isn't on it.