Thursday, July 17th 2008

Three New NVIDIA Tools Help Devs Quickly Debug and Speed Up Games

Today's top video games use complex programming and rendering techniques that can take months to create and tune to reach the image quality and silky-smooth frame rates that gamers demand. Thousands of developers worldwide, including members of Blizzard Entertainment, Crytek, Epic Games, and Rockstar Games, rely on NVIDIA development tools to create console and PC video games. Today, NVIDIA has expanded its award-winning development suite with three new tools that vastly speed up this development process, keeping projects on track and costs under control.

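The new tools, which are available now, include:

- PerfHUD 6: a graphics debugging and performance analysis tool for DirectX 9 and 10 applications.

- FX Composer 2.5: an integrated development environment for fast creation of real-time visual effects.

- Shader Debugger: helps debug and optimize shaders written in HLSL, CgFX, and COLLADA FX Cg for DirectX and OpenGL.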

More Details on the New Tools

PerfHUD 6 is a new and improved version of NVIDIA's graphics debugging and performance analysis tool for DirectX 9 and 10 applications. PerfHUD is widely used by the world's leading game developers to debug and optimize their games. This new version includes comprehensive support for optimizing games for multiple GPUs using NVIDIA SLI technology, powerful new texture visualization and override capabilities, an API call list, dependency views, and much more. In a recent survey, more than 300 PerfHUD 5 users reported an average speedup of 37% after using PerfHUD to tune their applications.

"Spore relies on a host of graphical systems that support a complex and evolving universe. NVIDIA PerfHUD provides a unique and essential tool for in-game performance analysis," said Alec Miller, Graphics Engineer at Maxis. "The ability to overlay live GPU timings and state helps us rapidly diagnose, fix, and then verify optimizations. As a result, we can simulate rich worlds alongside interactive gameplay. I highly recommend PerfHUD because it is so simple to integrate and to use."

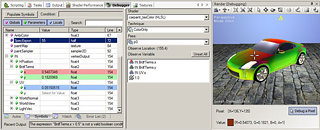

FX Composer 2.5 is an integrated development environment for fast creation of real-time visual effects. FX Composer 2.5 can be used to create shaders in HLSL, CgFX, and COLLADA FX Cg for DirectX and OpenGL. This new release features an improved user interface, DirectX 10 support, ShaderPerf with GeForce 8 and 9 Series support, visual models and styles, and particle systems.

As longer, more complex shaders become pervasive, debugging shaders has become more of a challenge for developers. To assist developers with this task, NVIDIA introduces the brand-new NVIDIA Shader Debugger, a plug-in for FX Composer 2.5 that enables developers to inspect their code while seeing shader variables applied in real time on their geometry. The Shader Debugger can be used to debug HLSL, CgFX, and COLLADA FX Cg shaders in both DirectX and OpenGL.

The NVIDIA Shader Debugger is the first product in the NVIDIA Professional Developer Tools lineup. These are new tools directed at professional developers who need more industrial-strength capabilities and support. For example, the NVIDIA Shader Debugger will run on leading GPUs from all vendors.

Some of the new tools are subject to a license fee, with free versions available for non-commercial use, and are priced to be accessible to developers. Existing free tools (such as FX Composer, PerfHUD, Texture Tools, and SDKs) will not be affected; they will continue to be available to all developers at no cost. Shader Debugger pricing information is available at www.shaderdebugger.com.

NVIDIA encourages developers to visit its developer website and its developer tools forums.

Source: NVIDIA

19 Comments on Three New NVIDIA Tools Help Devs Quickly Debug and Speed Up Games

And the chances of optimized games for ATI would be......?

....unless NV comes up with its DX10.1 fleet.

DX10 is not that special. I'm glad Microshaft is moving to DX11...

Speaking of... @ bta: Is DX11 going to be backwards compatible with DX10? Does it fall back to DX10 if the card doesn't support it, like DX10.1 does?

EDIT: although the crap they pulled with the Assassin's Creed patch makes them deserve the "snitch SOB" label. :laugh:

Now on to DX11: I am kinda glad they are developing it, but it worries me from a performance point of view that, because Nvidia is the closer partner, they will be watching the API's development closely. And since DX11 is one or two years away, Nvidia has given itself a bit of a head start in getting its GTX-whatever-the-heck series ready for DX11 games.

Here is a list of AMD/ATI development tools for Radeon products:

- AMD Tootle (Triangle Order Optimization Tool)
- ATI Compress
- CubeMapGen
- GPU Mesh Mapper
- GPU PerfStudio
- GPU ShaderAnalyzer
- Normal Mapper
- OpenGL ES 2.0 Emulator
- RenderMonkey™
- The Compressonator
- AMD Stream™
- HLSL2GLSL

More info on each can be found here: developer.amd.com/gpu/Pages/default.aspx

I heard Postal 3 is going to be powered by the Source engine and it has wide environments so these tools might work to keep ATI on the playing field.

I don't see the point of moving on to DX11 until they sort out DX10. Over half of the 'issues' we face with DX10 are due to lack of development exposure. If DX11 is just going to be a rehash of the core architecture introduced with DX10, then OK, but if it storms in with new pipes, that's just going to screw things up.

If Nvidia is smart enough to help developers out, then they deserve to have companies willing to work with them... Perhaps ATI should start working with MS on this as well, then?

I'm holding out for the 5000 series. If the 5950 doesn't outperform 4870x2 (sounds hard to beat) then I'll get a cheap 4870x2 next year.