NVIDIA GeForce RTX 50 Series Faces Compute Performance Issues Due to Dropped 32-bit Support

PassMark Software has identified the root cause behind unexpectedly low compute performance in NVIDIA's new GeForce RTX 5090, RTX 5080, and RTX 5070 Ti GPUs. The culprit: NVIDIA has silently discontinued support for 32-bit OpenCL and CUDA in its "Blackwell" architecture, causing compatibility issues with existing benchmarking tools and applications. The issue surfaced when PassMark's DirectCompute benchmark returned the error code "CL_OUT_OF_RESOURCES (-5)" on RTX 50 series cards. After investigating, the developers confirmed that although the benchmark's primary application has been 64-bit for years, several compute sub-benchmarks still use 32-bit code that worked correctly on RTX 40 series and earlier GPUs. NVIDIA did not clearly document this change, and its developer website continues to display 32-bit code samples and documentation even though actual support has been removed.
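For developers who want to check whether their own tools might hit the same failure, the diagnosis described above can be sketched in a few lines of Python. This is a minimal illustration, not PassMark's code: the helper names `pointer_width_bits` and `describe_cl_failure` are hypothetical, the status values are the standard ones from the Khronos OpenCL headers, and the hint that a 32-bit process is the likely cause is an assumption based on this report, not a documented driver behavior.

```python
import struct

# A few standard OpenCL status codes (values from the Khronos cl.h header).
CL_STATUS_NAMES = {
    0: "CL_SUCCESS",
    -1: "CL_DEVICE_NOT_FOUND",
    -2: "CL_DEVICE_NOT_AVAILABLE",
    -4: "CL_MEM_OBJECT_ALLOCATION_FAILURE",
    -5: "CL_OUT_OF_RESOURCES",
    -6: "CL_OUT_OF_HOST_MEMORY",
}


def pointer_width_bits() -> int:
    """Return the pointer width of the running process (32 or 64 on common platforms)."""
    return struct.calcsize("P") * 8


def describe_cl_failure(status: int, process_bits: int) -> str:
    """Hypothetical helper: turn an OpenCL status code into a readable message.

    The 32-bit hint is an assumption drawn from the report above.
    """
    name = CL_STATUS_NAMES.get(status, "UNKNOWN_STATUS")
    message = f"{name} ({status})"
    if status == -5 and process_bits == 32:
        message += ": running as a 32-bit process; try a 64-bit build"
    return message


if __name__ == "__main__":
    # Report how the current interpreter would be classified.
    print(describe_cl_failure(-5, pointer_width_bits()))
```

The same check applies to any host language: what matters is the bitness of the process that calls into the OpenCL or CUDA runtime, not the bitness of the operating system.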

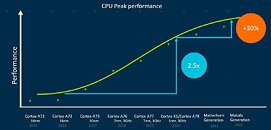

The impact extends beyond benchmarking software. Applications built on legacy 32-bit CUDA code, including technologies like PhysX, will see significant performance degradation because their compute work falls back to the CPU instead of running on the GPU's parallel hardware. The fallback keeps older applications running, but where the RTX 40 series and earlier hardware could execute this code on the GPU, the RTX 50 series handles it exclusively on the CPU, resulting in substantially lower performance. PassMark is porting the affected OpenCL code to 64-bit so that the new GPUs' compute capabilities can be tested properly. However, the company warns that many existing applications containing 32-bit OpenCL components may never function properly on RTX 50 series cards without source code modifications. The benchmark developer also notes that this change does not fully explain poor DirectX 9 performance, suggesting that additional architectural changes may affect legacy rendering paths. PassMark updated its software today, but legacy benchmarks could still suffer. Below is an older benchmark run without the latest PassMark V11.1 build 1004 patches, showing how much the newest generation suffers without proper software support.
