Intel Gen12 Xe iGPU Could Match AMD's Vega-based iGPUs

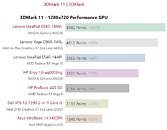

Intel's first integrated graphics solution based on its ambitious new Xe graphics architecture could match AMD's "Vega"-based iGPU solutions, such as the one found in its latest Ryzen 4000 series "Renoir" processors, according to leaked 3DMark FireStrike numbers put out by @_rogame. Benchmark results of a prototype laptop based on Intel's "Tiger Lake-U" processor surfaced on the 3DMark database. This processor embeds Intel's Gen12 Xe iGPU solution, which is purported to offer significant performance gains over current Gen11 and Gen9.5 based iGPUs.

The prototype 2-core/4-thread "Tiger Lake-U" processor with Gen12 graphics yields a 3DMark FireStrike score of 2,196 points, with a graphics score of 2,467 and a physics score of 6,488. These scores are comparable to those of 8 CU Radeon Vega iGPU solutions. "Renoir" tops out at 8 CUs, but shores up performance to the level of the 11 CU "Picasso" by other means. Apart from tapping into the 7 nm process to increase engine clocks, improving the boosting algorithm, and modernizing the display and multimedia engines, AMD's iGPU is largely based on the same 3-year-old "Vega" architecture. Intel Gen12 Xe makes its debut with the "Tiger Lake" microarchitecture slated for 2021.
