Intel Xeon W9-3495X Sets World Record, Dethrones AMD Threadripper

When Intel announced its 4th generation Xeon-W processors, the company confirmed that the clock multiplier was left unlocked, giving overclockers room to push these chips even harder. It was only a matter of time before the top-end Xeon-W SKU took a shot at the Cinebench R23 world record. The Intel Xeon W9-3495X is now officially the world record holder with a score of 132,484 points in Cinebench R23. The overclocker OGS from Greece pushed all 56 cores and 112 threads of the CPU to a 5.4 GHz clock frequency using a liquid nitrogen (LN2) cooling setup. The record was set on March 8th using an ASUS Pro WS W790E-SAGE SE motherboard and a G.SKILL Zeta R5 RAM kit.

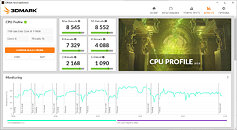

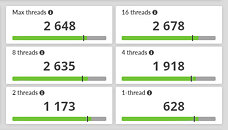

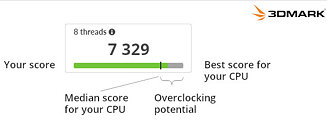

The previous record holder was AMD's Threadripper Pro 5995WX, with 64 cores and 128 threads clocked at 5.4 GHz. The Xeon W9-3495X not only took the Cinebench R23 record but also set new records in Cinebench R20, Y-Cruncher, the 3DMark CPU test, and Geekbench 3.