NVIDIA CMP 170HX Mining Card Tested, Based on GA100 GPU SKU

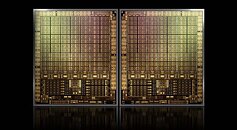

NVIDIA's Cryptocurrency Mining Processor (CMP) series of cards is built for a single purpose: mining cryptocurrency. Their functionality is therefore limited, and they cannot be used for gaming the way regular GPUs can. Today, Linus Tech Tips got hold of NVIDIA's CMP 170HX mining card, which is not listed on the company website. According to the source, the card runs on NVIDIA's GA100-105F GPU, a variant of the regular GA100 SXM design used in data-center applications. Unlike its bigger brother, the GA100-105F SKU is a cut-down design with 4480 CUDA cores and 8 GB of HBM2E memory. The complete design has 6912 cores and comes in 40 GB and 80 GB HBM2E memory configurations.

As for the choice of 8 GB of HBM2E memory, the Ethereum DAG file is under 5 GB, so the 8 GB memory buffer is sufficient for mining any coin out there. The card is powered by an 8-pin CPU power connector and draws about 250 Watts. Its power limit can be lowered to 200 Watts while retaining the 165 MH/s hash rate for Ethereum. This reference design is manufactured by NVIDIA and has no active cooling, as it is meant to be cooled in high-density server racks. Only a colossal heatsink is attached, meaning that the airflow needs to come from a third party. As far as pricing is concerned, Linus managed to get this card for $5000, making it a costly mining option.
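To put those figures in perspective, here is a rough back-of-the-envelope calculation in Python. It uses only the numbers quoted above (165 MH/s, 250 W stock, 200 W tuned, $5000). The function and variable names are illustrative, and the results are simple ratios, not measured values.

```python
# Back-of-the-envelope efficiency figures for the CMP 170HX,
# using only the numbers quoted in the article.

HASH_RATE_MHS = 165.0    # Ethereum hash rate reported by the source
STOCK_POWER_W = 250.0    # approximate stock power draw
TUNED_POWER_W = 200.0    # reduced power limit, same hash rate
PRICE_USD = 5000.0       # what Linus reportedly paid


def mh_per_watt(hash_rate_mhs: float, power_watts: float) -> float:
    """Return mining efficiency in MH/s per watt."""
    return hash_rate_mhs / power_watts


stock_eff = mh_per_watt(HASH_RATE_MHS, STOCK_POWER_W)
tuned_eff = mh_per_watt(HASH_RATE_MHS, TUNED_POWER_W)

# Relative efficiency gain from lowering the power limit, in percent.
gain_pct = (tuned_eff / stock_eff - 1.0) * 100.0

# Purchase cost per unit of hash rate.
usd_per_mhs = PRICE_USD / HASH_RATE_MHS

print(f"Stock: {stock_eff:.3f} MH/s per W")
print(f"Tuned: {tuned_eff:.3f} MH/s per W")
print(f"Efficiency gain: {gain_pct:.0f}%")
print(f"Cost: ${usd_per_mhs:.2f} per MH/s")
```

Under these assumptions, dropping the power limit from 250 W to 200 W improves efficiency by about a quarter, while the purchase price works out to roughly $30 per MH/s.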
