Wednesday, July 21st 2021

NVIDIA Multi-Chip-Module Hopper GPU Rumored To Tape Out Soon

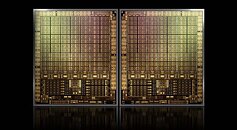

Hopper is an upcoming compute architecture from NVIDIA, and it will be the company's first to feature a Multi-Chip-Module (MCM) design, similar to Intel's Xe-HPC and AMD's upcoming CDNA2. The Hopper architecture has been teased for over two years, but it appears to be nearing completion, with a recent leak suggesting the product will tape out soon. This compute GPU will likely be manufactured on TSMC's 5 nm node and could feature two dies, each with 288 Streaming Multiprocessors, which could theoretically provide a three-fold performance improvement over the Ampere-based NVIDIA A100. The first product to feature the GPU is expected to be the NVIDIA H100 data center accelerator, which will serve as a successor to the A100 and could potentially launch in mid-2022.

Sources:

@3DCenter_org, VideoCardz

35 Comments on NVIDIA Multi-Chip-Module Hopper GPU Rumored To Tape Out Soon

More serious... So far this seems to just be oriented at other markets, but eventually some cut-down version should reach us, especially if you consider the die sizes and power on current top-end cards. Not much headroom there.

www.pcgamesn.com/amd-navi-monolithic-gpu-design
I too expect the day to come for MCM to trickle down to the consumer space. But it seems AMD/nVidia are more at the mercy of Microsoft and game developers. It is a MUCH needed evolution of graphics card design for so many reasons: cost, scalability, and yields, just to name a few big ones.

"Tapout" is like saying something is giving up, so this is a poor choice of words. Even "tape out" really makes no sense.

But some sort of internal split render tech should work I suppose. But even those technologies incur a latency hit on multi GPU already.

All thanks to people buying cards regardless of the price. Thanks everyone!

I think there is about a 50% chance RDNA3 is mGPU based. If it isn't, I suspect the next Radeon Pro/Killer Instinct cards will be.