NVIDIA Prepares H100 NVL GPUs With More Memory and SLI-Like Capability

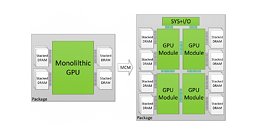

NVIDIA has killed SLI on its graphics cards, removing the option of connecting two or more GPUs to harness their combined power for gaming and other workloads. However, SLI is making a comeback of sorts today in the form of a new H100 GPU model that sports higher memory capacity and higher performance. Called the H100 NVL, the GPU is a special-edition design based on the regular H100 PCIe version. What makes the H100 NVL version so special is the boost in memory capacity, up from 80 GB in the standard model to 94 GB per GPU in the NVL edition SKU, for a total of 188 GB of HBM3 memory across the pair, running on a 6144-bit bus. Being a special-edition SKU, it is sold only in pairs: the two H100 NVL GPUs are connected to each other by three NVLink connectors on top. Installation requires two PCIe slots, separated by dual-slot spacing.

The performance gap between the H100 PCIe version and the H100 SXM version is now closed with the new H100 NVL, as the card features a higher, configurable TDP of up to 400 Watts per card. The H100 NVL uses the same Tensor and CUDA core configuration as the SXM edition, except that it sits in a PCIe slot and is connected to another card. Because the cards are sold in pairs, OEMs can outfit their systems with either two or four pairs per certified system. You can see the specification table below, with information filled out by AnandTech. According to NVIDIA, the need for this special-edition SKU stems from the emergence of Large Language Models (LLMs) that require significant computational power to run. "Servers equipped with H100 NVL GPUs increase GPT-175B model performance up to 12X over NVIDIA DGX A100 systems while maintaining low latency in power-constrained data center environments," noted the company.
