Thursday, September 3rd 2020

NVIDIA Reserves NVLink Support For The RTX 3090

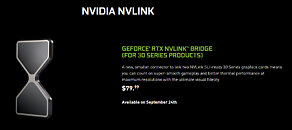

NVIDIA has dealt another major blow to multi-GPU gaming with their recent RTX 30 series announcement. The RTX 3090 will be the only card in this latest generation to support NVLink SLI, and it will require a new NVLink bridge that costs 79 USD. In their Turing range of graphics cards, NVIDIA reserved NVLink support for the RTX 2070 Super, RTX 2080, RTX 2080 Super, and RTX 2080 Ti. The AMD CrossFire multi-GPU solution has also become irrelevant after support for it was dropped with RDNA. Developer support for the feature has declined as well, due to the high cost of implementation, the small user base, and often poor performance improvements. With the NVIDIA RTX 3090 set to retail for 1499 USD, two cards plus the bridge will cost about 3077 USD (2 × 1499 USD + 79 USD), reserving the feature for only the wealthiest of gamers.

Source:

NVIDIA

81 Comments on NVIDIA Reserves NVLink Support For The RTX 3090

I really hope AMD could do something about the situation.

Not only does it require a huge effort in driver and hardware support across a huge variety of games and applications, they also have a financial incentive for its disappearance.

You want 1.5X-2X performance? Without multi-GPU support, you can't just slap in a second-hand card to double up ;)

Also observe the line-ups: for 1.5X-2X performance, you always have to pay more than 2X...

If the fingers on the cards are the same as on previous versions, what is stopping people from using those bridges?

I reckon about 500 quid for a good used one.

trog

The tech never lived up to its initial promises (anyone remember "don't get rid of your old video card, just install a new one on the side and enjoy"?) and support was always spotty.

Also:

www.techpowerup.com/forums/threads/lenovo-equips-legion-t7-with-geforce-rtx-3070-ti-16gb-gddr6.271674/

If you have that much disposable income, get one, by all means. But most of us won't.

and yes that monitor has existed for over 2 years now...

I can't find the article, but there was a review back in the days of the ATI HD 5000 series.

They pitted the 5870 in CrossFire against three 5670s in tri-fire: for less than the price of one 5870, the three cards were beating the dual-card 5870 setup!

The tech has been with us for over 15 years and was never able to break 5% market adoption (if that). It was clearly going nowhere.

store.steampowered.com/hwsurvey/videocard/

The RTX 2080 Ti sits at 0.98%, so why keep making them then?

That's less than 5% too.

Why should NVIDIA keep putting out a feature that fewer than 1% of all buyers use?

The 3090 will more than likely be the "professional" level card and only professionals would be spending that kind of money to have more than one.