Thursday, September 3rd 2020

NVIDIA Reserves NVLink Support For The RTX 3090

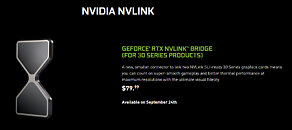

NVIDIA has dealt another major blow to multi-GPU gaming with its recent RTX 30 series announcement. The only card in this generation to support NVLink SLI will be the RTX 3090, and it will require a new NVLink bridge costing 79 USD. In its Turing range, NVIDIA offered NVLink support on the RTX 2070 Super, RTX 2080, RTX 2080 Super, and RTX 2080 Ti. AMD's CrossFire multi-GPU solution has also become irrelevant since AMD dropped support for it with RDNA. Developer support for multi-GPU has declined as well, due to the high cost of implementation, the small user base, and often poor performance scaling. With the RTX 3090 set to retail for 1499 USD, a two-card setup will cost at least 3077 USD, reserving the feature for only the wealthiest of gamers.
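The minimum hardware cost quoted above is simple arithmetic: two cards plus one bridge. A minimal sketch, assuming launch prices only (no taxes, shipping, or a larger power supply) and a two-card configuration; the function name `two_way_nvlink_cost` is hypothetical and used purely for illustration:

```python
# Launch prices quoted in the article (USD); taxes, shipping and other parts are ignored.
RTX_3090_PRICE = 1499
NVLINK_BRIDGE_PRICE = 79


def two_way_nvlink_cost(card_price: int = RTX_3090_PRICE,
                        bridge_price: int = NVLINK_BRIDGE_PRICE) -> int:
    """Hypothetical helper: cost of two cards joined by a single NVLink bridge."""
    return 2 * card_price + bridge_price


print(two_way_nvlink_cost())  # 3077
```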

Source: NVIDIA

81 Comments on NVIDIA Reserves NVLink Support For The RTX 3090

I could see myself building a 3x 3080 combo today if SLI were still supported. This thing would beat a single top-tier Quadro in Premiere by far for less than half the price. Getting 26,000 CUDA cores for $2100 would be insane value. Of course Nvidia couldn't allow this.

For SLI/Crossfire, you need considerable dedicated effort from both your software guys and each developer. And you end up with a product that earns you the "gratitude" of people that spent a lot of money for something that sometimes, maybe happens to work.

Obviously I can't know for sure, but it would be really bizarre. It would be one of the smallest speed bumps ever between a Ti and a non-Ti card.

Have you seen the price/performance??

The 24GB buffer on the 3090 will keep it relevant. Even now, the VRAM is the only reason it is relevant; otherwise, the extra 15% of performance is not worth that much money over the 3080.

I have almost no doubt that if AMD can compete with the 3080, Nvidia is ready to drop a 12GB 3090 and call it a 3080 Ti/3080 Super or whatever for maybe $899-999.

It will certainly take a lot away from the building process. Only one GPU, maybe even some puny 16-lane platform will be enough, and every game will run at the setup's full potential, plug-and-play. That's so boring. I will need to find something to make things interesting again, like 24/7 sub-ambient cooling, or making the system as small as possible.

Goddamn right there! :p

WoW 170 FPS @ 2K with NV Linked RTX 2080 Supers

I thought SLI was already dead and gone. I thought you needed a special software unlock key from nVidia to even use it.

I'm waiting for an nVidia card with <= 250W and 16GB.

What is your FPS like?

I thought NVLink is what enables high-speed data sharing between GPUs and isn't limited to SLI, unless that's the case for GeForce cards.

And as for those who think gaming cards can't run professional machine learning workloads, I guess they just have no idea.

You can rest assured those Supers aren't scaling all that much...

I still feel bad for the rich fellows who will buy more than one 3090 and ruin their gaming experience just for benchmarks and higher but stuttering frame rates.