Thursday, November 21st 2019

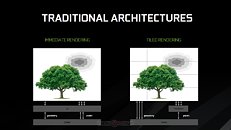

NVIDIA Develops Tile-based Multi-GPU Rendering Technique Called CFR

NVIDIA is invested in the development of multi-GPU, specifically SLI over NVLink, and has developed a new multi-GPU rendering technique that appears to be inspired by tile-based rendering. Implemented at a single-GPU level, tile-based rendering has been one of NVIDIA's many secret sauces for improving performance since its "Maxwell" family of GPUs. 3DCenter.org discovered that NVIDIA is working on a multi-GPU counterpart, called CFR, which could be short for "checkerboard frame rendering" or "checkered frame rendering." The method is already quietly deployed in current NVIDIA drivers, although it is not documented for developers to implement.

In CFR, the frame is divided into tiny square tiles, like a checkerboard. Odd-numbered tiles are rendered by one GPU, and even-numbered ones by the other. Unlike AFR (alternate frame rendering), in which each GPU's dedicated memory has a copy of all of the resources needed to render the frame, methods like CFR and SFR (split frame rendering) optimize resource allocation. CFR also purportedly offers less micro-stutter than AFR. 3DCenter also detailed the features and requirements of CFR. To begin with, the method is only compatible with DirectX (including DirectX 12, 11, and 10), and not OpenGL or Vulkan. For now it's "Turing" exclusive, since NVLink is required (probably its bandwidth is needed to virtualize the tile buffer). Tools like NVIDIA Profile Inspector allow you to force CFR on, provided the other hardware and API requirements are met. It still has many compatibility problems, and remains practically undocumented by NVIDIA.
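The checkerboard split described above can be illustrated with a short Python sketch. This is not NVIDIA's implementation, which is undocumented; the function names, the 64-pixel tile size, and the `(x + y) % 2` ownership rule are assumptions chosen only to show how alternating tiles could be mapped to two GPUs.

```python
def gpu_for_tile(tile_x, tile_y, num_gpus=2):
    """Hypothetical ownership rule: alternate GPUs like squares on a checkerboard."""
    return (tile_x + tile_y) % num_gpus


def assign_tiles(width, height, tile_size, num_gpus=2):
    """Return a 2D grid (rows of tiles) holding the GPU index that owns each tile."""
    tiles_x = -(-width // tile_size)   # ceiling division
    tiles_y = -(-height // tile_size)
    return [
        [gpu_for_tile(tx, ty, num_gpus) for tx in range(tiles_x)]
        for ty in range(tiles_y)
    ]


def tiles_per_gpu(grid, num_gpus=2):
    """Count how many tiles each GPU owns."""
    counts = [0] * num_gpus
    for row in grid:
        for gpu in row:
            counts[gpu] += 1
    return counts


# Assumed example: a 1920x1080 frame with 64-pixel tiles (tile size is a guess).
grid = assign_tiles(1920, 1080, 64)
print(tiles_per_gpu(grid))  # [255, 255]
```

With an even number of tile columns, each GPU ends up with the same number of tiles, and every tile's neighbours belong to the other GPU.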

Source: 3DCenter.org

33 Comments on NVIDIA Develops Tile-based Multi-GPU Rendering Technique Called CFR

In all cases I recall, these techniques sucked because they mandated each gpu still had to render the complete scene geometry, and only helped with fill rate.
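The point about geometry can be put into a rough cost model. The sketch below assumes a frame's cost splits cleanly into a geometry part that every GPU repeats in full and a fill part that divides evenly across GPUs; the function names and the millisecond figures are made up for illustration, not measurements.

```python
def frame_time_ms(geometry_ms, fill_ms, num_gpus):
    """Each GPU processes all geometry but only its share of the pixel fill work."""
    return geometry_ms + fill_ms / num_gpus


def speedup(geometry_ms, fill_ms, num_gpus):
    """Speedup over a single GPU under the same simplified model."""
    single = frame_time_ms(geometry_ms, fill_ms, 1)
    return single / frame_time_ms(geometry_ms, fill_ms, num_gpus)


# Hypothetical frame: 4 ms of geometry work, 12 ms of fill work.
print(frame_time_ms(4.0, 12.0, 2))  # 10.0
print(speedup(4.0, 12.0, 2))
```

Under these assumed numbers two GPUs give about 1.6x rather than 2x, and the gain shrinks further as the geometry share grows.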

Maybe the matrix is just glitching again...

Single-GPU tiled-rendering hardware would use tiles of a static size, but playing around with the tile count per GPU might work?

In legacy game rendering techniques, the input consists of instructions that must be run. There's little control over time - the GPU has to complete (almost) everything or there's no image at all.

So the rendering time is a result (not a parameter) and each frame has to wait for the last tile.
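A toy model of "the frame has to wait for the last tile": frame time is the busiest GPU's total tile cost. The per-tile costs, the 4x4 grid, and the fixed top/bottom split used for comparison are all hypothetical; the sketch only shows why interleaving tiles tends to even out the load when one part of the screen is more expensive.

```python
def frame_time(tile_costs, owner):
    """tile_costs: 2D list of per-tile costs; owner(tx, ty) returns a GPU index.

    The frame is done only when the most heavily loaded GPU finishes.
    """
    totals = {}
    for ty, row in enumerate(tile_costs):
        for tx, cost in enumerate(row):
            gpu = owner(tx, ty)
            totals[gpu] = totals.get(gpu, 0.0) + cost
    return max(totals.values())


# Hypothetical 4x4 tile grid where the bottom half costs 3x as much as the top.
costs = [[1.0] * 4, [1.0] * 4, [3.0] * 4, [3.0] * 4]

split = frame_time(costs, lambda tx, ty: 0 if ty < 2 else 1)  # fixed top/bottom split
checker = frame_time(costs, lambda tx, ty: (tx + ty) % 2)     # checkerboard

print(split, checker)  # 24.0 16.0
```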

In RTRT frame rendering time (i.e. number of rays) is the primary input parameter. It's not relevant how you split the frame. This is perfectly fine:

bit-tech.net/reviews/tech/graphics/crossfire_preview/3/

www.anandtech.com/show/1698/5

Also, back when DX12 was launched there was a lot of hype about how well it would perform with multi-GPU setups using async technologies (independent chips & manufacturers): wccftech.com/dx12-nvidia-amd-asynchronous-multigpu/

Seems like everyone forgot about it...

dumpster.hardwaretidende.dk/dokumenter/nvidia_on_kyro.pdf

Edit:

For a bit of background, this was a presentation to OEMs.

Kyro was technically new and interesting, but as an actual gaming GPU on desktop cards it sucked, due to both spotty support and lackluster performance. It definitely had its bright moments but they were too few and far between. PowerVR could not develop their tech fast enough to compete with Nvidia and ATi at the time.

PowerVR itself went along just fine, the same architecture series was (or is) a strong contender in mobile GPUs.

Or could this possibly be a Zen-like chiplet design to save money and reduce losses on the newest node?

NVLink as the fabric for communication. If only half the resources are actually required (maybe I'm out in left field), put 12GB or 6GB on each chiplet and interleave the memory.

You can't get off with shared memory like that. You are still going to need a sizable part of assets accessible by both/all GPUs. Any memory far away from GPU is evil and even a fast interconnect like NVLink won't replace local memory. GPUs are very bandwidth-constrained so sharing memory access through something like Zen2's IO die is not likely to work on GPUs at this time. With big HBM cache for each GPU, maybe, but that is effectively still each GPU having its own VRAM :)

Chiplet design has been the end goal for a while and all the GPU makers have been trying their hand on this. So far, unsuccessfully. As @Apocalypsee already noted - even tiled distribution of work is not new.

I am saying the IO die could handle memory interleaving between two sets of 6GB VRAM and assign shared and dedicated memory and resources. It's already the same sort of memory management that is used today, but with the ability to share resources across multiple dies, which would also make them a good shared workstation card and allow hardware management of user and resource allocation.